
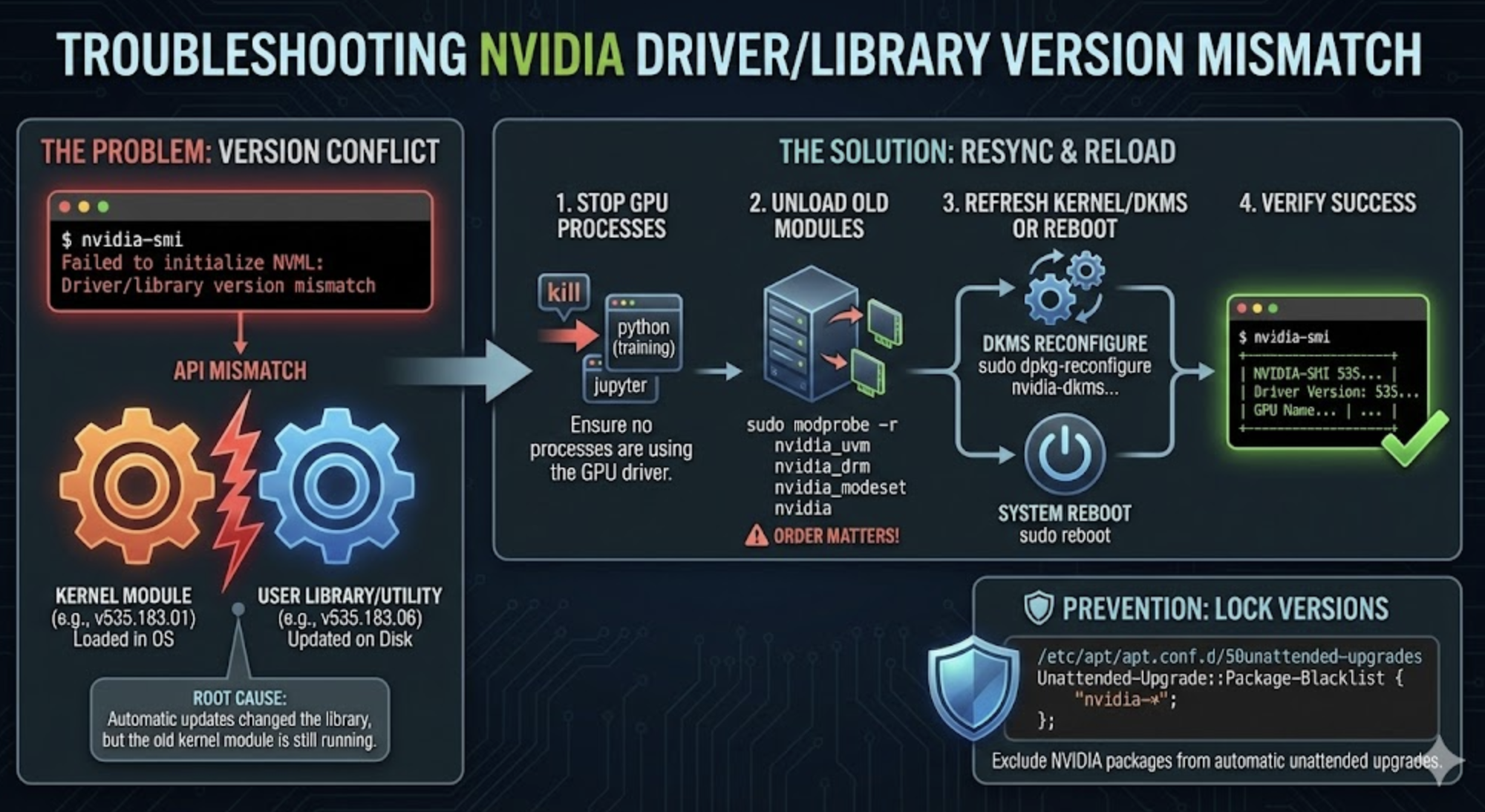

Resolving NVIDIA Driver/Library Version Mismatch Errors

How to fix 'Failed to initialize NVML: Driver/library version mismatch' on GPU servers

Overview

Have you ever installed a GPU driver on your server and encountered the following error when running nvidia-smi?

nvidia-smi

Failed to initialize NVML: Driver/library version mismatch

This error means the loaded NVIDIA kernel module and the user-space NVML library that nvidia-smi uses come from different driver versions. This post explains the root cause, a step-by-step resolution, and how to prevent recurrence.

Problem Symptoms

nvidia-smi

Failed to initialize NVML: Driver/library version mismatch

Check with dmesg:

dmesg | grep NVRM

...

NVRM: API mismatch: the client has the version 535.183.06,

NVRM: but this kernel module has the version 535.183.01.

In this example, the client (the user-space library that nvidia-smi links against) is version 535.183.06, but the loaded kernel module is still 535.183.01, so the kernel module rejects the request.
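To make the comparison explicit, the two versions can be pulled out of the kernel log with a small helper. This is a minimal sketch that assumes the message wording shown above; `nvrm_mismatch_versions` is a hypothetical name, not part of any NVIDIA tool.

```shell
# Extract the two versions from "NVRM: API mismatch" kernel log lines on stdin.
# Prints "client=<ver> module=<ver>", or returns 1 if no such message is found.
nvrm_mismatch_versions() {
    local log client module
    log=$(cat)
    # Take the most recent occurrence of each message.
    client=$(printf '%s\n' "$log" |
        sed -n 's/.*client has the version \([0-9.]*[0-9]\).*/\1/p' | tail -n 1)
    module=$(printf '%s\n' "$log" |
        sed -n 's/.*kernel module has the version \([0-9.]*[0-9]\).*/\1/p' | tail -n 1)
    [ -n "$client" ] && [ -n "$module" ] || return 1
    printf 'client=%s module=%s\n' "$client" "$module"
}

# Usage on a live system (reading the kernel log may require root):
#   sudo dmesg | nvrm_mismatch_versions
```

If the function returns nothing, the kernel log has no API mismatch message and the failure probably has a different cause.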

Root Cause Analysis

- unattended-upgrade automatically updated some NVIDIA packages, but not all components were replaced

- The nvidia-dkms kernel module was not recompiled, so the old version remained loaded

- The kernel module was not reloaded (or the system not rebooted), causing a conflict
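One way to confirm this chain of events is to look for NVIDIA packages in the apt history. The sketch below assumes the default Debian/Ubuntu log location and the usual blank-line-separated stanza layout of that file; `nvidia_apt_stanzas` is a hypothetical helper name.

```shell
# Print apt history stanzas (blank-line separated) that mention NVIDIA packages.
# Reads the log on stdin so it works with plain and rotated (.gz) files alike.
nvidia_apt_stanzas() {
    awk 'BEGIN { RS = ""; ORS = "\n\n" } tolower($0) ~ /nvidia/'
}

# Usage (paths are the distribution defaults, adjust if yours differ):
#   nvidia_apt_stanzas < /var/log/apt/history.log
#   zcat /var/log/apt/history.log.*.gz | nvidia_apt_stanzas
#   dkms status    # lists which kernels each DKMS module was built for
```

A stanza whose Commandline is unattended-upgrade and whose Upgrade line lists NVIDIA packages, dated shortly before the error appeared, points to the cause described above.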

Resolution Steps

1. Check Loaded NVIDIA Kernel Modules

lsmod | grep nvidia

...

nvidia 56713216 ...

nvidia_modeset ...

nvidia_uvm ...

2. Terminate Related Processes

sudo lsof /dev/nvidia* # Run as root so processes owned by other users are listed too

sudo kill -9 <PIDs>

sudo lsof /dev/nvidia* # Repeat until no processes remain
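Since kill -9 gives a training job no chance to flush checkpoints, a gentler variant is to send SIGTERM first and escalate only if a process ignores it. The following is a sketch under that assumption; `stop_pids` and its grace-period argument are hypothetical, not part of the original procedure.

```shell
# Ask each PID to exit with SIGTERM, wait up to <grace> seconds, then SIGKILL
# whatever is still alive.
# Usage: stop_pids <grace-seconds> <pid>...
stop_pids() {
    local grace=$1 pid waited
    shift
    for pid in "$@"; do
        kill -TERM "$pid" 2>/dev/null || continue   # already gone or not permitted
        waited=0
        while kill -0 "$pid" 2>/dev/null && [ "$waited" -lt "$grace" ]; do
            sleep 1
            waited=$((waited + 1))
        done
        if kill -0 "$pid" 2>/dev/null; then
            kill -KILL "$pid" 2>/dev/null
        fi
    done
}

# Usage (as root; lsof -t prints bare PIDs):
#   stop_pids 10 $(lsof -t /dev/nvidia* | sort -u)
```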

3. Unload NVIDIA Modules

sudo modprobe -r nvidia_uvm nvidia_drm nvidia_modeset nvidia

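Note: The unload order matters: nvidia_uvm → nvidia_drm → nvidia_modeset → nvidia. Each module has to be removed before the modules it depends on, so the core nvidia module goes last.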
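Not every server loads all four modules, so it can help to derive the list from lsmod instead of hard-coding it. This sketch only knows the four module names from this post (other modules, such as nvidia_peermem, are not handled), and `nvidia_unload_order` is a hypothetical helper name.

```shell
# Read `lsmod` output on stdin and print the loaded NVIDIA modules in a safe
# unload order: dependents first, the core `nvidia` module last.
nvidia_unload_order() {
    local loaded mod
    loaded=$(awk '{ print $1 }')
    for mod in nvidia_uvm nvidia_drm nvidia_modeset nvidia; do
        if printf '%s\n' "$loaded" | grep -qx "$mod"; then
            printf '%s\n' "$mod"
        fi
    done
}

# Usage:
#   for m in $(lsmod | nvidia_unload_order); do sudo modprobe -r "$m"; done
```

If modprobe -r still reports that a module is in use, a process is holding the device open and step 2 needs to be repeated.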

4. Reinstall DKMS or Reboot

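# Rebuild the module through DKMS (adjust the package name to your driver branch), then load it

sudo dpkg-reconfigure nvidia-dkms-535-server

sudo modprobe nvidia

# or

sudo reboot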

5. Verify Normal Operation

nvidia-smi

# Should display driver and CUDA version, GPU list, and no errors
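Beyond eyeballing the nvidia-smi output, it can be useful to check that the module currently loaded is the same version as the one installed on disk, since a rebuilt module has no effect until it is reloaded. The sketch below assumes the loaded version is exposed at /sys/module/nvidia/version and that modinfo can find the installed module; `driver_state` is a hypothetical helper name.

```shell
# Compare the loaded kernel module version ($1) with the on-disk version ($2).
driver_state() {
    if [ -z "$1" ]; then
        echo "not-loaded"
    elif [ "$1" = "$2" ]; then
        echo "ok $1"
    else
        echo "stale loaded=$1 on-disk=$2"
    fi
}

# Usage on a live system:
#   driver_state "$(cat /sys/module/nvidia/version 2>/dev/null)" \
#                "$(modinfo -F version nvidia)"
```

A "stale" result means the old module is still in memory and the unload steps (or a reboot) are still pending.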

Prevention: Block Unattended Upgrades for NVIDIA Packages

To prevent similar issues, exclude NVIDIA packages from automatic upgrades:

sudo vi /etc/apt/apt.conf.d/50unattended-upgrades

...

Unattended-Upgrade::Package-Blacklist {

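"nvidia-*";

// On Ubuntu the NVML library ships in the libnvidia-compute-* packages, so cover those as well

"libnvidia-*";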

};

Apply the changes:

sudo systemctl restart unattended-upgrades
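To see which installed packages a set of blacklist patterns actually covers, the matching can be approximated in the shell. This sketch assumes entries are regular expressions matched from the start of the package name, which is my understanding of how unattended-upgrades treats them; grep -E is only an approximation of that behaviour, and `is_blacklisted` is a hypothetical helper name.

```shell
# Return 0 if package name $1 matches any of the remaining arguments, each
# treated as a regular expression anchored at the start of the name.
is_blacklisted() {
    local pkg=$1 pat
    shift
    for pat in "$@"; do
        if printf '%s\n' "$pkg" | grep -Eq "^($pat)"; then
            return 0
        fi
    done
    return 1
}

# Usage: list installed NVIDIA-related packages the given patterns would miss.
#   dpkg-query -W -f '${Package}\n' | grep -i nvidia | while read -r p; do
#       is_blacklisted "$p" 'nvidia-*' || echo "not covered: $p"
#   done
#
# The configuration apt actually sees can be inspected with:
#   apt-config dump | grep Package-Blacklist
#   sudo unattended-upgrade --dry-run --debug
```

Because matching starts at the beginning of the name, a pattern like "nvidia-*" does not cover packages whose names begin with "libnvidia-".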

Conclusion

This troubleshooting scenario is common in GPU server operations. For machine learning and deep learning clusters, managing GPU driver versions is especially critical, because a mismatch leaves the GPUs on that node unusable until the driver is reloaded.

Summary:

- nvidia-smi error → kernel module and library mismatch

- Terminate processes + unload modules → manual recovery

- Add NVIDIA packages to unattended-upgrades blacklist to prevent recurrence

By following these steps, you can resolve NVIDIA driver errors and ensure stable GPU operation in your server environment.
