
Resolving VM-to-VM Network Communication Issues in Cockpit

How to overcome macvtap limitations and establish stable VM networking for Kubernetes clusters

Overview

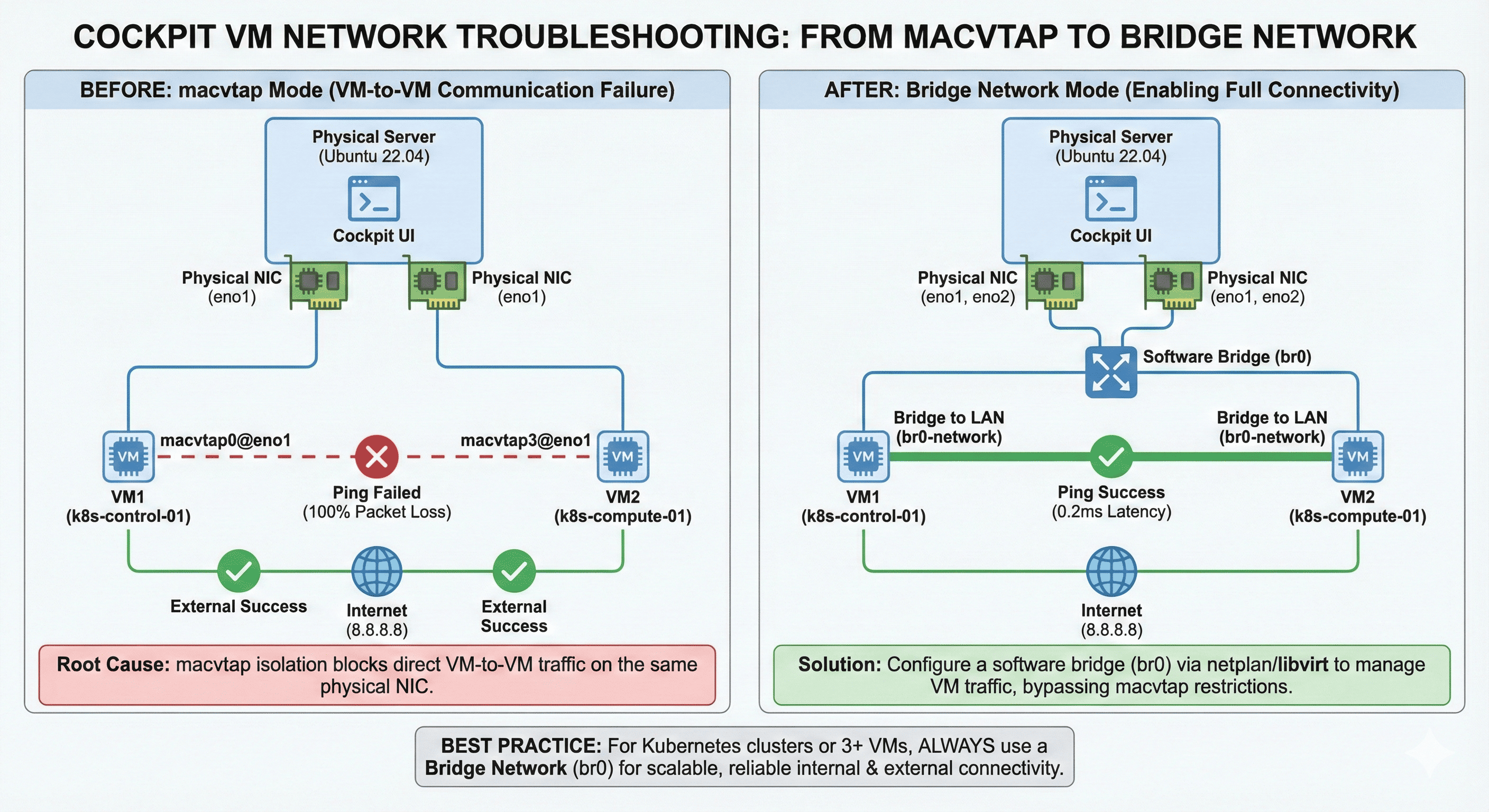

When you manage virtual machines (VMs) with Cockpit, VM-to-VM network problems are common. In particular, VMs attached in “Direct” network mode (macvtap) can usually reach external hosts, yet traffic between VMs on the same host often fails.

This article walks through how I resolved this problem on a production host, step by step, and covers the network design I settled on for a Kubernetes cluster.

Problem Situation

Symptoms:

- VMs are attached to the same physical NIC via macvtap

- VM-to-VM ping fails (100% packet loss)

- External communication works normally

- Pod-to-Pod traffic between nodes is expected to fail once a Kubernetes cluster is built on these VMs

Environment Information:

- Physical Server: Ubuntu 22.04 LTS

- Virtualization: KVM/QEMU with libvirt

- Management Tool: Cockpit Web Console

- Goal: Kubernetes cluster configuration

Method 1: Diagnosing the Problem - macvtap Fundamental Constraints

Current Network Configuration Check

First, I verified the network status of the physical server and VMs.

# Physical server (ubuntu)

root@ubuntu:~# ip a

3: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 90:5a:08:74:aa:21 brd ff:ff:ff:ff:ff:ff

inet 10.10.10.15/24 brd 10.10.10.255 scope global eno1

8: macvtap0@eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP default qlen 500

16: macvtap3@eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP default qlen 500

# VM1 (k8s-control-01)

root@k8s-control-01:~# ip a

3: enp7s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

inet 10.10.10.17/24 brd 10.10.10.255 scope global enp7s0

# VM2 (k8s-compute-01)

root@k8s-compute-01:~# ip a

3: enp7s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

inet 10.10.10.18/24 brd 10.10.10.255 scope global enp7s0

Communication Test Results

# VM-to-VM direct communication (Failed)

root@k8s-compute-01:~# ping 10.10.10.17

PING 10.10.10.17 (10.10.10.17) 56(84) bytes of data.

^C

--- 10.10.10.17 ping statistics ---

1 packets transmitted, 0 received, 100% packet loss, time 0ms

# External communication (Success)

root@k8s-compute-01:~# ping 8.8.8.8

PING 8.8.8.8 (8.8.8.8) 56(84) bytes of data.

64 bytes from 8.8.8.8: icmp_seq=1 ttl=116 time=8.42 ms
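The manual pings above can be repeated across several peers with a small helper. This is only a sketch: the function names ping_loss and check_peers, the PING_COUNT variable, and the 2-second reply timeout are illustrative choices, and the parser assumes the iputils summary line format shown in the output above.

```shell
#!/bin/sh
# ping_loss: read ping output on stdin and print the packet-loss percentage
# taken from the summary line ("... 100% packet loss ...").
ping_loss() {
    sed -n 's/.* \([0-9.]*\)% packet loss.*/\1/p'
}

# check_peers: ping every address given as an argument and report the result.
# PING_COUNT (default 3) and the 2-second timeout are illustrative values.
check_peers() {
    for peer in "$@"; do
        loss=$(ping -c "${PING_COUNT:-3}" -W 2 "$peer" 2>/dev/null | ping_loss)
        if [ "${loss:-100}" = "0" ]; then
            echo "$peer reachable"
        else
            echo "$peer unreachable (${loss:-100}% loss)"
        fi
    done
}

# Example, run inside k8s-compute-01:
#   check_peers 10.10.10.17 8.8.8.8
```

Running the same check from each VM makes it easy to confirm that only the VM-to-VM path is affected.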

Understanding macvtap Constraints

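macvtap Basic Operation Principles:

- In VEPA mode (the libvirt default for direct attachments) and in private mode, macvtap interfaces on the same physical NIC do not exchange traffic directly; in VEPA mode, frames only come back if the upstream switch supports hairpin (reflective relay)

- In bridge mode, guests on the same NIC can reach each other, but the host itself still cannot reach its guests through that NIC

- Communication with external networks works in every mode

The VM-to-VM packet loss on eno1 is consistent with the first case, and this was the core cause of our problem.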
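To see which mode each macvtap device is actually using, the detailed link output from iproute2 can be filtered. The sketch below assumes that ip -d link show prints a "macvtap mode NAME" token for each device, which is what recent iproute2 releases do; the function name macvtap_modes is hypothetical.

```shell
#!/bin/sh
# macvtap_modes: read "ip -d link show type macvtap" output on stdin and
# print one "INTERFACE MODE" pair per macvtap device.
macvtap_modes() {
    awk '
        # Header line of each device, e.g. "8: macvtap0@eno1: ..."
        /^[0-9]+: / { name = $2; sub(/@.*/, "", name); sub(/:$/, "", name) }
        {
            # Detail line contains "macvtap mode vepa" (or bridge, private, ...)
            for (i = 1; i < NF; i++)
                if ($i == "macvtap" && $(i + 1) == "mode")
                    print name, $(i + 2)
        }
    '
}

# Example, run on the physical server:
#   ip -d link show type macvtap | macvtap_modes
```

If a device reports vepa or private, VM-to-VM traffic on the same NIC is not expected to work without help from the switch. The same setting appears as the mode attribute of the source element in the VM's interface definition (visible with virsh dumpxml).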

Method 2: Hardware-Based Solution Review

Additional NIC Utilization Approach

I attempted to activate the second network interface (eno2) and distribute VMs across different NICs.

# netplan configuration

network:
  version: 2
  ethernets:
    eno1:
      dhcp4: no
      addresses: [10.10.10.15/24]
      nameservers:
        addresses: [8.8.8.8]
      routes:
        - to: default
          via: 10.10.10.1
          metric: 100
    eno2:
      dhcp4: no
      addresses: [10.10.10.23/24]
      nameservers:
        addresses: [8.8.8.8]
      routes:
        - to: default
          via: 10.10.10.1
          metric: 200

# Apply and verify results

sudo netplan apply

# Check results

root@ubuntu:~# ip a

2: eno2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

inet 10.10.10.23/24 brd 10.10.10.255 scope global eno2

3: eno1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

inet 10.10.10.15/24 brd 10.10.10.255 scope global eno1

Planned VM Distribution:

- VM1: macvtap@eno1 (10.10.10.17)

- VM2: macvtap@eno2 (10.10.10.18)

However, this approach needs one physical NIC per VM, so with two NICs it supports at most two VMs.

Network Driver Analysis

To find the root cause, I compared this host with another host on which VM-to-VM communication over macvtap worked.

# Environment with good communication (cockpit machine)

root@cockpit:~# sudo ethtool -i enp4s0

driver: igc

version: 6.8.0-57-generic

firmware-version: 1057:8754

# Problematic environment (ubuntu machine)

root@ubuntu:~# sudo ethtool -i eno1

driver: igb

version: 5.15.0-144-generic

firmware-version: 3.30, 0x8000079c

root@ubuntu:~# sudo ethtool -i eno2

driver: atlantic

version: 5.15.0-144-generic

firmware-version: 1.3.30

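Observed Differences:

- igc driver (kernel 6.8.0): VM-to-VM communication over macvtap works on this host

- igb/atlantic drivers (kernel 5.15.0): VM-to-VM communication over macvtap fails on this host

- Kernel version: 6.8.0 vs 5.15.0 (for in-tree drivers, the version field reported by ethtool -i is the kernel version)

These are correlations, not a confirmed cause. Hairpin (reflective relay) is normally a feature of the upstream switch port rather than of the NIC driver, so the macvtap mode and the switch configuration of each host are also worth comparing before blaming the driver.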
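The per-host comparison above can be collected in one pass with a small wrapper around ethtool -i. This is a sketch under the assumption that the output uses the "key: value" layout shown in the listings; nic_driver and compare_nics are hypothetical helper names.

```shell
#!/bin/sh
# nic_driver: read "ethtool -i" output on stdin and print "DRIVER VERSION".
nic_driver() {
    awk -F': ' '
        $1 == "driver"  { drv = $2 }
        $1 == "version" { ver = $2 }
        END { print drv, ver }
    '
}

# compare_nics: print driver and version for every interface name given.
compare_nics() {
    for nic in "$@"; do
        printf '%s: %s\n' "$nic" "$(ethtool -i "$nic" | nic_driver)"
    done
}

# Example, run on the physical server:
#   compare_nics eno1 eno2
```

Collecting the same two fields on both hosts keeps the comparison focused on what actually differs.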

Method 3: Fundamental Solution with Bridge Network Configuration

netplan Bridge Setup

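To work around the hardware constraints in software, I configured a Linux bridge and moved the host IP address onto it. One caution: the configuration below enslaves both eno1 and eno2, which is only safe when the two ports do not lead to the same Layer 2 segment. If both are cabled to the same switch and STP is off, the bridge can form a switching loop; in that case, bridge a single NIC or bond the two.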

# This is the network config written by 'subiquity'

network:
  version: 2
  ethernets:
    eno1:
      dhcp4: no
    eno2:
      dhcp4: no
  bridges:
    br0:
      interfaces: [eno1, eno2]
      addresses: [10.10.10.15/24]
      routes:
        - to: default
          via: 10.10.10.1
      nameservers:
        addresses: [8.8.8.8]

# Apply configuration

sudo netplan apply

# Check bridge status

brctl show

bridge name     bridge id               STP enabled     interfaces
br0             8000.905a0874aa21       no              eno1
                                                        eno2
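On hosts where bridge-utils (brctl) is not installed, bridge membership can also be checked with iproute2. The sketch below parses the one-line output of ip -o link show master; bridge_ports and has_port are hypothetical helper names.

```shell
#!/bin/sh
# bridge_ports: read "ip -o link show master BRIDGE" output on stdin and
# print one member interface name per line.
bridge_ports() {
    awk -F': ' '{ name = $2; sub(/@.*/, "", name); print name }'
}

# has_port: succeed if the interface is a member of the bridge.
# Usage: has_port br0 eno1
has_port() {
    ip -o link show master "$1" | bridge_ports | grep -qx "$2"
}

# Example, run on the physical server:
#   ip -o link show master br0 | bridge_ports
#   has_port br0 eno1 && echo "eno1 is attached to br0"
```

Once VMs are moved to the bridge, their tap devices (typically named vnetN by libvirt) should appear in the same list.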

Creating libvirt Bridge Network

Option 1: Define the network with an XML file

# Create bridge network definition file

cat > /tmp/br0-network.xml << EOF
<network>
  <name>br0-network</name>
  <forward mode='bridge'/>
  <bridge name='br0'/>
</network>
EOF

# Create and start network

sudo virsh net-define /tmp/br0-network.xml

sudo virsh net-start br0-network

sudo virsh net-autostart br0-network

# Verify

sudo virsh net-list --all

 Name          State    Autostart   Persistent
------------------------------------------------
 br0-network   active   yes         yes
 default       active   yes         yes

Changing VM Network in Cockpit

- Virtualization → Networks: Verify new “br0-network”

- Change each VM’s network setting: “Direct” → “br0-network”

- Restart VMs
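The same change can be made from the command line with virsh instead of the Cockpit UI. The following is a sketch, not the procedure used in this article: switch_to_network and run are hypothetical helpers, the MAC address in the example is a placeholder, and the virtio model is an assumption. Because the commands use --config, they take effect the next time the VM is started.

```shell
#!/bin/sh
# run: execute a command, or only print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# switch_to_network: replace a VM's direct (macvtap) NIC with a NIC on a
# libvirt network, keeping the same MAC address.
# Arguments: domain name, MAC of the existing direct NIC, libvirt network name.
switch_to_network() {
    domain=$1 mac=$2 network=$3
    run virsh detach-interface --domain "$domain" --type direct --mac "$mac" --config
    run virsh attach-interface --domain "$domain" --type network \
        --source "$network" --model virtio --mac "$mac" --config
}

# Example (run as root; the MAC address is a placeholder):
#   DRY_RUN=1 switch_to_network k8s-control-01 52:54:00:00:00:17 br0-network
```

Running with DRY_RUN=1 first prints the two virsh commands so they can be reviewed before anything is changed.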

Alternative: Using “Bridge to LAN” option

- Cockpit → Create Network → Forward mode: “Bridge to LAN”

- Bridge: Select “br0”

Method 4: Verification and Testing

VM-to-VM Communication Verification

# Ping from VM1 to VM2

root@k8s-control-01:~# ping 10.10.10.18

PING 10.10.10.18 (10.10.10.18) 56(84) bytes of data.

64 bytes from 10.10.10.18: icmp_seq=1 ttl=64 time=0.231 ms

64 bytes from 10.10.10.18: icmp_seq=2 ttl=64 time=0.187 ms

# Ping from VM2 to VM1

root@k8s-compute-01:~# ping 10.10.10.17

PING 10.10.10.17 (10.10.10.17) 56(84) bytes of data.

64 bytes from 10.10.10.17: icmp_seq=1 ttl=64 time=0.156 ms

64 bytes from 10.10.10.17: icmp_seq=2 ttl=64 time=0.201 ms

Network Performance Measurement

# Bandwidth testing using iperf3

# Run server on VM1

iperf3 -s

# Run client on VM2

iperf3 -c 10.10.10.17 -t 30

Troubleshooting Tips

1. Network Disconnection After Bridge Creation

# Solution: Move IP from physical interfaces to bridge in netplan configuration

# Remove IP from eno1, eno2 and configure only on br0

2. DNS Resolution Issues in VMs

# Solution: Verify nameservers configuration

sudo systemctl restart systemd-resolved

3. Bridge Performance Degradation

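# Disable STP and remove the forwarding delay so ports start forwarding immediately

# Only do this when the bridge has a single uplink; with eno1 and eno2 cabled to the same switch, STP is what prevents a loop

sudo brctl stp br0 off

echo 0 | sudo tee /sys/class/net/br0/bridge/forward_delay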
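Before changing the STP setting, it can help to read the current state from sysfs. The sketch below maps the kernel's stp_state value (0, 1, or 2) to a label; bridge_stp_state is a hypothetical helper, and SYSFS_ROOT is an illustrative override that exists only so the function can be exercised without a real bridge.

```shell
#!/bin/sh
# bridge_stp_state: print "off", "kernel", or "userspace" for a bridge,
# based on the stp_state file under /sys/class/net/BRIDGE/bridge/.
# SYSFS_ROOT defaults to /sys and can be overridden for testing.
bridge_stp_state() {
    file="${SYSFS_ROOT:-/sys}/class/net/$1/bridge/stp_state"
    [ -r "$file" ] || { echo "unknown"; return 1; }
    case "$(cat "$file")" in
        0) echo "off" ;;
        1) echo "kernel" ;;
        2) echo "userspace" ;;
        *) echo "unknown" ;;
    esac
}

# Example, run on the physical server:
#   bridge_stp_state br0
```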

Monitoring Commands

# Monitor bridge status

watch -n1 "brctl showmacs br0"

# Monitor network traffic

iftop -i br0

vnstat -i br0
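The iproute2 counterpart of brctl showmacs is bridge fdb show. As a sketch, the filter below keeps only dynamically learned entries, which shows which MAC address was seen on which bridge port; learned_macs is a hypothetical name, and the field positions assume the usual "MAC dev PORT ... master BRIDGE" layout.

```shell
#!/bin/sh
# learned_macs: read "bridge fdb show br BRIDGE" output on stdin and print
# "PORT MAC" for every learned (non-permanent) entry.
learned_macs() {
    awk '!/permanent/ && /master/ { print $3, $1 }'
}

# Example, run on the physical server:
#   bridge fdb show br br0 | learned_macs
```

A VM whose MAC never appears on its vnet port is not sending traffic into the bridge, which narrows down where to look next.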

Best Practices and Recommendations

For Small Environments (2 VMs)

- VM distribution across different physical NICs is possible

- Bridge configuration recommended for scalability

For Medium to Large Environments (3+ VMs)

- Bridge network configuration is essential

- Use “Bridge to LAN” mode

For Kubernetes Clusters

- Recommend br0-based single network configuration

- Expose services with MetalLB and integrate them with DNS

For Production Environments

- Configure bonding for network redundancy

- Establish monitoring and logging systems

- Develop backup and disaster recovery plans

Conclusion

Through this troubleshooting process, I was able to identify the root cause and solution for VM networking problems in Cockpit.

Key Lessons Learned:

- Understanding macvtap constraints: in VEPA mode, the libvirt default for direct attachments, VM-to-VM traffic on the same physical NIC is not delivered unless the upstream switch reflects it back

- Hardware dependencies: behavior differed between the igc host (kernel 6.8.0) and the igb/atlantic host (kernel 5.15.0), although the driver itself was not isolated as the cause

- Bridge solution: a Linux bridge sidesteps these constraints in software

- Value of simplicity: a single br0-based network is simpler to operate than separate internal and external networks

- Scalability consideration: Start with bridge configuration to prepare for VM expansion

This approach enables building a stable and scalable virtualization environment, particularly ensuring smooth networking for container orchestration platforms like Kubernetes.
