8 min to read

Understanding Ceph: Comprehensive Guide to Distributed Storage Architecture

An in-depth exploration of Ceph's architecture, components, and operational best practices for modern data centers

Overview

Today, we’ll explore the fundamental concepts, architecture, key features, and operational considerations of Ceph distributed storage system.

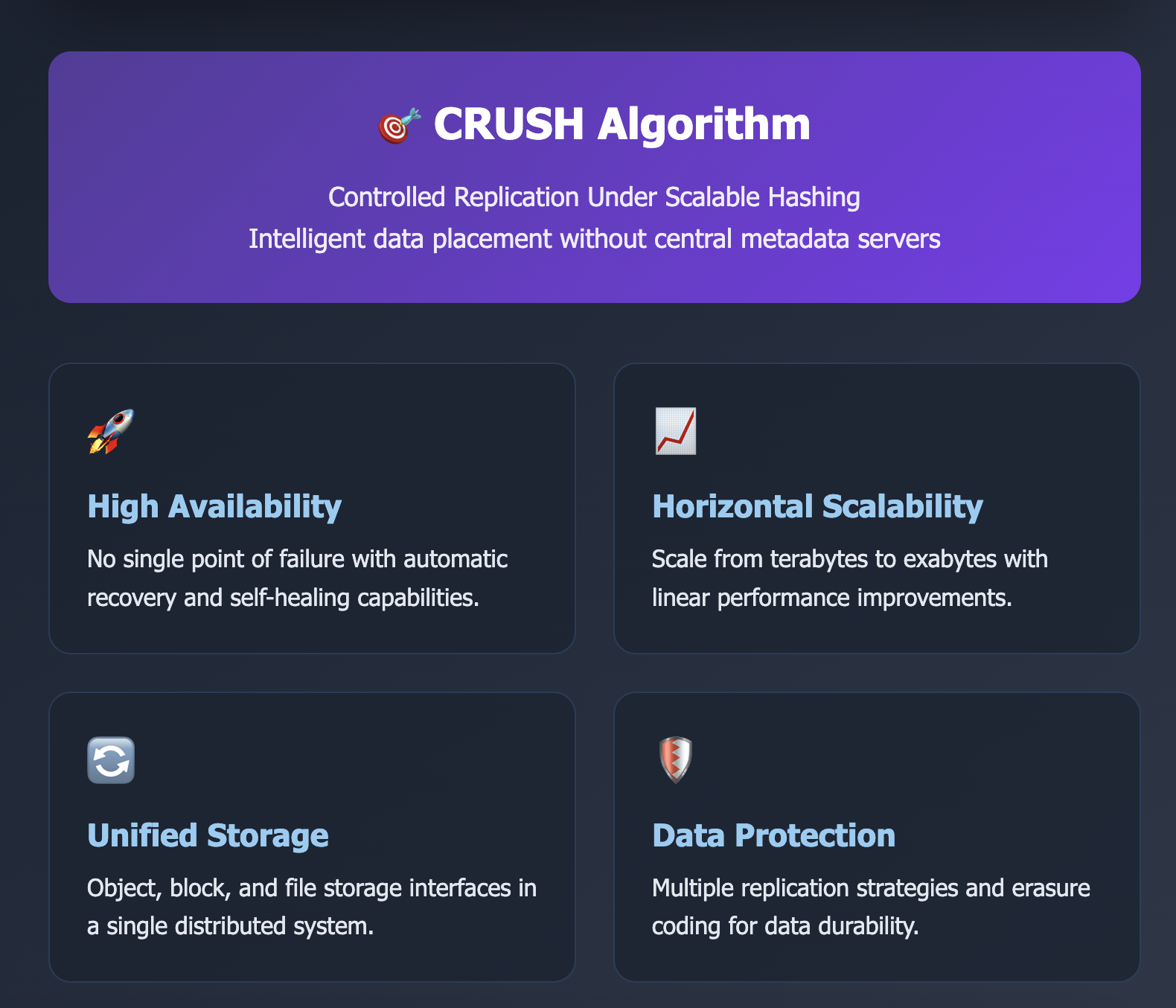

Ceph is an open-source distributed storage system that uniquely supports Object, Block, and File storage within a single unified system. It provides powerful capabilities including High Availability, horizontal Scalability, and Self-Healing, making it widely adopted in cloud environments and large-scale data centers.

This article will cover Ceph’s major architectural components (MON, MGR, OSD, MDS, RGW), the CRUSH algorithm for effective data placement, performance optimization, and operational best practices.

In the next article, we’ll dive deeper into Ceph-Kubernetes integration (Rook-Ceph) and operational automation.

What is Ceph?

Ceph is a distributed storage system that clusters multiple storage devices to appear as a single unified storage system.

It implements object storage on a distributed cluster, providing storage interfaces at object, block, and file levels. The key advantage is that it provides object storage, block storage, and file system capabilities all in one solution.

Ceph offers complete distributed processing without SPOF (Single Point of Failure) and can scale up to exabyte levels. To configure a Ceph Storage Cluster, you need at least one Ceph Monitor, Ceph Manager, and Ceph OSD (Object Storage Daemon). If you want to use Ceph File System clients, you also need a Ceph Metadata Server.

Key Advantages of Ceph

High Availability & Zero Downtime

- No SPOF (Single Point of Failure)

- Automatic recovery when failures occur

Horizontal Scalability

- Scalable up to exabyte levels

- Linear performance improvement with added nodes

Multiple Storage Interface Support

- Supports block, file, and object storage in a single system

- Unified management across different storage types

Open Source Foundation

- Cost-effective solution

- Active community support and development

Key Disadvantages of Ceph

High Hardware Requirements

- Requires substantial memory and disk IOPS performance

- Network bandwidth critical for performance

Complex Configuration

- Initial tuning and cluster setup can be challenging

- Requires expertise for optimal configuration

Recovery Speed

- Erasure Coding-based data recovery can be slow

- Network overhead during rebalancing operations

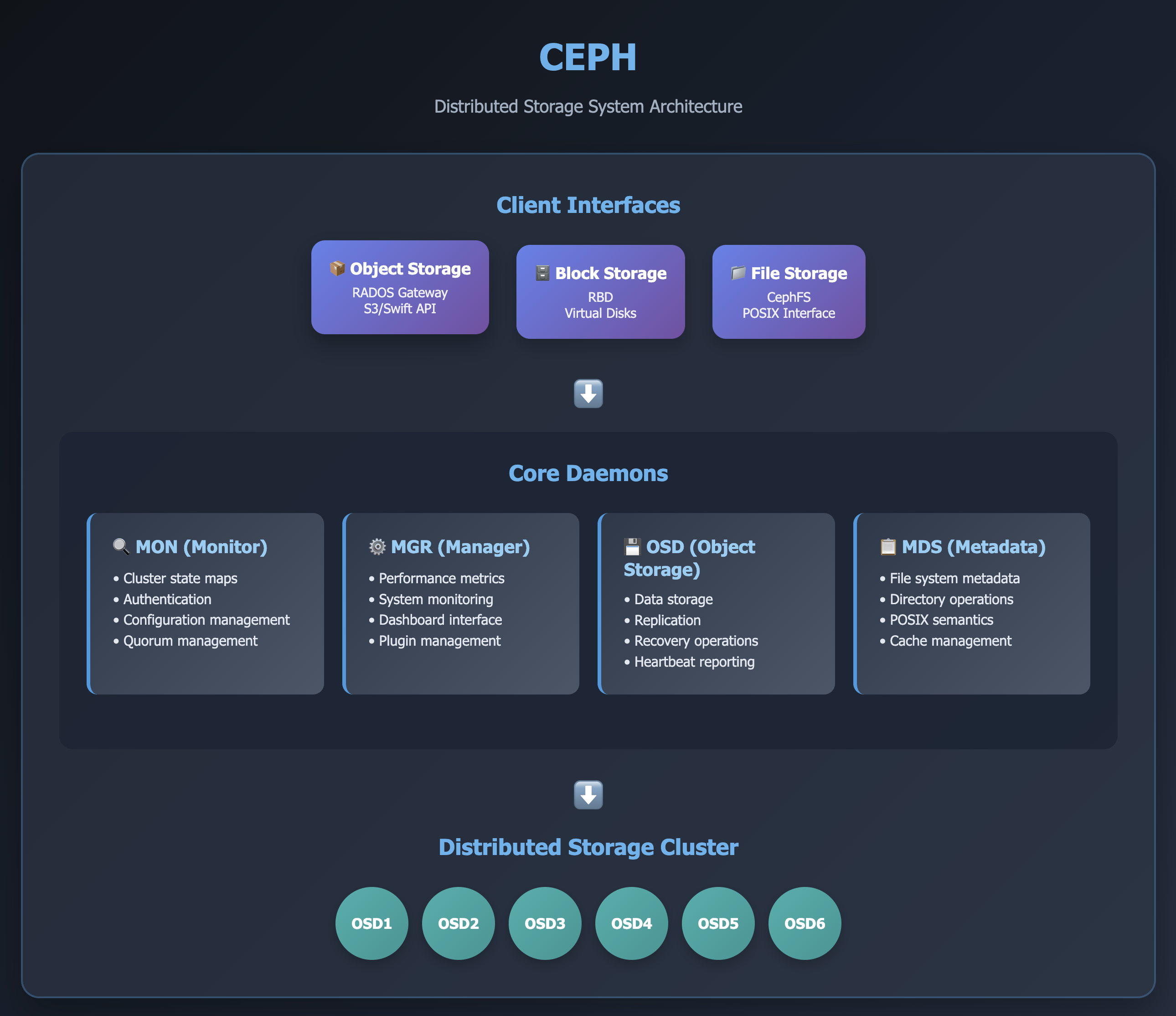

Ceph Architecture and Daemons

Component Overview

Each daemon plays the following roles in the Ceph ecosystem:

Monitors (MON)

- Ceph Monitor (ceph-mon) maintains cluster state maps including:

- Monitor map

- Manager map

- OSD map

- MDS map

- CRUSH map

- Recommendation: Deploy 3 or more monitors for High Availability

Managers (MGR)

- Ceph Manager daemon (ceph-mgr) tracks cluster runtime information:

- Storage utilization

- Performance metrics

- System load

- Recommendation: Deploy 2 or more managers for High Availability

Ceph OSDs

- Ceph OSD (Object Storage Daemon, ceph-osd) handles:

- Data storage

- Replication

- Recovery operations

- Rebalancing

- Heartbeat reporting to Monitors and Managers

- Recommendation: Deploy 3 or more OSDs for High Availability

MDSs (Metadata Servers)

- Ceph Metadata Server (MDS, ceph-mds) stores metadata for Ceph File System

- Note: Not used by Ceph Block Devices or Ceph Object Storage

- Enables POSIX File System users to execute basic commands without burdening the storage cluster

Ceph Object Gateway (RGW)

- RADOS Gateway (ceph-rgw) provides Object Storage layer

- Compatible with Amazon S3 and OpenStack Swift APIs

- RESTful interface for applications

Ceph Client Interfaces

Ceph provides various service interfaces for external data management:

RADOS (Ceph Storage Cluster)

- Scalable, Reliable Storage Service for petabyte-scale storage clusters

- Foundation service for all Ceph data access

- Used when reading and writing objects in Ceph

RADOS Block Device (RBD)

- Ceph Block Device provides block storage through RBD images

- Images consist of individual objects distributed across different OSDs

- Key Features:

- Storage for virtual disks in Ceph cluster

- Linux kernel mount support

- Boot support in QEMU, KVM, and OpenStack Cinder

Ceph Object Gateway (RADOS Gateway)

- Object storage interface built using librados library

- Communicates with Ceph cluster and writes data directly to OSD processes

- Supported APIs: Amazon S3 and OpenStack Swift

- Use Cases:

- Image storage (e.g., SmugMug, Tumblr)

- Backup services

- File storage and sharing (e.g., Dropbox)

Ceph File System (CephFS)

- Parallel file system providing scalable single-tier shared disk

- Metadata managed by Ceph Metadata Server (MDS)

- POSIX-compliant file system interface

Placement Groups (PGs)

Storing and managing millions of objects individually in a cluster is resource-intensive. Therefore, Ceph uses Placement Groups (PGs) to manage numerous objects more efficiently.

Key Concepts:

- PG: A subset of a Pool that contains a collection of objects

- Pool Division: Ceph divides Pools into a series of PGs

- CRUSH Algorithm: Distributes PGs evenly across cluster OSDs considering cluster map and state

Pool Types

Ceph supports data durability through two primary pool types:

Replicated Pool

- Characteristics:

- High durability

- 200% overhead with 3 replicas

- Fast recovery

- Use Case: Performance-critical applications

Erasure Coded Pool

- Characteristics:

- Cost-effective durability

- ~50% overhead

- Expensive recovery process

- Use Case: Long-term storage and archival

CRUSH Algorithm (Controlled Replication Under Scalable Hashing)

Core Algorithm for Distributed Data Placement

Key Features:

- Distributes data evenly across OSDs without central controller

- Uses CRUSH Map to determine data placement

- Applies data replication policies based on storage hierarchy (datacenter, rack, node)

CRUSH Operation Process:

- Object Hashing: Converts objects to hash values

- PG Mapping: Maps to appropriate Placement Groups

- OSD Placement: Uses CRUSH algorithm to place PGs on suitable OSDs

- Failure Recovery: Updates CRUSH Map and performs automatic recovery

CRUSH is the core technology that maximizes distributed storage efficiency without traditional centralized metadata servers.

Performance Tuning and Best Practices

Core Optimization Elements

Increase OSD Count

- More OSDs improve parallel processing performance

- Linear scalability with additional OSDs

SSD-based WAL/DB Configuration

- Place RocksDB on separate SSDs in BlueStore environment

- Significant performance improvement for metadata operations

Network Optimization

- Recommend 10Gbps+ network environment

- Separate public and cluster networks for optimal performance

CRUSH Map Optimization

- Configure failure domains (Availability Zone, Rack, Host)

- Balance data distribution across infrastructure

Erasure Coding Optimization

- Use Replication Pools for performance-critical applications

- Reserve Erasure Coding for cost-sensitive, less frequently accessed data

Ceph Use Cases

OpenStack Integration

- Cinder Backend: Provides block storage for virtual machines

- Swift Replacement: Object storage backend

Kubernetes Persistent Storage

- Rook-Ceph: Cloud-native Ceph deployment and management

- CSI Driver: Dynamic volume provisioning

Large-Scale Deployments

- Facebook: Petabyte-scale data storage

- CERN: Scientific data analysis and storage

Cephadm vs ceph-ansible Comparison

| Feature | Cephadm | ceph-ansible |

|---|---|---|

| Deployment Method | Container-based | Package-based |

| Difficulty | Easy | Relatively Complex |

| Upgrades | Rolling Upgrade Support | Manual Upgrade Required |

| Monitoring | Dashboard Included | Manual Grafana Setup |

| Recommended For | New Deployments | Existing Environment Maintenance |

Production Troubleshooting Scenarios

| Issue Type | Cause | Resolution Method |

|---|---|---|

| OSD Flapping | Disk performance degradation, Network latency | Analyze OSD logs, Replace or tune hardware |

| MON Quorum Instability | Insufficient MON count, Network partition | Maintain 3+ MONs, Check network connectivity |

| Cluster Full Status | Poor capacity management | Configure Pool quotas, Adjust data policies |

| Scrub Errors | Data inconsistency, Disk errors | Perform manual repair, Replace faulty disks |

Monitoring and Maintenance

Essential Monitoring Metrics

- Cluster Health: Overall cluster status and warnings

- OSD Performance: IOPS, latency, and utilization

- Network Utilization: Bandwidth and packet loss

- Pool Statistics: Usage, PG distribution, and scrub status

Regular Maintenance Tasks

- Health Checks: Daily cluster health verification

- Performance Monitoring: Track performance trends

- Capacity Planning: Monitor growth and plan expansion

- Security Updates: Regular software updates and patches

Conclusion

Throughout this article, we’ve explored Ceph’s fundamental concepts, architectural components, major daemon roles, advantages and disadvantages, and essential information for production operations.

Ceph is not just a simple storage system, but a powerful distributed storage solution that provides object, block, and file storage on a single platform. It has established itself as a robust storage backend with flexibility, scalability, and high availability in OpenStack, Kubernetes, and large-scale data storage environments.

However, Ceph is not a “set-and-forget” solution. It requires operational expertise from initial design through performance tuning, continuous monitoring, and incident response procedures.

The content covered today serves as a practical guide for both newcomers to Ceph and engineers building operational experience with the platform.

Key Takeaways:

- Ceph provides unified storage (object, block, file) in a single platform

- CRUSH algorithm enables intelligent data placement without central metadata

- Proper planning and tuning are critical for production success

- Container-based deployment (Cephadm) simplifies modern deployments

- Monitoring and proactive maintenance ensure long-term stability

Comments