
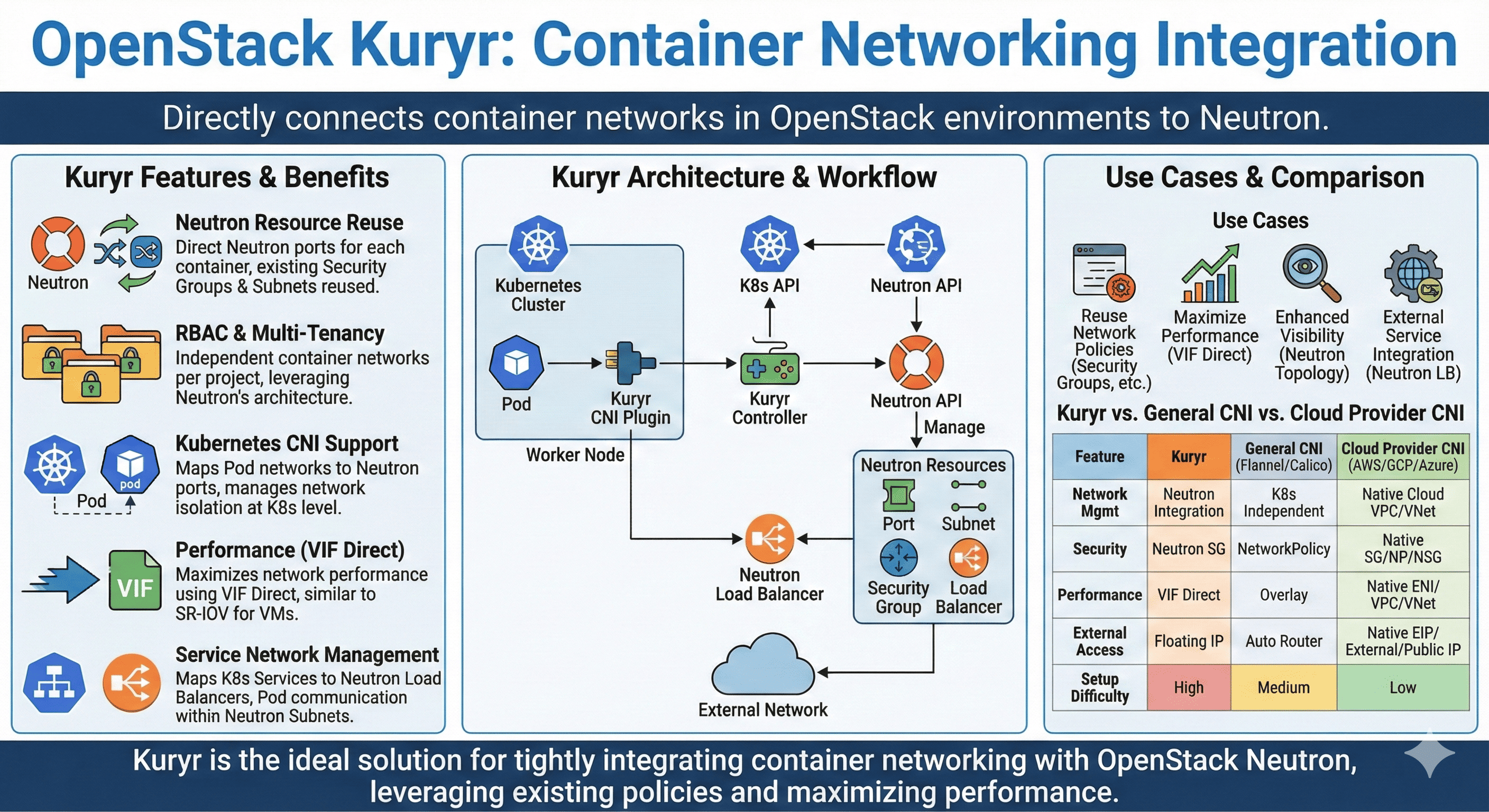

Deep Dive into OpenStack Kuryr

Understanding OpenStack's Container Networking Service

Understanding OpenStack Kuryr

Kuryr is OpenStack’s container networking service that directly connects container networks with Neutron.

It enables container environments such as Kubernetes and Docker to use OpenStack’s native networking, so containers and VMs share consistent network policies and containers no longer need a separate overlay network managed inside the VMs.

What is Kuryr?

The Container Networking Bridge

Kuryr serves as OpenStack’s container networking integration service, providing essential functionality:

- Neutron Integration: Uses Neutron as the native network driver for containers

- Network Policy: Applies OpenStack network policies to container networks

- Multi-tenancy: Supports OpenStack’s RBAC and project isolation

- Performance: Enables VIF Direct for enhanced network performance

By bridging container networks with OpenStack networking, Kuryr provides a unified network management solution for containerized applications.

Kuryr Architecture Overview (Diagram Description)

- Core Features: Neutron Integration, CNI Plugin, Multi-tenancy

- Service Integration: Kubernetes, Docker, Neutron

- Network Management: Port Management, Security Groups, Load Balancing

- Performance: VIF Direct, SR-IOV, Network Optimization

Kuryr Architecture and Components

Kuryr’s architecture consists of several key components that work together to provide container networking capabilities.

Each component plays a specific role in network integration and management.

Core Components

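| Component | Role | Description |
|---|---|---|
| Kuryr Controller | Resource Management | Watches the Kubernetes API and creates, updates, and deletes the matching Neutron resources (ports, subnets, security groups, load balancers) |
| Kuryr CNI | Network Interface | Runs on each node and attaches the Neutron port allocated by the controller to the pod’s network namespace |
| Neutron Integration | Network Service | Supplies the networks, subnets, ports, and security groups that containers use |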
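The division of labor between the controller and the CNI can be sketched as a toy reconciliation loop: the controller makes Neutron ports track the set of live pods, and the CNI only consumes what the controller has already allocated. Everything here is hypothetical: `FakeNeutron`, `reconcile`, and `cni_add` are illustrative names, an in-memory dict stands in for Neutron, and the real Kuryr controller works through Kubernetes watches and the Neutron API rather than these functions.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class FakeNeutron:
    """Hypothetical in-memory stand-in for Neutron's port API."""
    ports: dict = field(default_factory=dict)  # port ID -> owning pod name
    _ids: itertools.count = field(default_factory=itertools.count)

    def create_port(self, pod: str) -> str:
        port_id = f"port-{next(self._ids)}"
        self.ports[port_id] = pod
        return port_id

    def delete_port(self, port_id: str) -> None:
        del self.ports[port_id]


def reconcile(neutron: FakeNeutron, pods: set, vifs: dict) -> None:
    """Controller role (hypothetical): make Neutron ports track live pods.

    `vifs` maps pod name -> port ID and stands in for the record the
    controller leaves for the CNI side to read.
    """
    for pod in sorted(pods - set(vifs)):
        vifs[pod] = neutron.create_port(pod)   # new pod: allocate a port
    for pod in sorted(set(vifs) - pods):
        neutron.delete_port(vifs.pop(pod))     # pod gone: release its port


def cni_add(pod: str, vifs: dict) -> str:
    """CNI role (hypothetical): fetch the port the controller allocated."""
    if pod not in vifs:
        raise LookupError(f"no VIF allocated yet for {pod}; retry later")
    # A real CNI would now move the interface into the pod's network namespace.
    return vifs[pod]
```

Running `reconcile` with pods `{"web-1", "web-2"}` allocates two ports, and running it again with only `{"web-2"}` releases the first one. Keeping allocation in the controller means the per-node CNI step stays small and idempotent.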

Service Integration

Kuryr integrates with several container platforms:

- Kubernetes: Primary integration target with CNI plugin support

- Docker: Network and IPAM plugin for Docker containers (kuryr-libnetwork)

- OpenStack Services: Deep integration with Neutron, plus Keystone for authentication and Octavia for load balancing

This integration enables comprehensive container networking within the OpenStack ecosystem.

Key Features and Capabilities

Kuryr’s features center on reusing Neutron for container networking instead of running a parallel network stack.

The table below summarizes the core features and what each provides in an OpenStack environment.

Core Features

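| Feature | Description | Benefits |
|---|---|---|
| Neutron Integration | Native OpenStack networking | Containers and VMs share one network layer and one set of policies |
| Multi-tenancy | Project-based isolation | Container traffic is isolated along OpenStack project boundaries |
| Performance | VIF Direct support | Higher throughput and lower latency for data-plane-intensive workloads |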

Best Practices

Key considerations for Kuryr deployment:

- Initial Setup: Plan network architecture before deployment

- Performance Tuning: Configure Neutron for high port density

- Security Groups: Design security policies for container workloads

- Monitoring: Implement network monitoring and logging

- Upgrade Planning: Plan for service updates and maintenance

These practices help keep container networking reliable and maintainable.

Implementation and Usage

Deploying Kuryr means configuring it alongside the container platform it serves.

The operations below cover cluster setup, a deployment status check, and port inspection.

Common Operations

| Operation | Description | Command |
|---|---|---|
| Cluster Setup | Create a Magnum cluster template that uses Kuryr networking | openstack coe cluster template create --network-driver kuryr |
| Status Check | Verify Kuryr deployment | kubectl get pod -n kube-system \| grep kuryr |
| Port Management | List container ports | openstack port list --device-owner kuryr |

Use Cases

Kuryr is particularly useful for:

- Network Policy Reuse: Leveraging existing OpenStack network policies

- Performance Optimization: Using VIF Direct for enhanced performance

- Network Visibility: Unified network topology visualization

- Service Integration: Connecting Kubernetes services with Neutron load balancers

These use cases demonstrate Kuryr’s flexibility and integration capabilities.

Advanced Configuration (Production Hardening)

Network Models Matrix

| Scenario | Recommendation | Notes |
|---|---|---|
| High Throughput | VIF Direct or SR‑IOV | Requires NIC/host support; bypasses kernel bridges |
| Multi‑tenant Isolation | Neutron project networks + security groups | Project‑scoped subnets/routers; RBAC on SGs |
| Policy Control | Neutron security groups mapped from K8s NetworkPolicy | Define default‑deny and explicit allows |
| North‑South Access | Octavia LBaaS for Service type=LoadBalancer | Align health checks and timeouts with app SLOs |

Performance Tuning

- Align MTU across Neutron networks, Kuryr CNI, and node interfaces

- Prefer direct VIF types (where safe) for data‑plane intensive workloads

- Tune Neutron quotas and port security to reduce control‑plane contention

- Batch port operations and enable async reconciliation in Kuryr controller
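MTU alignment is mostly arithmetic once the encapsulation overhead is known. The sketch below assumes a VXLAN network over IPv4, where each packet carries 50 extra bytes (outer IPv4 20, UDP 8, VXLAN 8, plus the 14-byte inner Ethernet header that now rides inside the outer IP payload). The function names and the hop labels are hypothetical.

```python
# Bytes added per packet by VXLAN over IPv4: outer IPv4 (20) + UDP (8)
# + VXLAN header (8) + the encapsulated inner Ethernet header (14).
VXLAN_IPV4_OVERHEAD = 20 + 8 + 8 + 14  # = 50


def overlay_mtu(underlay_mtu: int, overhead: int = VXLAN_IPV4_OVERHEAD) -> int:
    """Largest MTU an overlay network can use without fragmenting on the underlay."""
    if underlay_mtu <= overhead:
        raise ValueError("underlay MTU is too small for this encapsulation")
    return underlay_mtu - overhead


def mtu_mismatches(hops: dict) -> dict:
    """Return every hop whose MTU differs from the smallest MTU on the path.

    `hops` maps a hypothetical label (e.g. "neutron-net", "cni", "node-eth0")
    to the MTU configured there; all values should normally be equal.
    """
    floor = min(hops.values())
    return {name: mtu for name, mtu in hops.items() if mtu != floor}
```

With a 1500-byte underlay, `overlay_mtu(1500)` gives 1450, and `mtu_mismatches({"neutron-net": 1450, "cni": 1500, "node-eth0": 1450})` flags the CNI setting as the one to lower.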

High Availability (HA)

| Layer | Recommendation | Notes |
|---|---|---|
| Kuryr Controller | 2+ replicas with leader election | Monitor reconciliation lag |
| Message/DB | HA RabbitMQ / Galera (for Neutron) | Observe queue depth and DB latency |
| LBaaS | Octavia active/standby amphorae | Health checks, AZ spread |
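Leader election across two or more controller replicas usually rests on a renewable lease: the replica holding an unexpired lease does the work, and a standby takes over once the holder stops renewing. Below is a minimal single-process sketch of that idea with an injected clock. `Lease` and `try_acquire` are hypothetical names; a real deployment would keep the lease in a shared store (for example a Kubernetes Lease object) and update it atomically.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Lease:
    """Hypothetical in-memory lease; real replicas would share it via an API."""
    duration: float              # seconds a grant stays valid without renewal
    holder: Optional[str] = None
    expires_at: float = 0.0

    def try_acquire(self, replica: str, now: float) -> bool:
        """Grant or renew the lease for `replica`; return True if it now leads."""
        if self.holder in (None, replica) or now >= self.expires_at:
            # Free, already ours (a renewal), or the previous holder let it lapse.
            self.holder = replica
            self.expires_at = now + self.duration
            return True
        return False
```

With a 15-second lease, `controller-a` acquires at t=0, `controller-b` is refused at t=5, and `controller-b` takes over at t=16 if `controller-a` never renewed. The lease duration bounds how long reconciliation can stall after a leader dies, which is one reason to monitor reconciliation lag.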

Security & Compliance

- Enforce project isolation with Neutron RBAC and per‑namespace mapping

- Default‑deny security posture; generate SG rules from NetworkPolicy

- TLS for all APIs; restrict Kuryr controller credentials by scope

- Audit port/SecurityGroup changes; retain logs per compliance policy
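A default-deny posture maps naturally onto Neutron security groups, which drop any ingress traffic that no rule allows. The sketch below translates only the `ipBlock` peers and numeric `ports` of a Kubernetes NetworkPolicy ingress section into dicts shaped like Neutron security group rule attributes (`direction`, `ethertype`, `protocol`, `port_range_min`, `port_range_max`, `remote_ip_prefix`). It illustrates the mapping and is not Kuryr’s driver: `ingress_to_sg_rules` is a hypothetical name, and pod and namespace selectors, which Kuryr resolves to pod addresses, are skipped.

```python
import ipaddress


def ingress_to_sg_rules(policy: dict) -> list:
    """Translate NetworkPolicy ingress rules into Neutron-style SG rule dicts.

    Only `ipBlock` peers and numeric ports are handled; selectors, `except`
    lists, and named ports are out of scope for this sketch.
    """
    rules = []
    # A missing or empty ingress list yields no allow rules: default deny.
    for rule in policy.get("spec", {}).get("ingress", []):
        peers = rule.get("from") or [None]   # no `from` means any source
        ports = rule.get("ports") or [None]  # no `ports` means any port
        for peer in peers:
            if peer is not None and "ipBlock" not in peer:
                continue  # selector peers are not translated here
            cidr = peer["ipBlock"]["cidr"] if peer else None
            # Without a CIDR this sketch only emits an IPv4 rule.
            is_v6 = cidr is not None and ipaddress.ip_network(cidr).version == 6
            for port in ports:
                sg_rule = {"direction": "ingress",
                           "ethertype": "IPv6" if is_v6 else "IPv4",
                           "remote_ip_prefix": cidr}
                if port is not None:
                    sg_rule["protocol"] = port.get("protocol", "TCP").lower()
                    if "port" in port:
                        sg_rule["port_range_min"] = port["port"]
                        sg_rule["port_range_max"] = port.get("endPort", port["port"])
                rules.append(sg_rule)
    return rules
```

Each rule is the cross product of its peers and ports because a NetworkPolicy rule admits traffic from any listed peer to any listed port.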

Observability & Operations

- Metrics: port create/delete latency, failed bindings, SG rule churn, LB status

- Logs: structured controller logs with resource UIDs

- Runbooks: port leak cleanup, SG drift correction, LB failover procedures
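For the port leak runbook, the core check is a set difference: ports that nothing in the cluster accounts for. The sketch assumes port records shaped like the JSON printed by `openstack port list -f json` (keys `ID`, `Name`, `Status`) and a set of expected port IDs gathered from the cluster by other means. If Kuryr’s port pools are enabled, pre-created pool ports are unreferenced by design and must be included in that expected set. `find_leaked_ports` is a hypothetical helper.

```python
import json


def find_leaked_ports(port_list_json: str, expected_port_ids: set) -> list:
    """Return sorted IDs of ports that are neither expected nor ACTIVE.

    `port_list_json` is assumed to look like `openstack port list -f json`
    output; `expected_port_ids` covers ports backing live pods plus any
    pooled ports (hypothetical input assembled elsewhere).
    """
    leaked = []
    for port in json.loads(port_list_json):
        if port["ID"] in expected_port_ids:
            continue  # backs a live pod or sits in a port pool
        if port.get("Status") == "ACTIVE":
            continue  # still bound somewhere; investigate by hand, don't flag
        leaked.append(port["ID"])
    return sorted(leaked)
```

The check is deliberately conservative: it reports candidates for review rather than deleting anything, since a port can be briefly unreferenced while a pod is starting.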

CI/CD for Manifests

- Validate Kuryr/K8s manifests in CI (lint, policy checks)

- Canary upgrades of Kuryr components; verify NetworkPolicy enforcement

- Maintain compatibility matrix: Kuryr ↔ Neutron ↔ Kubernetes versions
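A CI policy check can be as small as asserting that every namespace ships a default-deny NetworkPolicy. The sketch below works on manifests already parsed into dicts (the YAML loading step is omitted to stay dependency-free). It treats a policy as default-deny for ingress when its `podSelector` is empty, its `policyTypes` include `Ingress` (Kubernetes implies `Ingress` when the field is omitted), and it lists no ingress rules. Both helper names are hypothetical, and the fallback to the `default` namespace is an assumption about how the manifests are applied.

```python
def is_default_deny_ingress(policy: dict) -> bool:
    """True if the NetworkPolicy selects every pod and allows no ingress."""
    spec = policy.get("spec", {})
    types = spec.get("policyTypes", ["Ingress"])  # implied when omitted
    return (spec.get("podSelector") == {}         # empty selector = all pods
            and "Ingress" in types
            and not spec.get("ingress"))          # no allow rules at all


def namespaces_missing_default_deny(manifests: list) -> set:
    """Return namespaces declared in `manifests` that lack a default-deny policy."""
    namespaces, covered = set(), set()
    for obj in manifests:
        meta = obj.get("metadata", {})
        if obj.get("kind") == "Namespace":
            namespaces.add(meta["name"])
        elif obj.get("kind") == "NetworkPolicy" and is_default_deny_ingress(obj):
            # Assumption: a manifest without a namespace lands in "default".
            covered.add(meta.get("namespace", "default"))
    return namespaces - covered
```

A CI job would fail the build whenever the returned set is non-empty.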

Troubleshooting Playbook (Quick Checks)

- Pod Not Networking: Check Kuryr CNI DaemonSet, Neutron port status, SG rules

- MTU Mismatch: Verify end‑to‑end MTU; allow fragmentation or adjust the CNI/Neutron MTU

- LB Not Ready: Inspect Octavia listener, pool members, health monitor

- Policy Not Applied: Confirm NetworkPolicy mapping and SG sync in controller
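When a load balancer is not ready, a quick triage step is to walk Octavia’s status tree and list every component that is not healthy. The sketch assumes a dict shaped like the `loadbalancer` object in Octavia’s load balancer status tree, as reported by `openstack loadbalancer status show`: nested `listeners`, `pools`, and `members`, each carrying `operating_status` and `provisioning_status`. `unhealthy_components` is a hypothetical helper.

```python
def _label(node: dict, *keys: str) -> str:
    """Pick the first present identifier (name, address, id) for display."""
    for key in keys:
        if node.get(key):
            return str(node[key])
    return "?"


def unhealthy_components(lb: dict) -> list:
    """Return (path, operating_status, provisioning_status) for every node
    in the load balancer -> listener -> pool -> member tree that is not
    ONLINE/ACTIVE. NO_MONITOR is reported too: it means the pool has no
    health monitor driving member status.
    """
    problems = []

    def check(path: str, node: dict) -> None:
        op = node.get("operating_status")
        prov = node.get("provisioning_status")
        if op != "ONLINE" or prov != "ACTIVE":
            problems.append((path, op, prov))

    check(f"lb:{_label(lb, 'name', 'id')}", lb)
    for listener in lb.get("listeners", []):
        l_path = f"listener:{_label(listener, 'name', 'id')}"
        check(l_path, listener)
        for pool in listener.get("pools", []):
            p_path = f"{l_path}/pool:{_label(pool, 'name', 'id')}"
            check(p_path, pool)
            for member in pool.get("members", []):
                check(f"{p_path}/member:{_label(member, 'address', 'id')}", member)
    return problems
```

The output points straight at the failing layer: a DEGRADED pool with one ERROR member usually means a backend pod is failing its health check rather than a listener misconfiguration.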

Key Points


Core Functionality

- Container networking integration

- Neutron native networking

- Multi-tenancy support

- Performance optimization

Key Features

- CNI plugin support

- Security group integration

- Load balancer integration

- VIF Direct support

Best Practices

- Network architecture planning

- Performance tuning

- Security configuration

- Monitoring setup
