
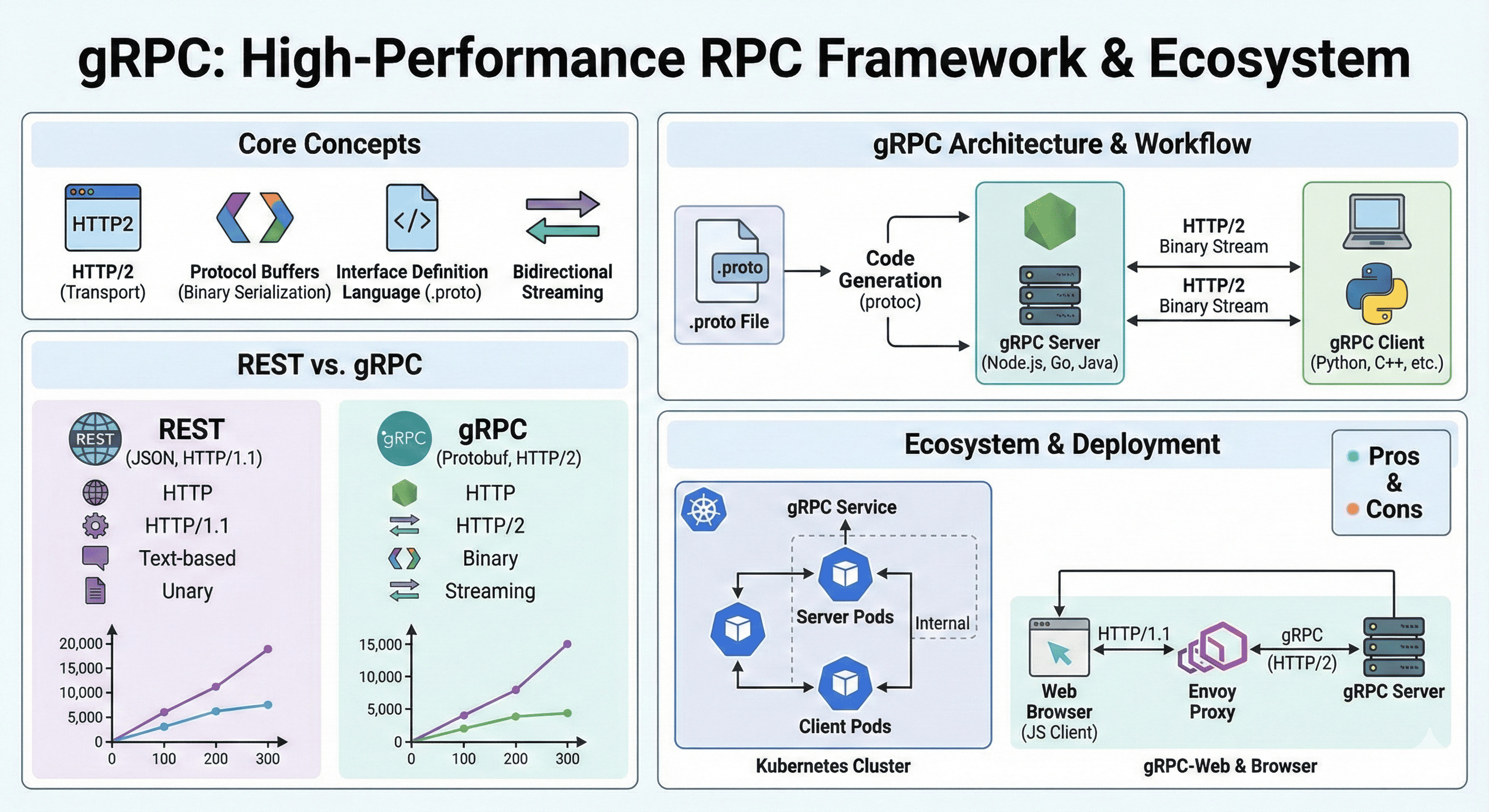

Understanding gRPC - Modern High-Performance RPC Framework

A comprehensive guide to gRPC protocol and its implementation strategies

Understanding gRPC

What is gRPC?

The Evolution of Remote Procedure Calls

gRPC is an open-source RPC framework, originally developed at Google, that improves inter-service communication by providing:

- HTTP/2 Foundation: Multiplexing, flow control, header compression

- Protocol Buffers: Efficient binary serialization format for faster data transmission

- Multi-Language Support: Official libraries for Go, Java, Python, Node.js, C++, C#, and more

- Streaming Capabilities: Client-side, server-side, and bidirectional streaming

- Code Generation: Automatically generates client and server code from service definitions

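With generated stubs, calling a remote method looks much like calling a local function: gRPC handles serialization, connection management, and transport. The network is still there, though, so calls still need deadlines and error handling.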
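On the wire, each gRPC request or response message travels in HTTP/2 DATA frames behind a 5-byte prefix: a 1-byte compressed flag followed by a 4-byte big-endian message length, as described in the gRPC over HTTP/2 protocol specification. The sketch below shows that framing with plain Node.js Buffers. The helper names `frameMessage` and `parseFrames` are illustrative only and are not part of any gRPC library's API; real clients and servers do this internally.

```javascript
// Sketch of gRPC length-prefixed message framing (not a library API):
// 1 byte compressed flag + 4 byte big-endian length + message bytes.
function frameMessage(payload, compressed = false) {
  const header = Buffer.alloc(5);
  header.writeUInt8(compressed ? 1 : 0, 0);
  header.writeUInt32BE(payload.length, 1);
  return Buffer.concat([header, payload]);
}

// Split a buffer into complete messages; leftover bytes belong to a frame
// that has not fully arrived yet.
function parseFrames(buffer) {
  const messages = [];
  let offset = 0;
  while (offset + 5 <= buffer.length) {
    const compressed = buffer.readUInt8(offset) === 1;
    const length = buffer.readUInt32BE(offset + 1);
    const start = offset + 5;
    if (start + length > buffer.length) break; // incomplete frame: wait for more data
    messages.push({ compressed, payload: buffer.subarray(start, start + length) });
    offset = start + length;
  }
  return { messages, rest: buffer.subarray(offset) };
}

// Usage: two serialized messages sent back to back on one HTTP/2 stream
const wire = Buffer.concat([
  frameMessage(Buffer.from([0x08, 0x96, 0x01])),
  frameMessage(Buffer.from('hi'))
]);
const { messages, rest } = parseFrames(wire);
console.log(messages.length, rest.length); // 2 0
```

Because every message carries its own length, many messages (for example on a streaming call) can share one HTTP/2 stream without any delimiter scanning.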

gRPC Framework Overview

- Protocol Features: HTTP/2, Protocol Buffers, Strong Typing

- Communication Types: Unary RPC, Server Streaming, Client Streaming, Bidirectional Streaming

- Language Support: Cross-Platform, Code Generation, Multiple Languages

- Performance Benefits: Binary Serialization, HTTP/2 Multiplexing, Compact Wire Format
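The "Compact Wire Format" point follows from the Protocol Buffers encoding rules: integers are written as base-128 varints, and each field is preceded by a key that combines its field number and wire type, so field names never appear on the wire. The minimal sketch below hand-encodes a varint field to make this visible. The helper names are illustrative, it handles only non-negative integers, and real code uses the generated serializers instead.

```javascript
// Encode a non-negative integer as a protobuf base-128 varint:
// 7 bits per byte, least significant group first, high bit set = "more bytes follow".
function encodeVarint(value) {
  let n = BigInt(value);
  if (n < 0n) throw new RangeError('this sketch handles non-negative values only');
  const bytes = [];
  do {
    let byte = Number(n & 0x7fn);
    n >>= 7n;
    if (n > 0n) byte |= 0x80;
    bytes.push(byte);
  } while (n > 0n);
  return bytes;
}

// A field key is (field_number << 3) | wire_type; wire type 0 means VARINT.
function encodeVarintField(fieldNumber, value) {
  return [...encodeVarint((fieldNumber << 3) | 0), ...encodeVarint(value)];
}

// A message whose field 1 (e.g. `int64 id = 1`) is set to 150
const hex = encodeVarintField(1, 150).map((b) => b.toString(16).padStart(2, '0')).join(' ');
console.log(hex); // 08 96 01
```

The same value as JSON, `{"id":150}`, takes 10 bytes instead of 3.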

gRPC vs REST: Technical Comparison

Understanding the differences between gRPC and REST APIs helps in choosing the right communication protocol for specific use cases. While REST remains excellent for public APIs and web services, gRPC excels in internal microservice communication where performance and type safety are crucial.

Detailed Protocol Comparison

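| Aspect | REST | gRPC |
|---|---|---|
| Transport Protocol | Usually HTTP/1.1 (HTTP/2 possible) | HTTP/2 |
| Data Format | Usually JSON (text) | Protocol Buffers (binary) |
| Performance | Larger text payloads and parsing overhead | Compact binary messages, many calls multiplexed over one connection |
| Browser Support | Native | Requires gRPC-Web and a proxy |
| Code Generation | Optional (e.g. OpenAPI tooling) | Built in, generated from `.proto` files |
| Streaming | Not built in; usually added with server-sent events or WebSockets | Client, server, and bidirectional streaming |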

Communication Patterns
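gRPC supports four communication patterns, which correspond to the four RPCs in the `UserService` definition below: unary, server streaming, client streaming, and bidirectional streaming. To show the shape of each without a network, the sketch below models a stream as a JavaScript async iterable. The function names mirror the proto, but the signatures are illustrative only; the real `@grpc/grpc-js` handlers use callbacks and Node.js streams, as the service implementation later in this article shows.

```javascript
// In-memory sketch of the four RPC shapes; async iterables stand in for streams.
// These are NOT @grpc/grpc-js handler signatures.

// Unary: one request -> one response
async function getUser(request) {
  return { id: request.user_id, name: `user-${request.user_id}` };
}

// Server streaming: one request -> many responses
async function* listUsers(request) {
  for (let id = 1; id <= request.page_size; id++) yield { id };
}

// Client streaming: many requests -> one response
async function createUsers(requests) {
  let total = 0;
  for await (const _request of requests) total += 1;
  return { total_created: total };
}

// Bidirectional streaming: many requests -> many responses, interleaved
async function* chatWithUsers(messages) {
  for await (const message of messages) yield { content: `Echo: ${message.content}` };
}

// Turn an array into an async iterable "stream"
async function* fromArray(items) {
  yield* items;
}

async function demo() {
  const user = await getUser({ user_id: 7 });
  const listed = [];
  for await (const u of listUsers({ page_size: 3 })) listed.push(u.id);
  const created = await createUsers(fromArray([{ name: 'a' }, { name: 'b' }]));
  const replies = [];
  for await (const r of chatWithUsers(fromArray([{ content: 'hi' }]))) replies.push(r.content);
  return { user, listed, created, replies };
}

demo().then((result) => console.log(JSON.stringify(result)));
```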

Protocol Buffers: The Foundation of gRPC

Protocol Buffers (protobuf) serve as gRPC’s interface definition language and data serialization mechanism. Understanding protobuf syntax and concepts is essential for effective gRPC implementation, as it defines both the service interface and the data structures used in communication.

Basic Protocol Buffer Syntax

A typical .proto file contains service definitions and message types:

```protobuf
// proto/user_service.proto
syntax = "proto3";

package user.v1;

import "google/protobuf/timestamp.proto";

service UserService {
  rpc GetUser(GetUserRequest) returns (GetUserResponse);                   // unary
  rpc ListUsers(ListUsersRequest) returns (stream User);                   // server streaming
  rpc CreateUsers(stream CreateUserRequest) returns (CreateUsersResponse); // client streaming
  rpc ChatWithUsers(stream ChatMessage) returns (stream ChatMessage);      // bidirectional streaming
}

message User {
  int64 id = 1;
  string name = 2;
  string email = 3;
  repeated string roles = 4;
  google.protobuf.Timestamp created_at = 5;
  UserStatus status = 6;
}

message GetUserRequest {
  int64 user_id = 1;
}

message GetUserResponse {
  User user = 1;
  bool found = 2;
}

message ListUsersRequest {
  int32 page_size = 1;
  string page_token = 2;
  UserFilter filter = 3;
}

message UserFilter {
  optional string name_contains = 1;
  repeated UserStatus statuses = 2;
}

enum UserStatus {
  USER_STATUS_UNSPECIFIED = 0;
  USER_STATUS_ACTIVE = 1;
  USER_STATUS_INACTIVE = 2;
  USER_STATUS_SUSPENDED = 3;
}

message CreateUserRequest {
  string name = 1;
  string email = 2;
  repeated string roles = 3;
}

message CreateUsersResponse {
  repeated User created_users = 1;
  int32 total_created = 2;
}

message ChatMessage {
  int64 user_id = 1;
  string content = 2;
  google.protobuf.Timestamp timestamp = 3;
}
```

Advanced Protocol Buffer Features

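- Field rules and reserved declarations: `optional` (explicit field presence in proto3), `repeated`, and `reserved` statements that stop deleted field numbers or names from being reused. Validation constraints can be attached as custom options, for example with protoc-gen-validate (which requires `import "validate/validate.proto";`).

  Example: `string email = 1 [(validate.rules).string.email = true];`

- Oneof fields: union types for mutually exclusive fields.

  Example: `oneof auth_method { string password = 1; string api_key = 2; }`

- Well-known types: common types such as `Timestamp` and `Duration`, plus wrapper types (e.g. `google.protobuf.StringValue`) for nullable primitives.

  Example: `google.protobuf.Timestamp created_at = 1;`

- Maps: key-value pair fields.

  Example: `map<string, string> metadata = 1;`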
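In Node.js, these features show up in the decoded objects that `@grpc/proto-loader` produces. With the `oneofs: true` loader option used later in this article, a decoded message gets a virtual property named after the oneof whose value is the name of the member that is set, and map fields decode to plain objects. The sketch below assumes a hypothetical `LoginRequest` containing the `auth_method` oneof from the list above; the function name is illustrative.

```javascript
// Hypothetical message: message LoginRequest {
//   oneof auth_method { string password = 1; string api_key = 2; } }
// With `oneofs: true`, a decoded request looks like
//   { password: 'secret', auth_method: 'password' }
function describeAuth(request) {
  switch (request.auth_method) {
    case 'password':
      return `password of length ${request.password.length}`;
    case 'api_key':
      return `api key ending in ${request.api_key.slice(-4)}`;
    default:
      // Neither member is set: the oneof is empty
      return 'no credentials';
  }
}

console.log(describeAuth({ auth_method: 'api_key', api_key: 'abcd1234' }));
```

Switching on the virtual property avoids guessing which member is set by testing each field for a non-default value.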

Practical Implementation: Node.js gRPC Service

Implementing a production-ready gRPC service involves several considerations beyond basic setup, including error handling, middleware, logging, and proper service architecture. This section demonstrates a comprehensive Node.js implementation with best practices.

Complete Service Implementation

Organize your gRPC project with clear separation of concerns:

```text
grpc-service/
├── proto/
│   ├── user_service.proto
│   └── health.proto
├── src/
│   ├── server.js
│   ├── services/
│   │   ├── user-service.js
│   │   └── health-service.js
│   ├── middleware/
│   │   ├── auth.js
│   │   └── logging.js
│   └── utils/
│       └── db.js
├── client/
│   └── client.js
├── package.json
└── Dockerfile
```

Server Implementation

```javascript
// src/server.js
const grpc = require('@grpc/grpc-js');
const protoLoader = require('@grpc/proto-loader');
const path = require('path');

// Load both service definitions; the Health service lives in health.proto
const PROTO_PATHS = [
  path.join(__dirname, '../proto/user_service.proto'),
  path.join(__dirname, '../proto/health.proto')
];

const packageDefinition = protoLoader.loadSync(PROTO_PATHS, {
  keepCase: true, // keep snake_case field names such as user_id
  longs: String,  // decode int64 fields as strings to avoid precision loss
  enums: String,  // decode enums as their names
  defaults: true, // populate unset fields with default values
  oneofs: true    // add virtual properties naming the set oneof member
});

const protoDescriptor = grpc.loadPackageDefinition(packageDefinition);

// Import service implementations
const UserService = require('./services/user-service');
const HealthService = require('./services/health-service');

const PORT = process.env.PORT || '50051';

class GRPCServer {
  constructor() {
    this.server = new grpc.Server();
    this.setupServices();
  }

  setupServices() {
    // grpc-js has no Express-style middleware chain; the auth and logging
    // helpers in src/middleware/ would wrap these handlers before registration
    this.server.addService(protoDescriptor.user.v1.UserService.service, new UserService());
    this.server.addService(protoDescriptor.grpc.health.v1.Health.service, new HealthService());
  }

  start() {
    return new Promise((resolve, reject) => {
      // Insecure credentials are for local development only; use TLS in production
      this.server.bindAsync(`0.0.0.0:${PORT}`, grpc.ServerCredentials.createInsecure(), (error, port) => {
        if (error) {
          reject(error);
          return;
        }
        // Needed in older @grpc/grpc-js releases; newer ones start serving
        // after bindAsync and may report start() as deprecated
        this.server.start();
        console.log(`gRPC server running on port ${port}`);
        resolve(port);
      });
    });
  }

  shutdown() {
    return new Promise((resolve) => {
      // Stop accepting new calls and wait for in-flight calls to finish
      this.server.tryShutdown((error) => {
        if (error) {
          console.error('Error during server shutdown:', error);
          this.server.forceShutdown();
        }
        resolve();
      });
    });
  }
}

if (require.main === module) {
  const server = new GRPCServer();
  server.start().catch((error) => {
    console.error('Failed to start server:', error);
    process.exit(1);
  });

  // Graceful shutdown on Ctrl+C and on SIGTERM, which Kubernetes sends to stop a pod
  for (const signal of ['SIGINT', 'SIGTERM']) {
    process.on(signal, async () => {
      console.log(`Received ${signal}, shutting down gracefully...`);
      await server.shutdown();
      process.exit(0);
    });
  }
}

module.exports = GRPCServer;
```
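`tryShutdown` waits for every in-flight call to finish, so a long-lived stream (such as the chat stream or a health `Watch` call) can keep it from completing until Kubernetes kills the pod at the end of its termination grace period. A minimal sketch, assuming you want a bounded wait: the helper name `shutdownWithDeadline` and the 10-second default are illustrative, and the helper relies only on the `tryShutdown(callback)` and `forceShutdown()` methods that `grpc.Server` provides.

```javascript
// Hypothetical helper: attempt a graceful shutdown, but force it after timeoutMs.
// Resolves with { forced: true } if calls had to be cancelled.
function shutdownWithDeadline(server, timeoutMs = 10000) {
  return new Promise((resolve) => {
    let done = false;
    const finish = (forced) => {
      if (done) return; // tryShutdown may still call back after a forced shutdown
      done = true;
      clearTimeout(timer);
      resolve({ forced });
    };

    const timer = setTimeout(() => {
      server.forceShutdown(); // cancels calls that are still running
      finish(true);
    }, timeoutMs);

    server.tryShutdown((error) => {
      if (error) {
        server.forceShutdown();
        finish(true);
      } else {
        finish(false);
      }
    });
  });
}

module.exports = { shutdownWithDeadline };
```

`GRPCServer.shutdown()` could simply return `shutdownWithDeadline(this.server, 10000)`. Keep the timeout plus the Deployment's 15-second `preStop` sleep below `terminationGracePeriodSeconds` (30 seconds by default) so the forced path runs before the kubelet sends SIGKILL.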

Service Implementation with Error Handling

```javascript
// src/services/user-service.js
const grpc = require('@grpc/grpc-js');
const db = require('../utils/db');

// Build an Error carrying a gRPC status code; grpc-js sends the message as the status details
function grpcError(code, message) {
  const error = new Error(message);
  error.code = code;
  return error;
}

// Convert a JS Date into google.protobuf.Timestamp's { seconds, nanos } shape
function toTimestamp(date) {
  const ms = date.getTime();
  return {
    seconds: Math.floor(ms / 1000),
    nanos: (((ms % 1000) + 1000) % 1000) * 1e6 // nanos must be non-negative
  };
}

// Map a database row to the User message
function toUserMessage(user) {
  return {
    id: user.id,
    name: user.name,
    email: user.email,
    roles: user.roles,
    created_at: toTimestamp(user.created_at),
    status: user.status
  };
}

class UserService {
  // Unary RPC
  async getUser(call, callback) {
    try {
      // longs: String in the loader options delivers int64 fields as strings
      const userId = Number(call.request.user_id);
      if (!Number.isSafeInteger(userId) || userId <= 0) {
        return callback(grpcError(grpc.status.INVALID_ARGUMENT, 'Invalid user ID'));
      }

      const user = await db.getUserById(userId);
      if (!user) {
        return callback(grpcError(grpc.status.NOT_FOUND, 'User not found'));
      }

      callback(null, { user: toUserMessage(user), found: true });
    } catch (error) {
      console.error('Error in getUser:', error);
      callback(grpcError(grpc.status.INTERNAL, 'Internal server error'));
    }
  }

  // Server streaming RPC
  listUsers(call) {
    // With defaults: true an unset page_size arrives as 0, so apply the default here
    const { page_size, page_token, filter } = call.request;
    const pageSize = page_size > 0 ? page_size : 10;

    let userStream;
    try {
      // db.getUserStream is expected to return a Node.js Readable of user rows
      userStream = db.getUserStream(pageSize, page_token, filter);
    } catch (error) {
      console.error('Error in listUsers:', error);
      call.destroy(grpcError(grpc.status.INTERNAL, 'Internal server error'));
      return;
    }

    userStream.on('data', (user) => call.write(toUserMessage(user)));
    userStream.on('end', () => call.end());
    userStream.on('error', (error) => {
      console.error('Error in listUsers stream:', error);
      call.destroy(grpcError(grpc.status.INTERNAL, 'Stream error'));
    });

    // Stop reading from the database if the client cancels the call
    call.on('cancelled', () => userStream.destroy());
  }

  // Client streaming RPC
  async createUsers(call, callback) {
    const createdUsers = [];
    try {
      // Consume requests one at a time so every insert has finished
      // before the single response is sent
      for await (const { name, email, roles } of call) {
        createdUsers.push(await db.createUser({ name, email, roles }));
      }
    } catch (error) {
      console.error('Error in createUsers:', error);
      return callback(grpcError(grpc.status.INTERNAL, 'Failed to create users'));
    }

    callback(null, {
      created_users: createdUsers.map(toUserMessage),
      total_created: createdUsers.length
    });
  }

  // Bidirectional streaming RPC: echo each message back with a server timestamp
  chatWithUsers(call) {
    call.on('data', (message) => {
      console.log('Received chat message:', message);
      call.write({
        user_id: message.user_id,
        content: `Echo: ${message.content}`,
        timestamp: toTimestamp(new Date())
      });
    });

    // The client half-closed its side of the stream; close ours too
    call.on('end', () => call.end());

    call.on('error', (error) => {
      console.error('Chat stream error:', error);
    });
  }
}

module.exports = UserService;
```
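The handlers above pick status codes inline. As a service grows, it can help to centralize the mapping from application errors to gRPC status codes, so clients see consistent codes: for example `UNAVAILABLE` for conditions worth retrying and `INTERNAL` for anything unexpected, without leaking internal error text. A sketch under stated assumptions: `DomainError` and its `kind` values are hypothetical, and the numeric codes are the standard gRPC status values that `grpc.status` also exposes.

```javascript
// Standard gRPC status codes (same values as grpc.status in @grpc/grpc-js)
const Status = { INVALID_ARGUMENT: 3, NOT_FOUND: 5, ALREADY_EXISTS: 6, INTERNAL: 13, UNAVAILABLE: 14 };

// Hypothetical application error carrying a kind the service layer understands
class DomainError extends Error {
  constructor(kind, message) {
    super(message);
    this.kind = kind;
  }
}

// Translate any thrown error into a { code, details } object that a
// grpc-js unary handler can pass to callback()
function toGrpcStatus(error) {
  if (error instanceof DomainError) {
    switch (error.kind) {
      case 'validation':
        return { code: Status.INVALID_ARGUMENT, details: error.message };
      case 'not_found':
        return { code: Status.NOT_FOUND, details: error.message };
      case 'conflict': // e.g. a duplicate email in createUsers
        return { code: Status.ALREADY_EXISTS, details: error.message };
    }
  }
  if (error && error.code === 'ECONNREFUSED') {
    // The database is unreachable: tell clients the condition may be transient
    return { code: Status.UNAVAILABLE, details: 'Backend temporarily unavailable' };
  }
  // Anything else: do not expose internal error text to clients
  return { code: Status.INTERNAL, details: 'Internal server error' };
}

module.exports = { DomainError, toGrpcStatus };
```

A handler's `catch` block then shrinks to `callback(toGrpcStatus(error))`.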

Client Implementation

```javascript
// client/client.js
const grpc = require('@grpc/grpc-js');
const protoLoader = require('@grpc/proto-loader');
const path = require('path');

const PROTO_PATH = path.join(__dirname, '../proto/user_service.proto');

const packageDefinition = protoLoader.loadSync(PROTO_PATH, {
  keepCase: true,
  longs: String,
  enums: String,
  defaults: true,
  oneofs: true
});

const userProto = grpc.loadPackageDefinition(packageDefinition);

class UserServiceClient {
  constructor(serverAddress = 'localhost:50051') {
    // Insecure credentials match the development server; use TLS in production
    this.client = new userProto.user.v1.UserService(serverAddress, grpc.credentials.createInsecure());
  }

  // Unary RPC wrapped in a Promise
  getUser(userId) {
    return new Promise((resolve, reject) => {
      this.client.getUser({ user_id: userId }, (error, response) => {
        if (error) reject(error);
        else resolve(response);
      });
    });
  }

  // Server streaming: collect every User until the server ends the stream
  listUsers(pageSize = 10, filter = {}) {
    return new Promise((resolve, reject) => {
      const call = this.client.listUsers({ page_size: pageSize, filter });
      const users = [];
      call.on('data', (user) => users.push(user));
      call.on('end', () => resolve(users));
      call.on('error', reject);
    });
  }

  // Client streaming: write every request, then half-close to receive the summary
  createMultipleUsers(userRequests) {
    return new Promise((resolve, reject) => {
      const call = this.client.createUsers((error, response) => {
        if (error) reject(error);
        else resolve(response);
      });
      userRequests.forEach((userRequest) => call.write(userRequest));
      call.end();
    });
  }

  // Bidirectional streaming: send a message every 5 seconds until stop() is called
  startChat() {
    const call = this.client.chatWithUsers();
    call.on('data', (message) => console.log('Received message:', message));
    call.on('end', () => console.log('Chat ended'));
    call.on('error', (error) => console.error('Chat error:', error));

    const timer = setInterval(() => {
      const now = Date.now();
      call.write({
        user_id: 1,
        content: `Hello from client at ${new Date(now).toISOString()}`,
        timestamp: { seconds: Math.floor(now / 1000), nanos: (now % 1000) * 1e6 }
      });
    }, 5000);

    return {
      call,
      // Clear the timer before half-closing so nothing is written after end()
      stop() {
        clearInterval(timer);
        call.end();
      }
    };
  }

  close() {
    this.client.close();
  }
}

// Example usage
async function main() {
  const client = new UserServiceClient();
  try {
    // Unary RPC
    console.log('User:', await client.getUser(1));

    // Server streaming
    console.log('Users:', await client.listUsers(5));

    // Client streaming
    const createResponse = await client.createMultipleUsers([
      { name: 'Alice', email: 'alice@example.com', roles: ['user'] },
      { name: 'Bob', email: 'bob@example.com', roles: ['admin'] }
    ]);
    console.log('Created users:', createResponse);

    // Bidirectional streaming, stopped after 30 seconds
    const chat = client.startChat();
    setTimeout(() => {
      chat.stop();
      client.close();
    }, 30000);
  } catch (error) {
    console.error('Client error:', error);
    client.close();
  }
}

if (require.main === module) {
  main();
}

module.exports = UserServiceClient;
```
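The client above sets no deadline, so a call to an unresponsive server can wait indefinitely. A common refinement is to give every attempt a deadline and to retry only errors that are likely transient, such as `UNAVAILABLE`, with exponential backoff. The sketch below is a hypothetical helper, not a grpc-js API: `callWithRetry`, its option names, and the default values are assumptions. Retry only idempotent calls such as `GetUser`; blindly retrying `CreateUsers` could create duplicate users.

```javascript
const UNAVAILABLE = 14; // gRPC status code, same value as grpc.status.UNAVAILABLE

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: run invoke(deadline) and retry only on UNAVAILABLE,
// giving each attempt a fresh deadline and doubling the delay between attempts.
async function callWithRetry(invoke, { attempts = 3, timeoutMs = 2000, baseDelayMs = 100 } = {}) {
  for (let attempt = 1; ; attempt++) {
    const deadline = new Date(Date.now() + timeoutMs);
    try {
      return await invoke(deadline);
    } catch (error) {
      if (error.code !== UNAVAILABLE || attempt >= attempts) throw error;
      await sleep(baseDelayMs * 2 ** (attempt - 1)); // 100 ms, 200 ms, ...
    }
  }
}

module.exports = { callWithRetry };
```

With the client above, `invoke` would call `this.client.getUser({ user_id: userId }, new grpc.Metadata(), { deadline }, callback)` and wrap the callback in a Promise; grpc-js accepts a `Date` as the `deadline` call option and fails the call with `DEADLINE_EXCEEDED` when it passes.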

Kubernetes Deployment and Service Mesh Integration

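Deploying gRPC services in Kubernetes requires special attention to load balancing, service discovery, and traffic management. A gRPC client multiplexes many calls over one long-lived HTTP/2 connection, and a connection-level (L4) load balancer such as a plain ClusterIP Service picks a backend once per connection, so all calls from that client can land on a single pod. Spreading individual calls across pods requires client-side load balancing or an L7 proxy such as a service mesh sidecar.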
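Without a service mesh, one option is client-side round-robin in `@grpc/grpc-js`: point the client at a headless Service (`clusterIP: None`), so DNS returns one address per pod, and select the `round_robin` load-balancing policy through the `grpc.service_config` channel option. In the sketch below, the helper name and the Service name `user-service-headless` are assumptions for illustration.

```javascript
// Hypothetical helper: channel options that make @grpc/grpc-js spread calls
// across every address the target resolves to instead of using only one.
function roundRobinChannelOptions() {
  return {
    'grpc.service_config': JSON.stringify({
      loadBalancingConfig: [{ round_robin: {} }]
    })
  };
}

// Assumed headless Service (clusterIP: None) so DNS returns one record per pod
const target = 'dns:///user-service-headless.grpc-services.svc.cluster.local:50051';

module.exports = { roundRobinChannelOptions, target };
```

The options object is passed as the third constructor argument, for example `new userProto.user.v1.UserService(target, grpc.credentials.createInsecure(), roundRobinChannelOptions())`. The client learns about new pods only when it re-resolves DNS, so scale-ups may take a while to receive traffic; a service mesh avoids that delay.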

Kubernetes Deployment Configuration

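The manifests below define a Namespace with Istio sidecar injection enabled, a Deployment, a Service, and a HorizontalPodAutoscaler. The Deployment sets readiness and liveness probes, resource requests and limits, and a `preStop` hook that delays shutdown so the pod is removed from Service endpoints before the process stops.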

```yaml
# k8s/namespace.yaml
apiVersion: v1
kind: Namespace
metadata:
  name: grpc-services
  labels:
    istio-injection: enabled
---
# k8s/deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: user-service
  namespace: grpc-services
  labels:
    app: user-service
    version: v1
spec:
  replicas: 3
  selector:
    matchLabels:
      app: user-service
      version: v1
  template:
    metadata:
      labels:
        app: user-service
        version: v1
    spec:
      containers:
        - name: user-service
          image: your-registry/user-service:latest
          ports:
            - name: grpc
              containerPort: 50051
              protocol: TCP
            - name: health
              containerPort: 8080
              protocol: TCP
          env:
            - name: PORT
              value: "50051"
            - name: HEALTH_PORT
              value: "8080"
            - name: DATABASE_URL
              valueFrom:
                secretKeyRef:
                  name: database-secret
                  key: url
          resources:
            requests:
              memory: "128Mi"
              cpu: "100m"
            limits:
              memory: "512Mi"
              cpu: "500m"
          livenessProbe:
            httpGet:
              path: /health
              port: health
            initialDelaySeconds: 10
            periodSeconds: 30
          readinessProbe:
            httpGet:
              path: /ready
              port: health
            initialDelaySeconds: 5
            periodSeconds: 10
          lifecycle:
            preStop:
              exec:
                command: ["/bin/sh", "-c", "sleep 15"]
---
# k8s/service.yaml
apiVersion: v1
kind: Service
metadata:
  name: user-service
  namespace: grpc-services
  labels:
    app: user-service
spec:
  selector:
    app: user-service
  ports:
    - name: grpc
      port: 50051
      targetPort: grpc
      protocol: TCP
    - name: health
      port: 8080
      targetPort: health
      protocol: TCP
  type: ClusterIP
---
# k8s/hpa.yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: user-service-hpa
  namespace: grpc-services
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: user-service
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80
```

Istio Service Mesh Configuration

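The VirtualService below splits traffic 90/10 between the v1 and v2 subsets and sets timeouts and retries. The DestinationRule configures consistent-hash load balancing on a `user-id` header, connection pool limits, and outlier detection. The Gateway exposes the service over TLS on port 443.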

```yaml
# istio/virtualservice.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: user-service
  namespace: grpc-services
spec:
  hosts:
    - user-service
  http:
    - match:
        # gRPC requests use the HTTP/2 path /<package>.<Service>/<Method>
        - uri:
            prefix: /user.v1.UserService/
      route:
        - destination:
            host: user-service
            subset: v1
          weight: 90
        - destination:
            host: user-service
            subset: v2 # requires pods labeled version: v2 (not shown above)
          weight: 10
      timeout: 30s
      retries:
        attempts: 3
        perTryTimeout: 10s
        # gRPC errors return HTTP 200 with a grpc-status trailer, so 5xx alone
        # does not catch them; "unavailable" retries gRPC UNAVAILABLE
        retryOn: 5xx,reset,connect-failure,refused-stream,unavailable
---
# istio/destinationrule.yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: user-service
  namespace: grpc-services
spec:
  host: user-service
  trafficPolicy:
    loadBalancer:
      consistentHash:
        # Clients must send user-id metadata (gRPC metadata travels as HTTP/2 headers)
        httpHeaderName: "user-id"
    connectionPool:
      http:
        http2MaxRequests: 100
        maxRequestsPerConnection: 10
    outlierDetection:
      consecutiveGatewayErrors: 5
      interval: 30s
      baseEjectionTime: 30s
  subsets:
    - name: v1
      labels:
        version: v1
    - name: v2
      labels:
        version: v2
---
# istio/gateway.yaml
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: grpc-gateway
  namespace: grpc-services
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 443
        name: grpc-tls
        protocol: GRPC
      tls:
        mode: SIMPLE
        credentialName: grpc-tls-secret
      hosts:
        - grpc.example.com
```

Health Check Implementation

```javascript
// src/services/health-service.js
const grpc = require('@grpc/grpc-js');

// grpc.health.v1.HealthCheckResponse.ServingStatus values
const ServingStatus = { UNKNOWN: 0, SERVING: 1, NOT_SERVING: 2, SERVICE_UNKNOWN: 3 };

class HealthService {
  constructor() {
    this.status = {
      '': 'SERVING', // overall server health, per the gRPC health checking protocol
      'user.v1.UserService': 'SERVING'
    };
  }

  check(call, callback) {
    const service = call.request.service || '';
    const status = this.status[service];

    if (status === undefined) {
      const error = new Error('Service not found');
      error.code = grpc.status.NOT_FOUND;
      return callback(error);
    }

    callback(null, { status: ServingStatus[status] });
  }

  watch(call) {
    const service = call.request.service || '';
    let lastSent;

    // Send the current status only when it differs from the last message sent.
    // Unknown services get SERVICE_UNKNOWN and the call stays open, as the protocol specifies.
    const sendIfChanged = () => {
      const status = this.status[service] || 'SERVICE_UNKNOWN';
      if (status !== lastSent) {
        lastSent = status;
        call.write({ status: ServingStatus[status] });
      }
    };

    sendIfChanged();

    // Polling keeps the example simple; an event emitter would avoid the delay
    const interval = setInterval(sendIfChanged, 10000);
    call.on('cancelled', () => clearInterval(interval));
  }

  setStatus(service, status) {
    this.status[service] = status;
  }
}

module.exports = HealthService;
```
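The Deployment's `livenessProbe` and `readinessProbe` call HTTP `/health` and `/ready` on port 8080, but the `HealthService` above only answers gRPC. One way to close that gap, sketched here under the assumption that the same Node.js process serves both, is a small `http` server that derives its answers from the `HealthService` status map. The names `probeResponse` and `startProbeServer` are hypothetical. Newer Kubernetes versions also support native `grpc` probes, which can call the gRPC health service directly.

```javascript
const http = require('http');

// /health (liveness): the process is up and answering HTTP.
// /ready (readiness): the overall ('') gRPC status is SERVING.
function probeResponse(pathname, statusMap) {
  if (pathname === '/health') return { statusCode: 200, body: 'ok' };
  if (pathname === '/ready') {
    return statusMap[''] === 'SERVING'
      ? { statusCode: 200, body: 'ready' }
      : { statusCode: 503, body: 'not ready' };
  }
  return { statusCode: 404, body: 'not found' };
}

// Serve the probes on HEALTH_PORT (8080 in the Deployment)
function startProbeServer(healthService, port = Number(process.env.HEALTH_PORT) || 8080) {
  const server = http.createServer((req, res) => {
    const { pathname } = new URL(req.url, 'http://localhost');
    const { statusCode, body } = probeResponse(pathname, healthService.status);
    res.writeHead(statusCode, { 'Content-Type': 'text/plain' });
    res.end(body);
  });
  return server.listen(port);
}

module.exports = { probeResponse, startProbeServer };
```

To use it, create one `HealthService` instance, pass it to both `addService` and `startProbeServer`, and call `healthService.setStatus('', 'NOT_SERVING')` when shutdown begins so readiness fails while in-flight calls drain.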

gRPC-Web: Bridging Browser Compatibility

While gRPC excels in server-to-server communication, browsers cannot call gRPC services directly: browser APIs such as `fetch` and `XMLHttpRequest` do not expose HTTP/2 framing or response trailers, which gRPC uses to carry the call status. gRPC-Web solves this with a JavaScript client library and a proxy (such as Envoy) that translates between browser-compatible HTTP requests and standard gRPC.
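Because the final `grpc-status` and `grpc-message` cannot reach browser code as HTTP/2 trailers, the gRPC-Web protocol moves them into the response body as a last length-prefixed frame whose flag byte has its most significant bit (`0x80`) set; the trailer payload is encoded as HTTP/1-style `name: value` lines. The sketch below decodes a fully buffered binary-mode body with plain Buffers. The function names are illustrative; in practice the grpc-web client library and Envoy's `grpc_web` filter handle this for you.

```javascript
// Decode a complete gRPC-Web (binary mode) response body: same 5-byte framing
// as gRPC, but a flag byte with 0x80 set marks the trailer frame.
function parseGrpcWebBody(body) {
  const messages = [];
  let trailers = null;
  let offset = 0;
  while (offset + 5 <= body.length) {
    const flags = body.readUInt8(offset);
    const length = body.readUInt32BE(offset + 1);
    const payload = body.subarray(offset + 5, offset + 5 + length);
    offset += 5 + length;
    if (flags & 0x80) {
      // Trailers: "name: value\r\n" lines
      trailers = {};
      for (const line of payload.toString('latin1').split('\r\n')) {
        const sep = line.indexOf(':');
        if (sep > 0) trailers[line.slice(0, sep).trim().toLowerCase()] = line.slice(sep + 1).trim();
      }
    } else {
      messages.push(payload);
    }
  }
  return { messages, trailers };
}

// Build a frame for the usage example below
function frame(flags, payload) {
  const header = Buffer.alloc(5);
  header.writeUInt8(flags, 0);
  header.writeUInt32BE(payload.length, 1);
  return Buffer.concat([header, payload]);
}

const body = Buffer.concat([
  frame(0x00, Buffer.from([0x08, 0x01])),                          // one data message
  frame(0x80, Buffer.from('grpc-status:0\r\ngrpc-message:\r\n'))   // trailer frame
]);
const { messages, trailers } = parseGrpcWebBody(body);
console.log(messages.length, trailers['grpc-status']); // 1 0
```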

Envoy Proxy Configuration

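The following Envoy configuration accepts gRPC-Web requests on port 8080, translates them with the `grpc_web` filter, handles CORS, and forwards them over HTTP/2 to the gRPC service on port 50051.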

```yaml
# envoy/envoy.yaml
static_resources:
  listeners:
    - name: grpc_web_listener
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 8080
      filter_chains:
        - filters:
            - name: envoy.filters.network.http_connection_manager
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                codec_type: AUTO
                stat_prefix: grpc_web
                route_config:
                  name: local_route
                  virtual_hosts:
                    - name: grpc_web_service
                      domains: ["*"]
                      routes:
                        - match:
                            prefix: "/"
                            grpc: {}
                          route:
                            cluster: grpc_service
                            timeout: 60s
                        - match:
                            prefix: "/"
                          route:
                            cluster: grpc_service
                            timeout: 60s
                      cors:
                        allow_origin_string_match:
                          - prefix: "*"
                        allow_methods: GET, PUT, DELETE, POST, OPTIONS
                        allow_headers: keep-alive,user-agent,cache-control,content-type,content-transfer-encoding,custom-header-1,x-accept-content-transfer-encoding,x-accept-response-streaming,x-user-agent,x-grpc-web,grpc-timeout
                        max_age: "1728000"
                        expose_headers: custom-header-1,grpc-status,grpc-message
                http_filters:
                  - name: envoy.filters.http.grpc_web
                    typed_config:
                      "@type": type.googleapis.com/envoy.extensions.filters.http.grpc_web.v3.GrpcWeb
                  - name: envoy.filters.http.cors
                    typed_config:
                      "@type": type.googleapis.com/envoy.extensions.filters.http.cors.v3.Cors
                  - name: envoy.filters.http.router
                    typed_config:
                      "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
  clusters:
    - name: grpc_service
      connect_timeout: 0.25s
      type: LOGICAL_DNS
      typed_extension_protocol_options:
        envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
          "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
          explicit_http_config:
            http2_protocol_options: {}
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: grpc_service
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: user-service
                      port_value: 50051
admin:
  address:
    socket_address:
      address: 0.0.0.0
      port_value: 9901
```

JavaScript Client Implementation

The client below wraps the generated `UserServiceClientPb` stub and adds a React hook for fetching user data.

```javascript
// frontend/src/grpc-client.js
import { useState, useEffect } from 'react';
import { UserServiceClient } from './proto/UserServiceClientPb';
import { GetUserRequest, ListUsersRequest, UserFilter } from './proto/user_service_pb';

class UserGRPCClient {
  // serverUrl points at the Envoy gRPC-Web listener, not at the gRPC server itself
  constructor(serverUrl = 'http://localhost:8080') {
    this.client = new UserServiceClient(serverUrl, null, null);
  }

  getUser(userId) {
    return new Promise((resolve, reject) => {
      const request = new GetUserRequest();
      request.setUserId(userId);

      this.client.getUser(request, {}, (err, response) => {
        if (err) {
          console.error('gRPC Error:', err);
          reject(err);
        } else {
          resolve({ user: response.getUser(), found: response.getFound() });
        }
      });
    });
  }

  // gRPC-Web supports server streaming only for clients generated with mode=grpcwebtext
  listUsers(pageSize = 10, filter = {}) {
    const request = new ListUsersRequest();
    request.setPageSize(pageSize);

    if (filter.nameContains) {
      const userFilter = new UserFilter();
      userFilter.setNameContains(filter.nameContains);
      request.setFilter(userFilter);
    }

    const stream = this.client.listUsers(request, {});
    const users = [];

    return new Promise((resolve, reject) => {
      stream.on('data', (response) => {
        users.push({
          id: response.getId(),
          name: response.getName(),
          email: response.getEmail(),
          roles: response.getRolesList(),
          status: response.getStatus()
        });
      });
      stream.on('end', () => resolve(users));
      stream.on('error', (err) => {
        console.error('Stream error:', err);
        reject(err);
      });
    });
  }

  // Returns a { data, error, loading } object instead of throwing
  async getUserWithErrorHandling(userId) {
    try {
      const response = await this.getUser(userId);
      return { data: response, error: null, loading: false };
    } catch (error) {
      return {
        data: null,
        error: { code: error.code, message: error.message, details: error.details },
        loading: false
      };
    }
  }
}

// React hook example
export function useUserData(userId) {
  const [state, setState] = useState({ data: null, loading: true, error: null });

  useEffect(() => {
    // Ignore a response that arrives after unmount or after userId changes
    let cancelled = false;
    const client = new UserGRPCClient();

    async function fetchUser() {
      setState((prev) => ({ ...prev, loading: true }));
      try {
        const response = await client.getUser(userId);
        if (!cancelled) setState({ data: response, loading: false, error: null });
      } catch (error) {
        if (!cancelled) setState({ data: null, loading: false, error });
      }
    }

    if (userId) {
      fetchUser();
    }

    return () => {
      cancelled = true;
    };
  }, [userId]);

  return state;
}

export default UserGRPCClient;
```

Docker Compose Setup for Development

```yaml
# docker-compose.yml
version: '3.8'

services:
  grpc-server:
    build: .
    ports:
      - "50051:50051"
      # Host port 8080 belongs to Envoy, so the health port is published on 8081
      - "8081:8080"
    environment:
      - NODE_ENV=development
      - DATABASE_URL=postgresql://user:pass@postgres:5432/userdb
    depends_on:
      - postgres
    volumes:
      - ./src:/app/src
      - ./proto:/app/proto
    networks:
      default:
        aliases:
          # Envoy's cluster targets the Kubernetes Service name user-service
          - user-service

  envoy:
    image: envoyproxy/envoy:v1.27-latest
    ports:
      - "8080:8080"
      - "9901:9901"
    volumes:
      - ./envoy/envoy.yaml:/etc/envoy/envoy.yaml
    depends_on:
      - grpc-server
    command: ["/usr/local/bin/envoy", "-c", "/etc/envoy/envoy.yaml", "-l", "debug"]

  postgres:
    image: postgres:15
    environment:
      POSTGRES_DB: userdb
      POSTGRES_USER: user
      POSTGRES_PASSWORD: pass
    ports:
      - "5432:5432"
    volumes:
      - postgres_data:/var/lib/postgresql/data

volumes:
  postgres_data:
```

Production Considerations and Best Practices

Successfully deploying gRPC services in production requires attention to monitoring, security, performance optimization, and operational concerns. This section covers essential practices for building reliable, scalable gRPC services.

Monitoring and Observability

| Aspect | Implementation | Tools & Metrics |
|---|---|---|
| Request Tracing | | |
| Metrics Collection | | |
| Logging | | |
| Health Monitoring | | |

Security Best Practices

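- TLS encryption: always use TLS in production. With mutual TLS, the client also presents its own key and certificate:

  ```javascript
  const fs = require('fs');
  const grpc = require('@grpc/grpc-js');

  const credentials = grpc.credentials.createSsl(
    fs.readFileSync('ca-cert.pem'),    // CA certificate used to verify the server
    fs.readFileSync('client-key.pem'), // client private key (mTLS)
    fs.readFileSync('client-cert.pem') // client certificate chain (mTLS)
  );
  ```

- Authentication: JWT or mTLS. A JWT is sent as call metadata, which grpc-js accepts as the argument after the request (for example `client.getUser(request, metadata, callback)`):

  ```javascript
  const metadata = new grpc.Metadata();
  metadata.add('authorization', `Bearer ${jwtToken}`);
  ```

- Input validation

- Rate limiting

- Network policies

- Secrets management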
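On the server, the JWT sent as `authorization` metadata has to be checked before a handler runs. Since `@grpc/grpc-js` has no Express-style middleware, one approach is to wrap each handler. The sketch below is hypothetical code for the `src/middleware/auth.js` file in the project layout: `withAuth` is an illustrative name, `verifyToken` stands in for whatever JWT library you use (assumed to return the claims or throw), and attaching claims as `call.user` is a convention of this sketch, not a grpc-js feature. It relies on `call.metadata.get(key)`, which returns an array of values.

```javascript
const UNAUTHENTICATED = 16; // gRPC status code, same value as grpc.status.UNAUTHENTICATED

// Wrap a unary handler so it only runs when the call carries a valid
// "authorization: Bearer <token>" metadata entry.
function withAuth(handler, verifyToken) {
  return function authenticatedHandler(call, callback) {
    const [header] = call.metadata.get('authorization');
    const match = typeof header === 'string' && header.match(/^Bearer (.+)$/);
    if (!match) {
      return callback({ code: UNAUTHENTICATED, details: 'Missing bearer token' });
    }
    try {
      call.user = verifyToken(match[1]); // claims available to the wrapped handler
    } catch {
      return callback({ code: UNAUTHENTICATED, details: 'Invalid token' });
    }
    return handler.call(this, call, callback);
  };
}

module.exports = { withAuth };
```

Because `addService` looks handlers up by name, the wrapped versions go in the implementation object, for example `{ getUser: withAuth(service.getUser.bind(service), verifyToken), ... }`. Streaming handlers need a similar wrapper that reports the error through the call object instead of a callback.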

Performance Optimization Strategies

- Connection pooling and reuse

- Keepalive settings

- Message size limits

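```javascript
// Connection pooling and reuse: one client (and channel) per service, shared across calls
const grpc = require('@grpc/grpc-js');

class OptimizedGRPCClient {
  // serviceClients maps a service name to a generated client constructor,
  // e.g. { UserService: userProto.user.v1.UserService }
  constructor(serverAddress, serviceClients) {
    this.address = serverAddress;
    this.serviceClients = serviceClients;
    this.connectionPool = new Map();
    // No arguments: verify the server against the default root certificates
    this.credentials = grpc.credentials.createSsl();
  }

  getClient(serviceName) {
    if (!this.connectionPool.has(serviceName)) {
      const ClientConstructor = this.serviceClients[serviceName];
      if (!ClientConstructor) {
        throw new Error(`Unknown service: ${serviceName}`);
      }

      const client = new ClientConstructor(this.address, this.credentials, {
        // Ping every 30 s and wait up to 5 s for the ack; the server must allow
        // this ping rate, or it may close the connection for too many pings
        'grpc.keepalive_time_ms': 30000,
        'grpc.keepalive_timeout_ms': 5000,
        'grpc.keepalive_permit_without_calls': 1,
        // HTTP/2 ping tuning from gRPC C-core; @grpc/grpc-js may ignore options it does not implement
        'grpc.http2.max_pings_without_data': 0,
        'grpc.http2.min_ping_interval_without_data_ms': 300000,
        'grpc.http2.min_time_between_pings_ms': 10000,
        // Cap message sizes at 4 MiB in both directions
        'grpc.max_receive_message_length': 4 * 1024 * 1024,
        'grpc.max_send_message_length': 4 * 1024 * 1024
      });

      this.connectionPool.set(serviceName, client);
    }

    return this.connectionPool.get(serviceName);
  }

  close() {
    for (const client of this.connectionPool.values()) {
      client.close();
    }
    this.connectionPool.clear();
  }
}
```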

When to Choose gRPC vs REST

The decision between gRPC and REST should be based on specific requirements, team capabilities, and system architecture. Both technologies have their strengths and are often used together in modern distributed systems.

Decision Matrix

| Use Case | gRPC Preferred | REST Preferred |
|---|---|---|
| Internal Microservices | | |
| Public APIs | | |
| Data Processing | | |

Hybrid Architecture Example

- Web Frontend → REST API → API Gateway

- Mobile App → gRPC-Web → API Gateway

- API Gateway → gRPC → User/Product/Notification/Inventory/Auth Services

- Legacy System via REST

- Analytics Service via gRPC Streaming

Key Points

- Core Benefits
  - High performance through binary serialization and HTTP/2
  - Strong typing and automatic code generation with Protocol Buffers
  - Native streaming support for real-time applications
  - Excellent for internal microservice communication

- Implementation Strategy
  - Use for internal service-to-service communication
  - Combine with REST for public APIs and browser clients
  - Implement proper error handling and monitoring
  - Consider gRPC-Web for browser compatibility when needed

- Production Readiness
  - Deploy with a service mesh for advanced traffic management
  - Implement comprehensive monitoring and tracing
  - Use TLS encryption and proper authentication
  - Design for scalability with connection pooling and health checks
