
Understanding Kubernetes Storage - PV, PVC, StorageClass, and CSI-Driver

A comprehensive guide to Kubernetes storage components and their interactions

Overview

Kubernetes storage is a critical aspect of deploying stateful applications. This comprehensive guide explores the key components of the Kubernetes storage ecosystem, including Persistent Volumes (PV), Persistent Volume Claims (PVC), StorageClass, and Container Storage Interface (CSI) drivers.

In containerized environments, pods are ephemeral by design - they can be created, destroyed, and rescheduled across the cluster. However, many applications require persistent data that survives pod restarts or rescheduling. Kubernetes storage solves this challenge by providing mechanisms to:

- Persist data beyond pod lifecycles

- Share data between pods

- Manage storage resources at scale

- Abstract underlying storage implementations

- Support stateful workloads in a cloud-native environment

Storage Architecture Overview

Kubernetes Storage Components

Persistent Volumes (PV)

A Persistent Volume is a cluster-level storage resource provisioned by administrators or dynamically via StorageClass.

- Cluster-wide resource not limited to any namespace

- Independent lifecycle from pods

- Abstracts underlying storage details

- Can be provisioned statically (manual) or dynamically (via StorageClass)

- Contains details necessary to mount a particular storage volume

Access Modes

PVs support different access modes that determine how they can be mounted by nodes:

| Access Mode | Abbreviation | Description | Use Case |

|---|---|---|---|

| ReadWriteOnce | RWO | Volume can be mounted as read-write by a single node | Databases, single-instance applications |

| ReadOnlyMany | ROX | Volume can be mounted as read-only by many nodes | Configuration files, static content |

| ReadWriteMany | RWX | Volume can be mounted as read-write by many nodes | Shared filesystems, collaborative applications |

| ReadWriteOncePod | RWOP | Volume can be mounted as read-write by a single pod (CSI volumes only; alpha in K8s v1.22, stable since v1.29) | Critical applications requiring exclusive access |

Not all volume types support all access modes. For example, cloud provider block storage such as AWS EBS is generally limited to ReadWriteOnce (GCE PD can additionally be attached read-only to many nodes), while file-based storage like NFS can support ReadWriteMany.
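As an illustration of how an access mode is requested, the following sketch shows a claim that asks for ReadWriteOncePod. The names `exclusive-pvc` and `csi-standard` are placeholders rather than objects defined elsewhere in this guide, and the StorageClass is assumed to be backed by a CSI driver, since this mode is only available for CSI volumes.

```yaml
# Sketch: a claim that at most one pod in the cluster may use at a time.
# "exclusive-pvc" and "csi-standard" are hypothetical names.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: exclusive-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOncePod        # stricter than ReadWriteOnce, which is per node
  resources:
    requests:
      storage: 5Gi
  storageClassName: csi-standard   # assumed to use a CSI provisioner
```

If a second pod references the same claim, it should stay unschedulable until the first pod releases the volume.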

PV Example

apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-pv
spec:
  capacity:
    storage: 10Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: standard
  mountOptions:
    - hard
    - nfsvers=4.1
  nfs:
    server: nfs-server.example.com
    path: /exported/path

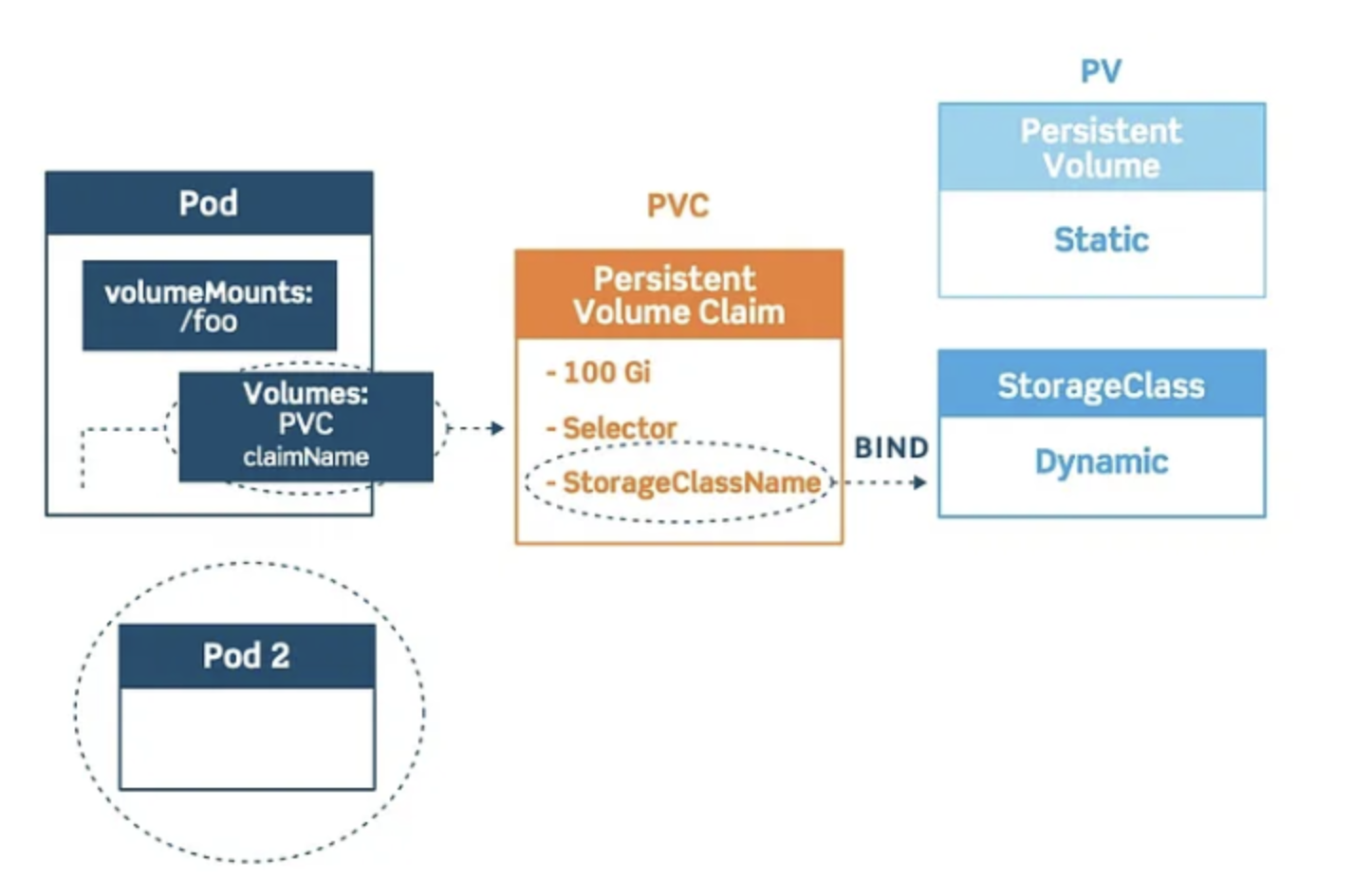

Persistent Volume Claims (PVC)

A Persistent Volume Claim is a request for storage by a user. It’s similar to a pod in that it consumes PV resources just as pods consume node resources.

- Namespaced resource that represents a request for storage

- Specifies size, access mode, and storage class requirements

- Automatically binds to suitable PVs based on requirements

- Can trigger dynamic provisioning if no matching PV exists

- Referenced by name in pod specifications

PVC Example

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: standard
  volumeMode: Filesystem

Pod Using PVC

apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  containers:
    - name: app
      image: nginx
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: storage
      ports:
        - containerPort: 80
  volumes:
    - name: storage
      persistentVolumeClaim:
        claimName: example-pvc

Storage Lifecycle Management

PV Lifecycle Phases

1. Provisioning:

- Static: Administrator creates PVs in advance

- Dynamic: PVs created automatically via StorageClass

2. Binding:

- PVC created by user

- Control plane finds matching PV

- PV bound to PVC

3. Using:

- Pod references PVC

- Kubelet mounts volume

- Container uses mounted volume

4. Releasing:

- Pod deleted

- PVC deleted

- PV moves to the Released phase (it still holds the previous claim's data and is not yet available to a new claim)

5. Reclaiming:

- Based on persistentVolumeReclaimPolicy
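The binding step can also be made explicit. The sketch below pre-binds a statically provisioned PV to one specific claim, so the control plane does not pick a different match. The names `reserved-pv` and `reserved-pvc` are hypothetical, and the NFS server and path are reused from the PV example purely for illustration.

```yaml
# Sketch: reserve a statically provisioned PV for one specific PVC.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: reserved-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  storageClassName: ""          # empty string: no class, no dynamic provisioning
  claimRef:                     # only this claim may bind to the volume
    namespace: default
    name: reserved-pvc
  nfs:
    server: nfs-server.example.com
    path: /exported/path
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: reserved-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 10Gi
  storageClassName: ""          # must be set explicitly, or the default class applies
  volumeName: reserved-pv       # bind to this PV by name
```

Setting both `claimRef` and `volumeName` ties the pair together in both directions; setting only one of them leaves the other side open to normal matching.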

Reclaim Policies

- Retain: Preserves volume and data after PVC deletion. Requires manual reclamation by administrators

- Delete: Automatically removes underlying storage resource when PVC is deleted (common for cloud environments)

- Recycle: Legacy policy that performs basic scrub (rm -rf) before making volume available again (deprecated in favor of dynamic provisioning)

The default reclaim policy depends on how the PV was created:

- Manually created PVs: Default to Retain

- Dynamically provisioned PVs: Default to Delete unless otherwise specified in the StorageClass
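To keep dynamically provisioned volumes after their claims are deleted, the policy can be set on the StorageClass. This is a minimal sketch: `retained-storage` is a made-up class name, and the provisioner is the fictitious `example.csi.company.com` driver used in the CSI example of this guide.

```yaml
# Sketch: dynamically provisioned PVs from this class default to Retain.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: retained-storage
provisioner: example.csi.company.com   # fictitious driver, replace with a real one
reclaimPolicy: Retain                  # overrides the Delete default for dynamic PVs
```

PVs created from this class move to Released when their claim is deleted, and an administrator has to clean them up or rebind them manually.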

StorageClass

StorageClass provides a way to describe “classes” of storage offered in a Kubernetes cluster. It serves as an abstraction layer between users and the underlying storage infrastructure.

- Defines how storage will be dynamically provisioned

- Specifies the provisioner to use (CSI driver or in-tree plugin)

- Sets parameters for the underlying storage type

- Defines volume reclaim policy

- Controls volume expansion capabilities

- Manages mount options

StorageClass Parameters

| Parameter | Description |

|---|---|

| provisioner | Determines which volume plugin is used for provisioning |

| parameters | Configuration parameters for the provisioner (varies by provisioner) |

| reclaimPolicy | What happens to PVs when their claim is deleted (Delete/Retain) |

| allowVolumeExpansion | Whether volumes can be expanded after creation |

| volumeBindingMode | When volume binding and dynamic provisioning should occur |

| mountOptions | Additional mount options for when the volume is mounted |
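As a sketch of how `allowVolumeExpansion` is used in practice: once the StorageClass permits expansion, a bound claim is grown by raising its storage request. The example assumes the `example-pvc` claim from earlier in this guide and a class and driver that support resizing.

```yaml
# Sketch: expand an existing claim by increasing spec.resources.requests.storage.
# Only works if the claim's StorageClass has allowVolumeExpansion: true.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 20Gi        # previously 10Gi; shrinking is not supported
  storageClassName: standard
  volumeMode: Filesystem
```

Depending on the driver, the filesystem resize may only complete once a pod using the claim is running or restarted.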

Volume Binding Modes

- Immediate: Volume binding and dynamic provisioning occur as soon as the PVC is created (default)

- WaitForFirstConsumer: Delayed until a pod using the PVC is created (useful for topology-aware scheduling)
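The following sketch combines delayed binding with a topology restriction. The class name, the zone values, and the fictitious `example.csi.company.com` provisioner are assumptions for illustration; the topology key a real driver honors may differ from the well-known zone label shown here.

```yaml
# Sketch: provision only after a pod is scheduled, and only in the listed zones.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: zonal-storage
provisioner: example.csi.company.com
volumeBindingMode: WaitForFirstConsumer
allowedTopologies:
  - matchLabelExpressions:
      - key: topology.kubernetes.io/zone   # some drivers use their own key
        values:
          - us-east-1a
          - us-east-1b
```

With Immediate binding, a zonal volume could be created in a zone where the pod cannot run; delaying provisioning lets the scheduler's placement decide where the volume is created.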

StorageClass Examples

AWS EBS StorageClass

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard
# In-tree provisioner; deprecated in favor of the CSI driver (ebs.csi.aws.com)
provisioner: kubernetes.io/aws-ebs
parameters:
  type: gp2
  fsType: ext4
  encrypted: "true"
reclaimPolicy: Delete
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
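For clusters that run the AWS EBS CSI driver rather than the in-tree plugin, an equivalent class might look like the sketch below. It assumes the driver is already installed; the class name `standard-csi` and the choice of `gp3` are illustrative.

```yaml
# Sketch: CSI-based counterpart of the EBS StorageClass.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: standard-csi
provisioner: ebs.csi.aws.com
parameters:
  type: gp3
  csi.storage.k8s.io/fstype: ext4   # CSI-style key for the filesystem type
  encrypted: "true"
reclaimPolicy: Delete
allowVolumeExpansion: true
volumeBindingMode: WaitForFirstConsumer
```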

GCE PD StorageClass

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: premium
# In-tree provisioner; deprecated in favor of the CSI driver (pd.csi.storage.gke.io)
provisioner: kubernetes.io/gce-pd
parameters:
  type: pd-ssd
  replication-type: none
reclaimPolicy: Delete
allowVolumeExpansion: true

Container Storage Interface (CSI)

The Container Storage Interface (CSI) is a standard for exposing arbitrary block and file storage systems to containerized workloads in Kubernetes and other container orchestration systems.

- Standardized API between container orchestrators and storage providers

- Enables third-party storage providers to develop plugins once for multiple platforms

- Storage drivers can be developed out-of-tree and deployed via standard Kubernetes primitives

- Supports advanced storage features like snapshots, cloning, and volume expansion

- Allows for storage driver updates without Kubernetes core changes
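The snapshot feature mentioned above can be sketched with three objects: a snapshot class, a snapshot of an existing claim, and a new claim restored from it. This assumes the snapshot CRDs and snapshot controller are installed and that the driver supports snapshots. The object names are hypothetical, and `example.csi.company.com` is the fictitious driver from this guide.

```yaml
# Sketch: snapshot a PVC and restore it into a new PVC.
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshotClass
metadata:
  name: example-snapclass
driver: example.csi.company.com
deletionPolicy: Delete
---
apiVersion: snapshot.storage.k8s.io/v1
kind: VolumeSnapshot
metadata:
  name: example-snapshot
  namespace: default
spec:
  volumeSnapshotClassName: example-snapclass
  source:
    persistentVolumeClaimName: example-pvc   # claim to snapshot
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: restored-pvc
  namespace: default
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: standard    # assumed to use the same CSI driver as the snapshot
  resources:
    requests:
      storage: 10Gi             # must be at least the snapshot's size
  dataSource:
    apiGroup: snapshot.storage.k8s.io
    kind: VolumeSnapshot
    name: example-snapshot
```

Cloning works the same way, except that `dataSource` points at an existing PersistentVolumeClaim instead of a VolumeSnapshot.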

CSI Architecture

[Diagram: Kubernetes, acting as the container orchestrator, sends CSI API calls to the Node Plugin (runs on every node) and to the Controller Plugin (ControllerServer, a cluster-wide component). The Controller Plugin performs volume operations and the Node Plugin performs mount operations against the storage system.]

CSI Implementation Components

1. CSI Controller Plugin:

- Handles volume creation/deletion

- Manages attachments between volumes and nodes

- Implements volume snapshots and clone operations

- Typically deployed as a StatefulSet or Deployment

2. CSI Node Plugin:

- Runs on every node (DaemonSet)

- Handles volume mount/unmount operations

- Formats and mounts volumes to paths

- Exposes node capabilities

3. CSI Node Driver Registrar (sidecar):

- Registers the CSI driver with the kubelet on each node

- Publishes the driver's socket so the kubelet can query its capabilities

CSI Volume Types

- Block Storage: Raw block devices (AWS EBS, GCE PD)

  - Best for performance-critical applications like databases

  - Usually limited to ReadWriteOnce access mode

  - Examples: AWS EBS CSI Driver, GCE PD CSI Driver, Azure Disk CSI Driver

- File Storage: Network-based filesystems (NFS, SMB)

  - Supports ReadWriteMany access for multi-pod access

  - Good for shared data between multiple pods

  - Examples: NFS CSI Driver, Azure File CSI Driver, EFS CSI Driver

- Object Storage: S3-compatible storage

  - Best for unstructured data and large datasets

  - Often mounted via specialized gateways

  - Examples: MinIO CSI Driver, Ceph Object Gateway CSI Driver
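Block-backed volumes can also be handed to a container as a raw device instead of a mounted filesystem. The sketch below assumes a StorageClass named `standard` whose driver supports block mode. The claim and pod names, the `busybox` image, and the device path are illustrative.

```yaml
# Sketch: request a raw block device and expose it inside the container.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: raw-block-pvc
spec:
  accessModes:
    - ReadWriteOnce
  volumeMode: Block            # no filesystem is created on the volume
  resources:
    requests:
      storage: 10Gi
  storageClassName: standard
---
apiVersion: v1
kind: Pod
metadata:
  name: raw-block-pod
spec:
  containers:
    - name: app
      image: busybox
      command: ["sleep", "3600"]
      volumeDevices:           # used instead of volumeMounts for block volumes
        - name: data
          devicePath: /dev/xvda
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: raw-block-pvc
```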

CSI Driver Example

A basic CSI driver deployment usually consists of several Kubernetes resources:

# Example CSI Driver configuration for a fictitious storage provider
---
apiVersion: storage.k8s.io/v1
kind: CSIDriver
metadata:
  name: example.csi.company.com
spec:
  attachRequired: true
  podInfoOnMount: true
  volumeLifecycleModes:
    - Persistent
    - Ephemeral
  fsGroupPolicy: File

---
# Controller service
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-csi-controller
spec:
  replicas: 1
  selector:
    matchLabels:
      app: example-csi-controller
  template:
    metadata:
      labels:
        app: example-csi-controller
    spec:
      serviceAccountName: example-csi-controller-sa
      containers:
        # Sidecar that watches PVCs and calls the driver to create/delete volumes
        - name: csi-provisioner
          # k8s.gcr.io is frozen; the same images are served from registry.k8s.io
          image: registry.k8s.io/sig-storage/csi-provisioner:v2.2.2
          args:
            - "--csi-address=$(ADDRESS)"
            - "--v=5"
          env:
            - name: ADDRESS
              value: /var/lib/csi/sockets/pluginproxy/csi.sock
          volumeMounts:
            - name: socket-dir
              mountPath: /var/lib/csi/sockets/pluginproxy/
        # The driver itself, serving the CSI controller API on the shared socket
        - name: example-csi-plugin
          image: example/csi-driver:v1.0.0
          args:
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--nodeid=$(KUBE_NODE_NAME)"
          env:
            - name: CSI_ENDPOINT
              value: unix:///var/lib/csi/sockets/pluginproxy/csi.sock
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: socket-dir
              mountPath: /var/lib/csi/sockets/pluginproxy/
      volumes:
        # Shared between the sidecar and the driver for the Unix socket
        - name: socket-dir
          emptyDir: {}

---
# Node service
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: example-csi-node
spec:
  selector:
    matchLabels:
      app: example-csi-node
  template:
    metadata:
      labels:
        app: example-csi-node
    spec:
      containers:
        # Sidecar that registers the driver's socket with the kubelet
        - name: csi-node-driver-registrar
          # k8s.gcr.io is frozen; the same images are served from registry.k8s.io
          image: registry.k8s.io/sig-storage/csi-node-driver-registrar:v2.5.0
          args:
            - "--csi-address=$(ADDRESS)"
            - "--kubelet-registration-path=$(DRIVER_REG_SOCK_PATH)"
            - "--v=5"
          env:
            - name: ADDRESS
              value: /csi/csi.sock
            - name: DRIVER_REG_SOCK_PATH
              value: /var/lib/kubelet/plugins/example.csi.company.com/csi.sock
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: registration-dir
              mountPath: /registration
        # The driver itself; privileged so it can format and mount devices
        - name: example-csi-plugin
          image: example/csi-driver:v1.0.0
          securityContext:
            privileged: true
          args:
            - "--endpoint=$(CSI_ENDPOINT)"
            - "--nodeid=$(KUBE_NODE_NAME)"
          env:
            - name: CSI_ENDPOINT
              value: unix:///csi/csi.sock
            - name: KUBE_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          volumeMounts:
            - name: plugin-dir
              mountPath: /csi
            - name: pods-mount-dir
              mountPath: /var/lib/kubelet/pods
              # Mounts made by the driver must propagate back to the host
              mountPropagation: "Bidirectional"
            - name: device-dir
              mountPath: /dev
      volumes:
        - name: registration-dir
          hostPath:
            path: /var/lib/kubelet/plugins_registry/
            type: Directory
        - name: plugin-dir
          hostPath:
            path: /var/lib/kubelet/plugins/example.csi.company.com/
            type: DirectoryOrCreate
        - name: pods-mount-dir
          hostPath:
            path: /var/lib/kubelet/pods
            type: Directory
        - name: device-dir
          hostPath:
            path: /dev
            type: Directory

Other Volume Types

In addition to PVs, Kubernetes supports several other volume types that are declared directly in the pod spec. Most of them are ephemeral and share the pod's lifecycle:

| Volume Type | Description | Use Cases |

|---|---|---|

| emptyDir | Temporary directory that shares pod lifecycle | Scratch space, checkpointing, shared cache between containers |

| hostPath | Mounts file or directory from host node | Access node logs, kubelet configuration (use with caution, security risks) |

| configMap | Mounts a ConfigMap as a volume | Configuration files and other non-sensitive settings |

| secret | Mounts a Secret as a volume | Sensitive data like passwords, tokens, keys |

| projected | Maps several volume sources into same directory | Combining secrets, configmaps, and downward API |

| downwardAPI | Exposes pod information as files | Access pod metadata and labels from container |

| ephemeral CSI | Ephemeral volumes using CSI drivers | Temporary storage with driver-specific features |
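A short sketch of two of these volume types used together: an `emptyDir` for scratch space and a `projected` volume that merges a ConfigMap, a Secret, and pod labels into one directory. The pod name, the `busybox` image, and the `app-config` and `app-secret` objects are hypothetical and would need to exist in the namespace.

```yaml
# Sketch: emptyDir scratch space plus a projected volume in one pod.
apiVersion: v1
kind: Pod
metadata:
  name: ephemeral-volumes-demo
  labels:
    app: demo
spec:
  containers:
    - name: app
      image: busybox
      command: ["sleep", "3600"]
      volumeMounts:
        - name: scratch
          mountPath: /scratch
        - name: app-info
          mountPath: /etc/app-info
          readOnly: true
  volumes:
    - name: scratch
      emptyDir:
        sizeLimit: 1Gi          # pod is evicted if usage exceeds this limit
    - name: app-info
      projected:
        sources:
          - configMap:
              name: app-config
          - secret:
              name: app-secret
          - downwardAPI:
              items:
                - path: labels
                  fieldRef:
                    fieldPath: metadata.labels
```

Everything under `/scratch` is lost when the pod is removed from the node, which is the main difference from the PVC-backed volumes described earlier.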

Best Practices

Storage Management

1. Use StorageClasses for dynamic provisioning

- Automates PV creation

- Ensures consistent settings

- Simplifies operations

2. Set appropriate reclaim policies

- Use Retain for important data

- Use Delete for temporary or replaceable data

3. Implement data protection strategies

- Use volume snapshots for backups

- Consider using operators for database backups

4. Plan for capacity

- Monitor storage usage

- Size PVC storage requests deliberately and cap per-namespace consumption with ResourceQuota

- Configure volume expansion when needed
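Capacity planning can be enforced per namespace with a ResourceQuota. The sketch below is illustrative: the quota name and the numbers are arbitrary, and the last entry assumes a StorageClass named `standard` as used in this guide.

```yaml
# Sketch: limit how much storage a namespace may claim.
apiVersion: v1
kind: ResourceQuota
metadata:
  name: storage-quota
  namespace: default
spec:
  hard:
    persistentvolumeclaims: "10"      # max number of PVCs in the namespace
    requests.storage: 100Gi           # total requested across all PVCs
    # total requested from one specific StorageClass
    standard.storageclass.storage.k8s.io/requests.storage: 50Gi
```

New claims that would push the namespace over any of these limits are rejected at creation time.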

Performance Considerations

1. Match storage type to workload

- Use SSDs for high-performance workloads

- Use network storage for shared access requirements

- Consider local storage for latency-sensitive applications

2. Optimize access patterns

- Use ReadWriteOnce for databases

- Use ReadWriteMany only when necessary

- Consider topology constraints for better performance

3. Monitor I/O performance

- Use Prometheus metrics

- Set up alerts for I/O latency

- Implement monitoring for volume usage
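As one possible starting point for volume usage monitoring, the following Prometheus rule file alerts when a claim has less than 10% free space. It assumes Prometheus scrapes the kubelet metrics endpoints, which expose the `kubelet_volume_stats_*` series. The group name, alert name, threshold, and duration are arbitrary choices.

```yaml
# Sketch: Prometheus alerting rule for nearly full persistent volumes.
groups:
  - name: pvc-usage
    rules:
      - alert: PersistentVolumeAlmostFull
        expr: |
          kubelet_volume_stats_available_bytes
            / kubelet_volume_stats_capacity_bytes < 0.10
        for: 10m
        labels:
          severity: warning
        annotations:
          summary: "PVC {{ $labels.persistentvolumeclaim }} in {{ $labels.namespace }} has less than 10% free space"
```

I/O latency metrics depend on the storage backend and node exporter setup, so alerts for them are left to the specific environment.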

Troubleshooting

Common Issues

PVC stuck in Pending state

- Check if suitable PV exists

- Verify StorageClass exists and is configured correctly

- Check CSI driver logs for provisioning errors

- Validate access modes compatibility

Volume mounting failures

- Check kubelet logs on node

- Verify CSI node plugin is running

- Confirm node has access to storage backend

- Check filesystem permissions

Data loss or corruption

- Verify reclaim policies

- Check for unintended PVC deletions

- Review storage system logs

- Investigate storage provider issues

Useful Commands

# List PVs and their status

kubectl get pv

# List PVCs and their status

kubectl get pvc --all-namespaces

# Describe PV for details

kubectl describe pv <pv-name>

# Check PVC events

kubectl describe pvc <pvc-name> -n <namespace>

# View StorageClasses

kubectl get storageclass

# Check CSI driver pods

kubectl get pods -n kube-system | grep csi

# Check node plugin logs

kubectl logs -n kube-system <csi-node-pod-name> -c <container-name>

# View volume attachments

kubectl get volumeattachments

Conclusion

Kubernetes storage provides a flexible and powerful system for managing persistent data in containerized applications. Understanding the relationships between PVs, PVCs, StorageClasses, and CSI drivers is essential for effectively deploying stateful workloads in Kubernetes.

By leveraging these components properly, you can design robust storage solutions that balance performance, reliability, and scalability for your applications.

- Persistent Volumes provide an abstraction layer for underlying storage resources

- Persistent Volume Claims are namespace-specific requests for storage

- StorageClasses enable dynamic provisioning and define storage characteristics

- CSI Drivers provide a standardized interface for different storage backends

- Understanding volume lifecycle and reclaim policies is crucial for data management

- Matching storage types to workload requirements optimizes application performance
