
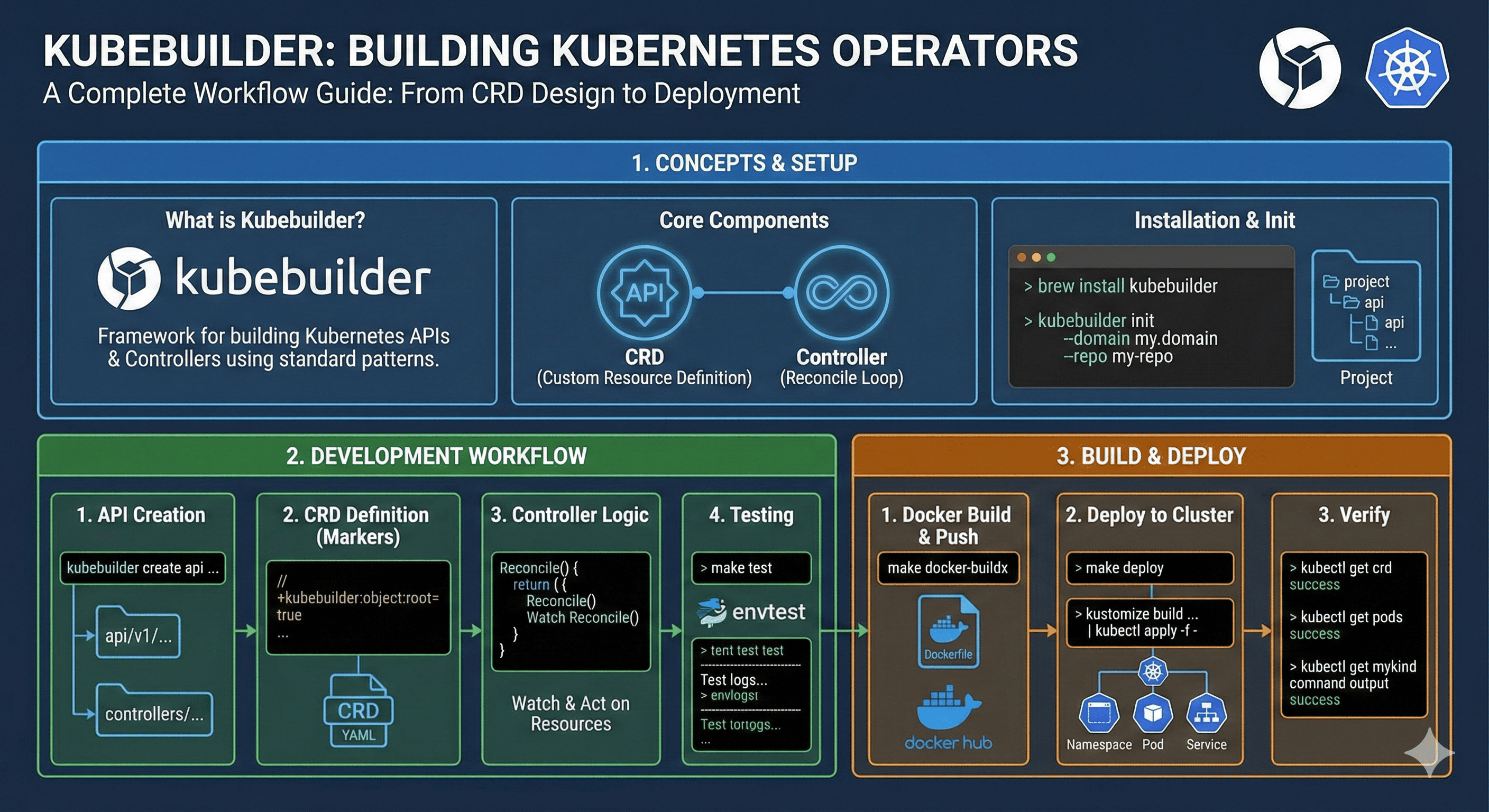

Creating Kubernetes Operators with Kubebuilder

A step-by-step guide to building custom controllers for Kubernetes

Overview

Kubernetes Operators extend the platform’s functionality by adding domain-specific logic to manage complex applications. This guide demonstrates how to build Kubernetes Operators using Kubebuilder, a powerful framework that simplifies the development of custom controllers and APIs.

A Kubernetes Operator is a method of packaging, deploying, and managing applications using custom resources and controllers. Operators follow Kubernetes principles to automate operational tasks that would otherwise require manual intervention.

Understanding Kubebuilder

What is Kubebuilder?

Kubebuilder is an SDK for building Kubernetes APIs and controllers using Go. It provides scaffolding, code generation, and other tools to simplify operator development.

- Code Generation: Automatically generates boilerplate code for CRDs and controllers

- Standards-Based: Built on the controller-runtime library and follows Kubernetes API conventions

- Scaffolding: Provides project structure including configuration files, Dockerfile, and Makefile

- Testing: Integrates with envtest for testing against a local Kubernetes API server

- Extensibility: Easily customizable to fit specific requirements

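Kubebuilder Development Workflow

A typical workflow is to initialize the project, create an API, define the custom resource types, implement the controller, and then build, test, and deploy the operator.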

Getting Started with Kubebuilder

Prerequisites

Before starting, ensure you have the following tools installed:

- Go (version 1.19+)

- Docker

- kubectl

- kustomize

- Kubernetes cluster for testing

Installation

# macOS

brew install kubebuilder

# Linux

curl -L -o kubebuilder https://go.kubebuilder.io/dl/latest/$(go env GOOS)/$(go env GOARCH)

chmod +x kubebuilder

sudo mv kubebuilder /usr/local/bin/

Project Initialization

Create a new project with Kubebuilder:

# Create a directory for your project

mkdir -p ~/projects/namespace-sync

cd ~/projects/namespace-sync

# Initialize the project

kubebuilder init --domain nsync.dev --repo github.com/somaz94/k8s-namespace-sync

# Create an API

kubebuilder create api --group sync --version v1 --kind NamespaceSync

When prompted, answer y to both questions:

- Create Resource [y/n]: y

- Create Controller [y/n]: y

This generates a skeleton project structure with:

- API definitions

- Controller scaffolding

- Configuration files

- Testing infrastructure

Understanding the Project Structure

- api/: CRD API definitions

- controllers/: Reconciliation logic

- config/: Kubernetes resource manifests

- Makefile: Build and deployment commands

- cmd/main.go: Operator entry point

- Dockerfile: Container image definition

- go.mod: Go module dependencies

- hack/: Scripts and tools

- PROJECT: Kubebuilder project metadata

The exact layout depends on the Kubebuilder version that generated the project.

Here’s a more detailed view of the generated project structure:

.

├── api # API definitions

│ └── v1

│ ├── groupversion_info.go

│ ├── namespacesync_types.go # CRD definition

│ └── zz_generated.deepcopy.go

├── bin # Binaries

├── cmd # Command-line interfaces

│ └── main.go # Main entry point

├── config # Kubernetes configuration

│ ├── crd # Custom Resource Definitions

│ ├── default # Default configurations

│ ├── manager # Controller manager

│ ├── rbac # Role-based access control

│ └── samples # Sample custom resources

├── controllers # Controller logic

│ ├── namespacesync_controller.go # Main controller logic

│ └── suite_test.go # Test setup

├── Dockerfile # Container build instructions

├── go.mod # Go module definition

├── go.sum # Go dependencies checksum

├── hack # Scripts and tools

│ └── boilerplate.go.txt

├── Makefile # Build, test, and deploy commands

└── PROJECT # Project metadata

Defining Custom Resources

The core of your operator is the Custom Resource Definition (CRD). This defines the API that users will interact with.

Understanding the API Definition

Let’s examine the API definition in api/v1/namespacesync_types.go:

// NamespaceSyncSpec defines the desired state of NamespaceSync

type NamespaceSyncSpec struct {

// SourceNamespace is the namespace to sync resources from

// +kubebuilder:validation:Required

SourceNamespace string `json:"sourceNamespace"`

// TargetNamespaces is a list of namespaces to sync resources to

// +optional

TargetNamespaces []string `json:"targetNamespaces,omitempty"`

// NamespaceSelector selects namespaces to sync resources to

// +optional

NamespaceSelector *metav1.LabelSelector `json:"namespaceSelector,omitempty"`

// Resources is a list of resources to sync

// +kubebuilder:validation:Required

// +kubebuilder:validation:MinItems=1

Resources []ResourceSelector `json:"resources"`

}

// ResourceSelector defines which resources to sync

type ResourceSelector struct {

// Kind of the resource (e.g. ConfigMap, Secret)

// +kubebuilder:validation:Required

// +kubebuilder:validation:Enum=ConfigMap;Secret

Kind string `json:"kind"`

// Name of the resource

// +kubebuilder:validation:Required

Name string `json:"name"`

}

// NamespaceSyncStatus defines the observed state of NamespaceSync

type NamespaceSyncStatus struct {

// Conditions represent the latest available observations of the NamespaceSync

// +optional

Conditions []metav1.Condition `json:"conditions,omitempty"`

}
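To make the effect of the validation markers more concrete, here is a minimal sketch that mirrors the same rules in plain Go. It is only an illustration: in a cluster, the API server enforces these rules from the generated OpenAPI schema, and the validateSpec function and the trimmed-down types below are hypothetical stand-ins that do not come from Kubebuilder.

```go
package main

import (
	"errors"
	"fmt"
)

// Trimmed-down stand-ins for the API types above (no Kubernetes imports).
type ResourceSelector struct {
	Kind string
	Name string
}

type NamespaceSyncSpec struct {
	SourceNamespace  string
	TargetNamespaces []string
	Resources        []ResourceSelector
}

// validateSpec is a hypothetical helper that approximates the marker rules:
// Required fields, MinItems=1 for resources, and the Enum on kind.
// Note: it treats an empty string as missing, which is stricter than the
// schema's "required", which only checks that the key is present.
func validateSpec(spec NamespaceSyncSpec) error {
	if spec.SourceNamespace == "" {
		return errors.New("spec.sourceNamespace is required")
	}
	if len(spec.Resources) < 1 {
		return errors.New("spec.resources must contain at least 1 item")
	}
	for i, res := range spec.Resources {
		if res.Kind != "ConfigMap" && res.Kind != "Secret" {
			return fmt.Errorf("spec.resources[%d].kind: unsupported value %q", i, res.Kind)
		}
		if res.Name == "" {
			return fmt.Errorf("spec.resources[%d].name is required", i)
		}
	}
	return nil
}

func main() {
	bad := NamespaceSyncSpec{
		SourceNamespace: "source-namespace",
		Resources:       []ResourceSelector{{Kind: "Deployment", Name: "web"}},
	}
	// The Enum rule rejects any kind other than ConfigMap or Secret.
	fmt.Println(validateSpec(bad))
}
```

Because the real checks live in the CRD schema, invalid objects are rejected at admission time and never reach the controller.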

Adding Kubebuilder Markers

Kubebuilder uses special comment markers to generate additional code and metadata:

// +kubebuilder:object:root=true

// +kubebuilder:subresource:status

// +kubebuilder:printcolumn:name="Source",type="string",JSONPath=".spec.sourceNamespace"

// +kubebuilder:printcolumn:name="Age",type="date",JSONPath=".metadata.creationTimestamp"

// +kubebuilder:printcolumn:name="Status",type="string",JSONPath=".status.conditions[?(@.type=='Ready')].status"

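// +kubebuilder:printcolumn:name="Message",type="string",JSONPath=".status.conditions[?(@.type=='Ready')].message"

// NamespaceSync is the Schema for the namespacesyncs API

type NamespaceSync struct {

metav1.TypeMeta `json:",inline"`

metav1.ObjectMeta `json:"metadata,omitempty"`

Spec NamespaceSyncSpec `json:"spec,omitempty"`

Status NamespaceSyncStatus `json:"status,omitempty"`

}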

These markers:

- Define the resource as a root object

- Add a status subresource

- Configure columns for kubectl get output

Validation rules for individual fields come from the +kubebuilder:validation markers shown earlier on the spec fields.

| Marker Type | Purpose |

|---|---|

| object | Defines object characteristics (e.g., root=true for top-level resources) |

| subresource | Adds subresources like status or scale |

| printcolumn | Configures columns in kubectl output |

| validation | Adds schema validation (e.g., required fields, enums) |

| rbac | Defines permissions needed for the controller |

Implementing the Controller

The controller contains the reconciliation logic for your custom resource.

Understanding the Reconciliation Loop

The controller watches for changes to your custom resource and other related resources, then triggers the reconciliation loop to ensure the actual state matches the desired state.
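The idea of driving actual state toward desired state can be sketched without any Kubernetes dependencies. The example below is a simplified model, not controller-runtime code: the reconcile function and the two maps are hypothetical stand-ins for a controller and the cluster state.

```go
package main

import "fmt"

// reconcile drives actual toward desired and reports how many changes it made.
// It is level-triggered: it only compares the current states and does not care
// which event caused it to run.
func reconcile(desired, actual map[string]string) int {
	changes := 0
	// Create or update entries that are missing or stale.
	for key, want := range desired {
		if got, ok := actual[key]; !ok || got != want {
			actual[key] = want
			changes++
		}
	}
	// Remove entries that are no longer desired.
	for key := range actual {
		if _, ok := desired[key]; !ok {
			delete(actual, key)
			changes++
		}
	}
	return changes
}

func main() {
	desired := map[string]string{"shared-config": "v2", "shared-credentials": "v1"}
	actual := map[string]string{"shared-config": "v1", "stale": "v0"}

	fmt.Println(reconcile(desired, actual)) // first pass applies the differences
	fmt.Println(reconcile(desired, actual)) // second pass finds nothing left to do
}
```

Running the same reconcile twice is harmless, which is the property a real Reconcile function should also have.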

Controller Implementation

Let’s look at a simplified version of a controller from our NamespaceSync example:

// NamespaceSyncReconciler reconciles a NamespaceSync object

type NamespaceSyncReconciler struct {

client.Client

Scheme *runtime.Scheme

Recorder record.EventRecorder

}

// +kubebuilder:rbac:groups=sync.nsync.dev,resources=namespacesyncs,verbs=get;list;watch;create;update;patch;delete

// +kubebuilder:rbac:groups=sync.nsync.dev,resources=namespacesyncs/status,verbs=get;update;patch

// +kubebuilder:rbac:groups=sync.nsync.dev,resources=namespacesyncs/finalizers,verbs=update

// +kubebuilder:rbac:groups="",resources=namespaces,verbs=get;list;watch

// +kubebuilder:rbac:groups="",resources=configmaps;secrets,verbs=get;list;watch;create;update;patch;delete

func (r *NamespaceSyncReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {

log := log.FromContext(ctx)

// Fetch the NamespaceSync instance

var namespacesync syncv1.NamespaceSync

if err := r.Get(ctx, req.NamespacedName, &namespacesync); err != nil {

// Handle not-found error

return ctrl.Result{}, client.IgnoreNotFound(err)

}

// Handle deletion first: the API server rejects new finalizers on an object that is already being deleted

if !namespacesync.ObjectMeta.DeletionTimestamp.IsZero() {

return r.handleDeletion(ctx, &namespacesync)

}

// Add finalizer if needed

if !controllerutil.ContainsFinalizer(&namespacesync, finalizerName) {

controllerutil.AddFinalizer(&namespacesync, finalizerName)

if err := r.Update(ctx, &namespacesync); err != nil {

log.Error(err, "Failed to add finalizer")

return ctrl.Result{}, err

}

}

// Get target namespaces

targetNamespaces, err := r.getTargetNamespaces(ctx, &namespacesync)

if err != nil {

return ctrl.Result{}, err

}

// Synchronize resources to target namespaces

if err := r.syncResources(ctx, &namespacesync, targetNamespaces); err != nil {

// Update status to reflect error

r.updateStatusCondition(&namespacesync, metav1.ConditionFalse, "SyncFailed", err.Error())

return ctrl.Result{}, err

}

// Update status to reflect success

r.updateStatusCondition(&namespacesync, metav1.ConditionTrue, "Synced", "Resources synchronized successfully")

return ctrl.Result{RequeueAfter: 5 * time.Minute}, nil

}

Key Controller Components

- RBAC Markers: Define the permissions needed by the controller

- Reconcile Function: Main logic that handles the resource

- Finalizers: Ensure cleanup when resources are deleted

- Status Updates: Report the current state back to the user
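The updateStatusCondition helper used in the controller is not shown in this guide. As a rough model of what such a helper usually does, the sketch below sets a condition by type using only the standard library. The Condition type, the setCondition function, and the epoch timestamp are hypothetical stand-ins; in a real controller you would more likely use metav1.Condition together with meta.SetStatusCondition from k8s.io/apimachinery, which follows similar semantics.

```go
package main

import (
	"fmt"
	"time"
)

// Condition is a simplified stand-in for metav1.Condition.
type Condition struct {
	Type               string
	Status             string // "True", "False", or "Unknown"
	Reason             string
	Message            string
	LastTransitionTime time.Time
}

// epoch is an arbitrary fixed timestamp used to keep the example deterministic.
var epoch = time.Date(2024, 1, 1, 0, 0, 0, 0, time.UTC)

// setCondition replaces the condition with the same Type or appends a new one.
// LastTransitionTime only moves when Status actually changes, so it records
// when the state flipped rather than when the last reconcile ran.
func setCondition(conds []Condition, next Condition, now time.Time) []Condition {
	for i := range conds {
		if conds[i].Type != next.Type {
			continue
		}
		if conds[i].Status != next.Status {
			conds[i].Status = next.Status
			conds[i].LastTransitionTime = now
		}
		conds[i].Reason = next.Reason
		conds[i].Message = next.Message
		return conds
	}
	next.LastTransitionTime = now
	return append(conds, next)
}

func main() {
	conds := setCondition(nil, Condition{
		Type: "Ready", Status: "False", Reason: "SyncFailed", Message: "source not found",
	}, epoch)
	conds = setCondition(conds, Condition{
		Type: "Ready", Status: "True", Reason: "Synced", Message: "Resources synchronized successfully",
	}, epoch.Add(time.Minute))

	// There is still a single Ready condition, now reporting success.
	fmt.Println(len(conds), conds[0].Status, conds[0].Reason)
}
```

Setting the condition in memory is only half of the job. With the status subresource enabled, the change still has to be written back, typically with r.Status().Update(ctx, &namespacesync), and the returned error should be checked.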

Building and Testing Your Operator

Key Makefile Commands

Kubebuilder creates a comprehensive Makefile with all necessary commands:

| Command | Description |
|---|---|
| make generate | Generate code (DeepCopy methods, etc.) for custom resources |
| make manifests | Generate CRD manifests and RBAC configuration |
| make test | Run tests against a local control plane (kube-apiserver and etcd) started by envtest |
| make run | Run the controller locally against your configured Kubernetes cluster |
| make docker-build | Build the Docker image |
| make docker-push | Push the Docker image to a registry |
| make deploy | Deploy the controller to the Kubernetes cluster |

Running Tests

Kubebuilder integrates with the controller-runtime envtest package, which starts a local kube-apiserver and etcd so that tests run against a real API server without a full cluster:

// controllers/suite_test.go

func TestAPIs(t *testing.T) {

RegisterFailHandler(Fail)

RunSpecs(t, "Controller Suite")

}

var _ = BeforeSuite(func() {

logf.SetLogger(zap.New(zap.WriteTo(GinkgoWriter), zap.UseDevMode(true)))

By("bootstrapping test environment")

testEnv = &envtest.Environment{

CRDDirectoryPaths: []string{filepath.Join("..", "config", "crd", "bases")},

ErrorIfCRDPathMissing: true,

}

cfg, err := testEnv.Start()

Expect(err).NotTo(HaveOccurred())

Expect(cfg).NotTo(BeNil())

err = syncv1.AddToScheme(scheme.Scheme)

Expect(err).NotTo(HaveOccurred())

k8sClient, err = client.New(cfg, client.Options{Scheme: scheme.Scheme})

Expect(err).NotTo(HaveOccurred())

Expect(k8sClient).NotTo(BeNil())

})

To run tests:

make test

Building for Multiple Platforms

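For cross-platform support, add a multi-platform build rule to your Makefile, for example one that calls docker buildx build with a --platform list such as linux/amd64,linux/arm64. Recent Kubebuilder scaffolds typically already include a docker-buildx target for this purpose.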

Real-World Example: Namespace Sync Operator

Let’s explore a real-world example of a Kubernetes Operator built with Kubebuilder: a Namespace Synchronization Operator that copies resources from one namespace to others.

Use Case

- Synchronize ConfigMaps and Secrets from a source namespace to multiple target namespaces

- Target namespaces can be explicitly listed or selected via labels

- Resources stay in sync automatically when the source changes

Custom Resource Example

apiVersion: sync.nsync.dev/v1
kind: NamespaceSync
metadata:
  name: config-sync
spec:
  sourceNamespace: source-namespace
  targetNamespaces:
    - target-namespace-1
    - target-namespace-2
  resources:
    - kind: ConfigMap
      name: shared-config
    - kind: Secret
      name: shared-credentials

Or using a namespace selector:

apiVersion: sync.nsync.dev/v1
kind: NamespaceSync
metadata:
  name: config-sync-all
spec:
  sourceNamespace: source-namespace
  namespaceSelector:
    matchLabels:
      sync-enabled: "true"
  resources:
    - kind: ConfigMap
      name: shared-config

Building the Feature Step by Step

1. Resource Synchronization Logic:

func (r *NamespaceSyncReconciler) syncResources(ctx context.Context, namespacesync *syncv1.NamespaceSync, targetNamespaces []string) error {

log := log.FromContext(ctx)

sourceNamespace := namespacesync.Spec.SourceNamespace

for _, res := range namespacesync.Spec.Resources {

// Get source resource

sourceObj, err := r.getSourceResource(ctx, sourceNamespace, res)

if err != nil {

return err

}

// Apply to target namespaces

for _, targetNs := range targetNamespaces {

if targetNs == sourceNamespace {

// Skip source namespace

continue

}

if err := r.applyToTarget(ctx, sourceObj, targetNs); err != nil {

log.Error(err, "Failed to apply resource to target",

"kind", res.Kind, "name", res.Name, "target", targetNs)

continue

}

log.Info("Synchronized resource",

"kind", res.Kind, "name", res.Name,

"from", sourceNamespace, "to", targetNs)

}

}

return nil

}
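Note that syncResources logs a failed applyToTarget call and moves on, then returns nil, so Reconcile would report Synced even when some targets failed. One possible alternative is to keep going but collect the failures and return them together. The sketch below shows that pattern under a few assumptions: applyFunc is a hypothetical stand-in for r.applyToTarget, errNotFound is an invented sample error, and errors.Join needs Go 1.20 or newer, which is above the 1.19 minimum listed in the prerequisites.

```go
package main

import (
	"errors"
	"fmt"
)

// applyFunc is a hypothetical stand-in for r.applyToTarget.
type applyFunc func(targetNs string) error

// errNotFound is a sample error used only in this example.
var errNotFound = errors.New("namespace not found")

// syncToTargets tries every target, keeps going after a failure, and returns
// all failures joined into one error (nil when every target succeeded).
func syncToTargets(sourceNs string, targets []string, apply applyFunc) error {
	var errs []error
	for _, ns := range targets {
		if ns == sourceNs {
			continue // never copy a resource onto its own namespace
		}
		if err := apply(ns); err != nil {
			errs = append(errs, fmt.Errorf("target %s: %w", ns, err))
		}
	}
	return errors.Join(errs...) // requires Go 1.20 or newer
}

func main() {
	apply := func(ns string) error {
		if ns == "target-namespace-2" {
			return errNotFound
		}
		return nil
	}
	err := syncToTargets("source-namespace",
		[]string{"source-namespace", "target-namespace-1", "target-namespace-2"}, apply)
	fmt.Println(err)
}
```

Returning the joined error lets Reconcile set the SyncFailed condition and requeue, while healthy targets are still updated in the same pass.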

2. Target Namespace Resolution:

func (r *NamespaceSyncReconciler) getTargetNamespaces(ctx context.Context, namespacesync *syncv1.NamespaceSync) ([]string, error) {

// If explicit targets provided, use those

if len(namespacesync.Spec.TargetNamespaces) > 0 {

return namespacesync.Spec.TargetNamespaces, nil

}

// If selector provided, query matching namespaces

if namespacesync.Spec.NamespaceSelector != nil {

selector, err := metav1.LabelSelectorAsSelector(namespacesync.Spec.NamespaceSelector)

if err != nil {

return nil, err

}

var namespaceList corev1.NamespaceList

if err := r.List(ctx, &namespaceList, &client.ListOptions{

LabelSelector: selector,

}); err != nil {

return nil, err

}

result := make([]string, 0, len(namespaceList.Items))

for _, ns := range namespaceList.Items {

result = append(result, ns.Name)

}

return result, nil

}

// No targets specified

return nil, fmt.Errorf("no target namespaces specified")

}
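To see what the selector branch does conceptually, here is a small stdlib-only model of matchLabels. It is a sketch rather than the apimachinery implementation: the namespace type and both functions are hypothetical, and matchExpressions are not covered.

```go
package main

import "fmt"

// namespace is a simplified stand-in for corev1.Namespace.
type namespace struct {
	Name   string
	Labels map[string]string
}

// matchesLabels reports whether labels contains every key/value pair in
// selector. An empty selector matches everything, which mirrors how an empty
// (non-nil) label selector behaves in Kubernetes.
func matchesLabels(selector, labels map[string]string) bool {
	for key, want := range selector {
		if got, ok := labels[key]; !ok || got != want {
			return false
		}
	}
	return true
}

// selectNamespaces returns the names whose labels satisfy the selector,
// in the order they were given.
func selectNamespaces(selector map[string]string, all []namespace) []string {
	result := make([]string, 0, len(all))
	for _, ns := range all {
		if matchesLabels(selector, ns.Labels) {
			result = append(result, ns.Name)
		}
	}
	return result
}

func main() {
	all := []namespace{
		{Name: "team-a", Labels: map[string]string{"sync-enabled": "true"}},
		{Name: "team-b", Labels: map[string]string{"sync-enabled": "false"}},
		{Name: "team-c", Labels: nil},
	}
	// Only team-a carries the label with the expected value.
	fmt.Println(selectNamespaces(map[string]string{"sync-enabled": "true"}, all))
}
```

In the real controller this filtering happens on the API server or in the client cache, so only matching namespaces are returned by r.List.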

Testing the Operator

To test the operator locally:

# Install the CRDs into the cluster first

make install

# Run the controller against your configured Kubernetes cluster

make run

# In another terminal, apply a sample CR

kubectl apply -f config/samples/sync_v1_namespacesync.yaml

# Check the status

kubectl get namespacesync

# Verify resources were synced

kubectl get configmap -n target-namespace-1

Deploying Your Operator

Building and Pushing the Container Image

# Set the image name

export IMG=yourdockerhub/namespace-sync:v0.1.0

# Build and push

make docker-build docker-push IMG=$IMG

Deploying to a Kubernetes Cluster

# Install the CRDs

make install

# Deploy the controller

make deploy IMG=$IMG

Verifying the Deployment

# Check the operator deployment

kubectl get pods -n namespace-sync-system

# Check the CRD

kubectl get crd | grep namespacesync

# Check if any CRs exist

kubectl get namespacesync --all-namespaces

Advanced Topics

Finalizers

Finalizers ensure proper cleanup when resources are deleted:

const finalizerName = "namespacesync.sync.nsync.dev/finalizer"

func (r *NamespaceSyncReconciler) handleDeletion(ctx context.Context, namespacesync *syncv1.NamespaceSync) (ctrl.Result, error) {

log := log.FromContext(ctx)

// Perform cleanup here...

log.Info("Cleaning up resources")

// When finished, remove the finalizer

controllerutil.RemoveFinalizer(namespacesync, finalizerName)

if err := r.Update(ctx, namespacesync); err != nil {

return ctrl.Result{}, err

}

log.Info("Finalizer removed")

return ctrl.Result{}, nil

}
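The way finalizers delay deletion can be modeled in a few lines of plain Go. This is a conceptual sketch only: the object type and the helper functions are hypothetical stand-ins for the object metadata and the controllerutil helpers, and canBeRemoved approximates the API server rule that an object marked for deletion is removed only once its finalizer list is empty.

```go
package main

import "fmt"

const finalizerName = "namespacesync.sync.nsync.dev/finalizer"

// object is a simplified stand-in for a Kubernetes object's metadata.
type object struct {
	Finalizers []string
	Deleting   bool // stands in for a non-zero deletionTimestamp
}

func containsFinalizer(o *object, name string) bool {
	for _, f := range o.Finalizers {
		if f == name {
			return true
		}
	}
	return false
}

// addFinalizer appends name if it is absent and reports whether it changed the object.
func addFinalizer(o *object, name string) bool {
	if containsFinalizer(o, name) {
		return false
	}
	o.Finalizers = append(o.Finalizers, name)
	return true
}

// removeFinalizer drops name and reports whether it changed the object.
func removeFinalizer(o *object, name string) bool {
	kept := o.Finalizers[:0]
	changed := false
	for _, f := range o.Finalizers {
		if f == name {
			changed = true
			continue
		}
		kept = append(kept, f)
	}
	o.Finalizers = kept
	return changed
}

// canBeRemoved models the API server rule for objects marked for deletion.
func canBeRemoved(o *object) bool {
	return o.Deleting && len(o.Finalizers) == 0
}

func main() {
	obj := &object{}
	addFinalizer(obj, finalizerName)

	obj.Deleting = true            // a user runs kubectl delete
	fmt.Println(canBeRemoved(obj)) // cleanup has not run yet

	removeFinalizer(obj, finalizerName) // the controller finished its cleanup
	fmt.Println(canBeRemoved(obj))
}
```

Both helpers are idempotent, so calling them again on a later reconcile does not change the object a second time.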

Webhooks

Kubebuilder supports generating admission webhooks for validation and defaulting:

# Add webhook support to your API

kubebuilder create webhook --group sync --version v1 --kind NamespaceSync --defaulting --programmatic-validation

Metrics and Monitoring

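The controller-runtime library automatically exposes Prometheus metrics for its controllers. Custom metrics need to be registered with its registry (metrics.Registry from sigs.k8s.io/controller-runtime/pkg/metrics) to appear on the same endpoint:

var (

// Register with the controller-runtime registry so the manager's metrics endpoint serves this counter

syncCount = promauto.With(metrics.Registry).NewCounterVec(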

prometheus.CounterOpts{

Name: "namespacesync_resources_synced_total",

Help: "Number of resources synced by the operator",

},

[]string{"kind", "source_namespace", "target_namespace"},

)

)

// Use in your reconciler

syncCount.WithLabelValues(res.Kind, sourceNamespace, targetNs).Inc()

Best Practices for Operator Development

- Follow the single responsibility principle - one operator for one application type

- Make operations idempotent - reconcile function should be safe to call multiple times

- Design for eventual consistency - handle delays and unavailability gracefully

- Add proper status reporting so users can understand the current state

- Structure your reconciliation so that every pass moves the actual state closer to the desired state, even if it is interrupted partway

- Use owner references to manage related resources

- Add clear documentation with examples in your CRDs

- Include meaningful validation to catch errors early

- Use the manager's cache and shared informers for watching resources instead of polling the API server

- Implement proper error handling with appropriate backoff

- Add thorough unit and integration tests

- Minimize container image size for faster deployments

- Implement proper logging with structured contextual information

- Add Prometheus metrics for monitoring

- Set resource requests and limits on controller pods

- Implement leader election for high availability

- Version your APIs following Kubernetes conventions (v1alpha1, v1beta1, v1) and use semantic versioning for operator releases

- Document upgrade procedures for your operator
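Two of the practices above, idempotent reconciliation and error handling with backoff, work together: returning a non-nil error from Reconcile makes controller-runtime requeue the request through a rate-limited work queue. The sketch below illustrates the idea of capped exponential backoff using only the standard library. The backoff function and the base and max values are illustrative assumptions, not the defaults of any library.

```go
package main

import (
	"fmt"
	"time"
)

// backoff returns the delay before retry number failures (1-based).
// The delay doubles from base on each failure and never exceeds max.
func backoff(failures int, base, max time.Duration) time.Duration {
	if failures < 1 {
		return 0
	}
	delay := base
	for i := 1; i < failures; i++ {
		delay *= 2
		if delay >= max {
			return max
		}
	}
	if delay > max {
		return max
	}
	return delay
}

func main() {
	// Illustrative values: start at 1s and cap at 10s.
	for n := 1; n <= 5; n++ {
		fmt.Println(n, backoff(n, time.Second, 10*time.Second))
	}
}
```

Capping the delay keeps a persistently failing resource from being retried too aggressively while still bounding how long recovery takes once the underlying problem is fixed.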

Conclusion

Building Kubernetes Operators with Kubebuilder streamlines the development process for extending Kubernetes with custom resources and controllers. This guide demonstrated the key steps in creating an operator, from project initialization to deployment, using a real-world Namespace Synchronization example.

As your operators grow more complex, leveraging the full power of Kubebuilder’s scaffolding, code generation, and testing tools will help you create robust, production-ready extensions to Kubernetes.
