
Kubernetes Network Traffic Flow

Understanding network communication in CSP and On-premise environments

Overview

Understanding Kubernetes network traffic flow is crucial for proper cluster architecture, security, and troubleshooting.

This guide explores networking in both Cloud Service Provider (CSP) and on-premise environments, detailing how traffic moves through different components of a Kubernetes cluster.

Kubernetes networking is built on several key principles:

- Every Pod gets its own IP address

- Pods can communicate with all other pods on any node without NAT

- Agents on a node (for example, system daemons and the kubelet) can communicate with all pods on that node

- The Pod network is flat: all pods can reach each other directly

Network Traffic Paths

Kubernetes networking involves several distinct traffic paths, each with its own characteristics and components.

Network Environments and Traffic Flow

CSP Environment Traffic Flow

In cloud service provider environments (AWS, GCP, Azure, etc.), Kubernetes leverages cloud infrastructure for external connectivity.

Diagram: CSP traffic flow (external traffic → cloud load balancer → Ingress Controller / Service of type LoadBalancer → routing to Pod 1, Pod 2, Pod 3)

Key Components in CSP Environments

- External Load Balancer:
  - Provided by the cloud provider (e.g., AWS ELB/ALB, GCP Load Balancer, Azure Load Balancer)
  - Located outside the Kubernetes cluster in the cloud provider’s infrastructure
  - Automatically provisioned when a Service of type LoadBalancer is created
  - Routes external traffic to nodes in the cluster (or, on some providers, directly to Pod IPs)

- Ingress Controller/Service:
  - Ingress Controllers handle HTTP/HTTPS traffic routing based on hostnames and paths
  - Services of type LoadBalancer can also expose non-HTTP (TCP/UDP) traffic directly
  - Both receive traffic from the cloud load balancer and route it to the appropriate pods

- Pods:
  - End destinations for network traffic
  - Run application containers
  - Each has a unique IP address within the cluster
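The following is a minimal sketch of a Service of type LoadBalancer. Creating a resource like this is what prompts the cloud provider to provision an external load balancer. The name `web`, the label `app: web`, and the port numbers are hypothetical placeholders. Provider-specific behavior, such as load balancer type or internal vs. internet-facing, is usually controlled by annotations that differ per cloud, and these are omitted here.

```yaml
# Hypothetical Service; on a CSP-managed cluster, creating it asks the
# cloud provider to provision an external load balancer.
apiVersion: v1
kind: Service
metadata:
  name: web            # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: web           # must match the labels on the target Pods
  ports:
    - name: http
      protocol: TCP
      port: 80         # port exposed on the load balancer
      targetPort: 8080 # port the container listens on
```

After the Service is applied, `kubectl get service web` shows `<pending>` in the EXTERNAL-IP column until the provider finishes provisioning. It then shows the load balancer’s address, which is an IP or, on some providers such as AWS, a DNS hostname.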

On-premise Environment Traffic Flow

On-premise Kubernetes deployments require different networking approaches since they don’t have cloud provider integration.

Diagram: on-premise traffic flow (external traffic → external load balancer or router → Ingress Controller exposed via NodePort or MetalLB → routing to Pod 1, Pod 2, Pod 3)

Key Components in On-premise Environments

- External Load Balancer/Router:
  - Self-managed hardware or software load balancer (e.g., F5, NGINX, HAProxy)
  - Located within the on-premise data center
  - Manually configured to route traffic to Kubernetes nodes

- Ingress Controller/Service:
  - Similar role as in CSP environments
  - Often exposed via NodePort services or with MetalLB
  - Receive traffic from the external load balancer and route it to appropriate pods

- Pods:
  - Functionally identical to pods in CSP environments
  - Network connectivity may be implemented differently depending on CNI plugin choice

Ingress Controllers in Different Environments

Ingress Controllers in CSP Environments

In cloud environments, ingress controllers are tightly integrated with cloud provider load balancing services.

When you deploy an Ingress Controller in a CSP environment:

- The Ingress Controller pod(s) are deployed in the cluster

- A Service of type LoadBalancer is created for the Ingress Controller

- The CSP automatically provisions an external load balancer and assigns it a public IP

- The CSP configures the load balancer to route traffic to the Ingress Controller

- The Ingress Controller handles routing based on Ingress resources

# Example AWS ALB Ingress Controller configuration
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    kubernetes.io/ingress.class: alb
    alb.ingress.kubernetes.io/scheme: internet-facing
spec:
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 80
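The `kubernetes.io/ingress.class` annotation used above has been deprecated since Kubernetes 1.18 in favor of the `spec.ingressClassName` field. Below is a sketch of the same Ingress written that way. It assumes an IngressClass named `alb` exists in the cluster (the AWS Load Balancer Controller’s Helm chart typically creates one), and it reuses `example-service` from the example above.

```yaml
# Same routing as the annotation-based example, but selecting the
# controller through spec.ingressClassName.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-ingress
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
spec:
  ingressClassName: alb   # assumes an IngressClass named "alb" exists
  rules:
    - host: example.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: example-service
                port:
                  number: 80
```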

Ingress Controllers in On-premise Environments

In on-premise environments, a Service of type LoadBalancer doesn’t receive an external IP without additional components (`kubectl get service` keeps showing its EXTERNAL-IP as `<pending>`), so several challenges must be addressed:

- No automatic external IP assignment for LoadBalancer services

- Manual configuration of external load balancers

- IP address management

- Network routing between external networks and the Kubernetes cluster

On-premise deployments typically use one of these approaches:

- NodePort Services:
  - Expose the Ingress Controller on a port on each node (30000-32767 range by default)
  - Configure the external load balancer to distribute traffic to these ports across nodes

- MetalLB or similar solutions:
  - Enable the LoadBalancer service type in on-premise environments
  - Provide automatic IP assignment from a configured pool
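For the NodePort approach, the Service in front of an Ingress Controller might look like the following sketch. The name, namespace, labels, and the fixed `nodePort` values 30080 and 30443 are hypothetical. Pinning these ports makes it easier to point an external load balancer (F5, NGINX, HAProxy, etc.) at a stable port on every node. If `nodePort` is omitted, Kubernetes picks a free port from the 30000-32767 range.

```yaml
# Hypothetical Service exposing an Ingress Controller on every node.
apiVersion: v1
kind: Service
metadata:
  name: ingress-controller        # hypothetical name
  namespace: ingress              # hypothetical namespace
spec:
  type: NodePort
  selector:
    app: ingress-controller       # must match the controller Pods' labels
  ports:
    - name: http
      port: 80
      targetPort: 80
      nodePort: 30080             # external LB sends HTTP to <node-ip>:30080
    - name: https
      port: 443
      targetPort: 443
      nodePort: 30443             # external LB sends HTTPS to <node-ip>:30443
```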

MetalLB for On-premise Load Balancing

MetalLB is a popular solution for implementing LoadBalancer services in on-premise Kubernetes clusters.

Diagram: MetalLB traffic flow (external IP address → MetalLB speaker, Layer 2 or BGP → Kubernetes Service of type LoadBalancer → target Pods)

MetalLB Configuration

MetalLB works in two modes:

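- Layer 2 Mode: Uses ARP (IPv4) or NDP (IPv6) to announce service IPs on the local network. A single node answers for each IP, so this mode provides failover rather than load balancing across nodes

- BGP Mode: Establishes BGP peering sessions with network routers and advertises service IPs as routes, letting the routers spread traffic across multiple nodes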

# Example MetalLB configuration
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: production-pool
  namespace: metallb-system
spec:
  addresses:
    - 192.168.1.100-192.168.1.150
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: l2-advertisement
  namespace: metallb-system
spec:
  ipAddressPools:
    - production-pool
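The example above uses Layer 2 mode. For BGP mode, a sketch of the corresponding resources follows. It assumes a MetalLB release that uses the `metallb.io` CRDs (v0.13 or later). The router address 192.168.1.1 and the ASNs 64500/64501 are hypothetical placeholders and must match the configuration of your actual router.

```yaml
# Peer every MetalLB speaker with an upstream router (hypothetical values).
apiVersion: metallb.io/v1beta2
kind: BGPPeer
metadata:
  name: upstream-router
  namespace: metallb-system
spec:
  myASN: 64500              # ASN used by the MetalLB speakers
  peerASN: 64501            # ASN of the router
  peerAddress: 192.168.1.1  # router address reachable from the nodes
---
# Advertise addresses from production-pool to the router over BGP.
apiVersion: metallb.io/v1beta1
kind: BGPAdvertisement
metadata:
  name: bgp-advertisement
  namespace: metallb-system
spec:
  ipAddressPools:
    - production-pool
```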

When a LoadBalancer service is deployed with MetalLB:

- MetalLB assigns an IP from the configured pool

- The speaker component advertises the IP on the local network

- Traffic sent to that IP is routed to the appropriate service

- The service distributes traffic to the pods
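A Service that asks MetalLB for an address might look like the sketch below. Without an annotation, MetalLB allocates from any pool enabled for automatic assignment. The `metallb.universe.tf/address-pool` annotation used here restricts the choice to `production-pool`; it is assumed to be supported by your MetalLB version, since newer releases also offer `metallb.io/`-prefixed equivalents. The Service name and labels are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: web                       # hypothetical name
  annotations:
    # Ask MetalLB to allocate the external IP from production-pool.
    metallb.universe.tf/address-pool: production-pool
spec:
  type: LoadBalancer
  selector:
    app: web                      # hypothetical Pod label
  ports:
    - port: 80
      targetPort: 8080
```

Once MetalLB assigns an address, it appears in the EXTERNAL-IP column of `kubectl get service web` and falls within 192.168.1.100-192.168.1.150.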

Component Communication in Kubernetes

Kubernetes networking enables several types of communication between different components in a cluster.

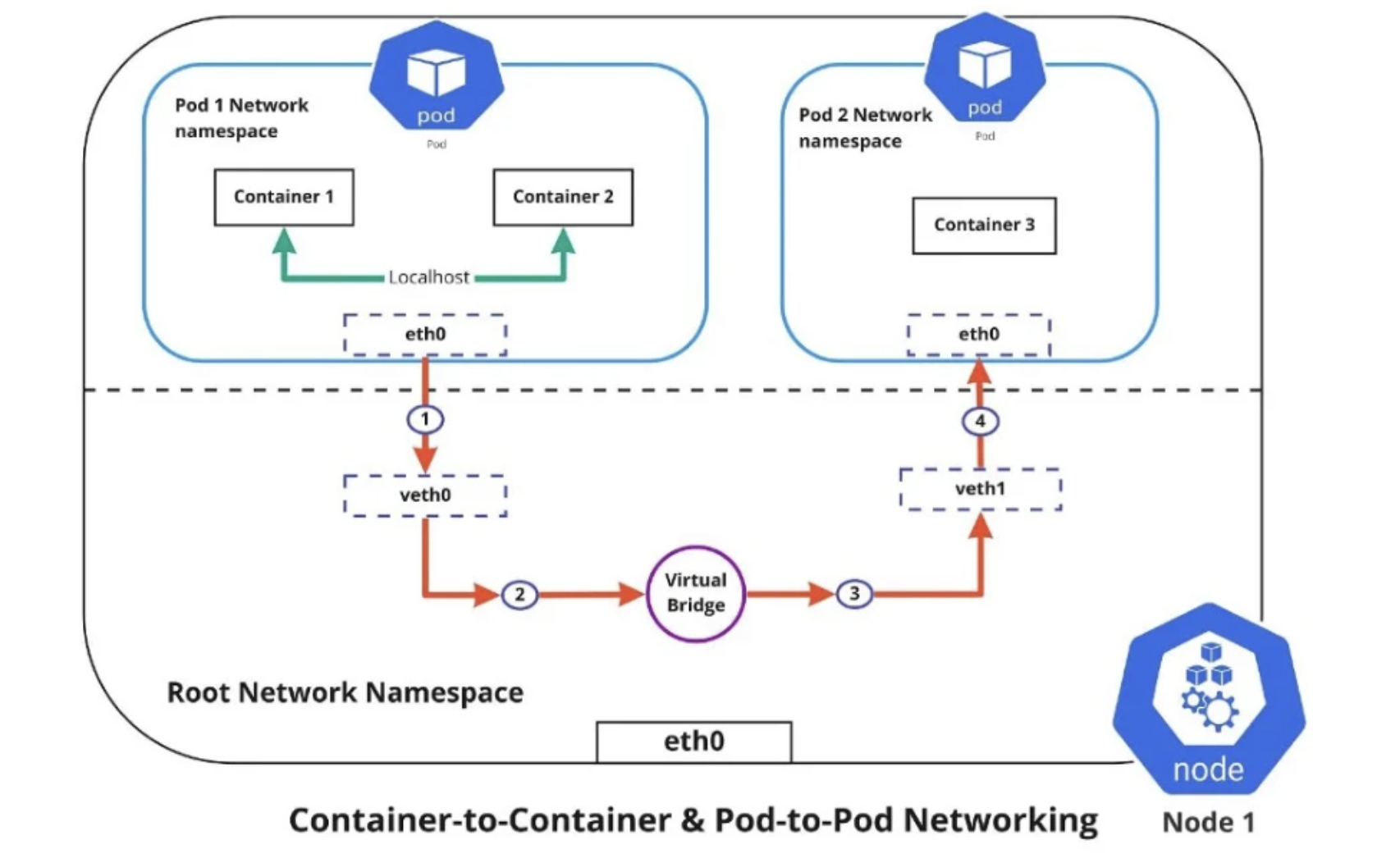

Container-to-Container Communication

Containers within the same Pod share the same network namespace, enabling localhost communication.

Diagram: Container A (port 8080) and Container B (port 9090) inside one Pod; A reaches B at localhost:9090 and B reaches A at localhost:8080.

Key Characteristics:

- Containers in the same pod share the same IP address

- They can communicate via localhost

- Shared volumes allow file-based communication

- Container ports must be unique within a pod

- This enables sidecar, ambassador, and adapter patterns
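The hypothetical Pod below is a minimal sketch of localhost communication. It runs an nginx container alongside a sidecar that polls it over `localhost`. The Pod name, image tags, and polling loop are illustrative assumptions, not a recommended production pattern.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: localhost-demo            # hypothetical name
spec:
  containers:
    - name: web
      image: nginx:1.27           # nginx listens on port 80 by default
      ports:
        - containerPort: 80
    - name: sidecar
      image: busybox:1.36
      # Both containers share the Pod's network namespace, so the sidecar
      # reaches nginx on localhost without knowing the Pod IP.
      command: ["sh", "-c", "while true; do wget -q -O /dev/null http://localhost:80/ && echo 'nginx reachable on localhost'; sleep 5; done"]
```

The loop keeps retrying, so it tolerates the sidecar starting before nginx is ready.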

Pod-to-Pod Communication

Kubernetes ensures all pods can communicate directly with each other regardless of their node location.

Diagram: Node 1 runs Pod A (10.244.1.2) and Pod B (10.244.1.3); Node 2 runs Pod C (10.244.2.2) and Pod D (10.244.2.3); every Pod communicates directly with every other Pod.

Key Characteristics:

- Every pod has a unique IP address

- All pods can reach each other without NAT

- CNI (Container Network Interface) plugins implement this functionality

- Different CNI implementations (Calico, Flannel, Cilium, etc.) provide various features and performance characteristics

- Cross-node communication typically uses overlay networks or direct routing
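How a CNI plugin divides up the Pod network is driven by configuration. As one example, Flannel reads a `net-conf.json` document like the sketch below. `Network` is the cluster-wide Pod CIDR, and Flannel leases each node a subnet from it (a /24 by default). This matches the addresses above, with Pods on Node 1 in 10.244.1.x and Pods on Node 2 in 10.244.2.x. The `vxlan` backend is an overlay, while Flannel’s `host-gw` backend uses direct routing instead. The ConfigMap name and namespace shown are what recent upstream Flannel manifests use, but they are assumptions that vary by installation, and the manifest’s `cni-conf.json` key is omitted.

```yaml
# Sketch of Flannel's network configuration (details vary by version).
# "Network" is the cluster Pod CIDR; "Backend" selects overlay (vxlan)
# or direct routing (host-gw) for cross-node traffic.
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
data:
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
```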

Pod-to-Service Communication

Services provide stable endpoints for pods, handling internal load balancing and service discovery.

Diagram: a Pod (10.244.1.2) sends traffic to a Service’s ClusterIP (10.96.0.10), which forwards it to one of three endpoints: 10.244.2.2, 10.244.3.3, or 10.244.1.4.

Key Components:

- Pod: Source of traffic, with its own IP address

- Virtual Interface: Each pod has an eth0 interface connecting to the node’s network

- Node: Hosts multiple pods and manages network traffic

- kube-proxy: Runs on each node and programs service routing using iptables or IPVS (recent releases also support nftables; the legacy userspace mode was removed in Kubernetes 1.26)

- Service: Provides a stable ClusterIP that routes to a dynamic set of pod endpoints

- Cluster Network: Enables all communication across the cluster
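Here is a minimal sketch of a ClusterIP Service. The hypothetical `backend` Service selects Pods labeled `app: backend`. The control plane turns that selector into the set of endpoint Pod IPs, and kube-proxy load-balances across them. The names and ports are assumptions. The `clusterIP` field is normally left unset so that Kubernetes allocates an address from the Service CIDR.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend          # hypothetical; resolvable as backend.<namespace>.svc.cluster.local
spec:
  type: ClusterIP        # the default type; shown for clarity
  selector:
    app: backend         # Pods with this label become the Service's endpoints
  ports:
    - protocol: TCP
      port: 80           # port clients use on the ClusterIP
      targetPort: 8080   # port the backend containers listen on
```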

Internet-to-Service Communication

External traffic enters the cluster through several different mechanisms.

Diagram: external client access paths, all ending at the target Pods. LoadBalancer: cloud load balancer → Service (LoadBalancer). NodePort: any node on port 30000-32767 → Service (NodePort). Ingress: Ingress Controller → Ingress resource → Service (ClusterIP).

Access Types:

- LoadBalancer Service:
  - Automatically provisions a cloud load balancer in CSP environments
  - Requires MetalLB or a similar solution in on-premise deployments
  - Provides a stable external IP address

- NodePort Service:
  - Opens a specific port on all nodes
  - Allows access via any node’s IP address
  - Port range is limited to 30000-32767 by default

- Ingress:
  - HTTP/HTTPS-based routing layer
  - Enables hostname and path-based routing
  - Can provide SSL termination, authentication, and more sophisticated traffic management
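The hypothetical Ingress below sketches host- and path-based routing with TLS termination. For app.example.com, it sends `/api` traffic to a `backend` Service and everything else to a `frontend` Service. It assumes an Ingress Controller registered under an IngressClass named `nginx` and a TLS Secret named `app-example-tls` in the same namespace. All of these names are placeholders.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-ingress                 # hypothetical name
spec:
  ingressClassName: nginx           # assumes an IngressClass named "nginx"
  tls:
    - hosts:
        - app.example.com
      secretName: app-example-tls   # hypothetical TLS Secret; TLS terminates at the controller
  rules:
    - host: app.example.com
      http:
        paths:
          - path: /api              # longest matching path wins, so /api/... lands here
            pathType: Prefix
            backend:
              service:
                name: backend
                port:
                  number: 80
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend
                port:
                  number: 80
```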

Network Components and Features

Network Components Table

| Component | Purpose | Communication Type | Implementation Examples |
|---|---|---|---|
| Container | Application runtime environment | localhost (127.0.0.1) | Docker, containerd, CRI-O |
| Pod | Basic scheduling unit, shared network namespace | Pod IP (e.g., 10.244.1.2) | kubelet, container runtime |
| Service | Stable endpoint for pod sets | ClusterIP (e.g., 10.96.0.10) | kube-proxy, CoreDNS |
| Ingress | HTTP/HTTPS routing to services | HTTP(S) host/path based | NGINX, Traefik, Contour |
| CNI Plugin | Pod networking implementation | Pod-to-pod networking | Calico, Flannel, Cilium |
| Load Balancer | External traffic distribution | External IP to Service | Cloud LB, MetalLB, HAProxy |

Key Network Features

- Pod Networking (Layer 3)
  - Flat network space
  - No NAT between pods
  - Unique IP per pod
  - Implemented by CNI plugins

- Service Networking (Layer 4)
  - Stable endpoints via ClusterIP
  - Load balancing across pods
  - Service discovery via DNS
  - Implemented by kube-proxy and CoreDNS

- Application Routing (Layer 7)
  - HTTP/HTTPS routing via Ingress
  - Path and hostname based routing
  - SSL termination
  - Authentication options

Advanced Networking Concepts

Network Policies

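Network Policies provide firewall-like rules for pod-to-pod communication, enabling micro-segmentation. They are enforced by the CNI plugin, so they only take effect when the installed plugin supports them (for example, Calico or Cilium; Flannel alone does not enforce them).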

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-frontend-to-backend
spec:
  podSelector:
    matchLabels:
      app: backend
  policyTypes:
    - Ingress
  ingress:
    - from:
        - podSelector:
            matchLabels:
              app: frontend
      ports:
        - protocol: TCP
          port: 8080
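Allow rules like the one above are most useful on top of a default-deny baseline. A common sketch uses an empty `podSelector`, which matches every Pod in the namespace, and lists `Ingress` in `policyTypes` with no ingress rules. This denies all inbound traffic to those Pods unless another policy allows it.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}      # empty selector: applies to every Pod in the namespace
  policyTypes:
    - Ingress          # no ingress rules listed, so all inbound traffic is denied
```

Because policies are additive, combining this with `allow-frontend-to-backend` means backend Pods accept traffic only from frontend Pods on TCP 8080. Other Pods in the namespace accept no inbound traffic until policies are added for them.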

Service Mesh

Service meshes like Istio, Linkerd, and Consul provide advanced networking capabilities for microservices:

| Feature | Description |
|---|---|
| Traffic Management | Fine-grained routing, traffic splitting, A/B testing, canary deployments |
| Security | Mutual TLS, certificate management, authentication, authorization |
| Observability | Distributed tracing, metrics collection, traffic visualization |
| Reliability | Circuit breaking, retries, timeouts, fault injection |
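To make traffic splitting concrete, here is a sketch that assumes Istio. A DestinationRule defines `v1` and `v2` subsets using a hypothetical `version` Pod label. A VirtualService then sends 90% of requests for a hypothetical `reviews` Service to v1 and 10% to v2. Other meshes, such as Linkerd and Consul, express the same idea through different resources.

```yaml
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews            # hypothetical Service name
  subsets:
    - name: v1
      labels:
        version: v1        # Pods labeled version=v1
    - name: v2
      labels:
        version: v2        # Pods labeled version=v2
---
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
    - reviews
  http:
    - route:
        - destination:
            host: reviews
            subset: v1
          weight: 90       # 90% of requests go to the stable version
        - destination:
            host: reviews
            subset: v2
          weight: 10       # 10% canary traffic
```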

DNS in Kubernetes

CoreDNS provides service discovery within Kubernetes clusters:

- Each Service gets a DNS entry: <service-name>.<namespace>.svc.cluster.local (cluster.local is the default cluster domain)

- Pods can have DNS entries (if enabled): <pod-ip-with-dashes>.<namespace>.pod.cluster.local

- DNS resolution is handled by CoreDNS pods in the kube-system namespace
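In practice, applications usually reference Services by DNS name rather than by ClusterIP. In the hypothetical Pod below, the `BACKEND_URL` environment variable uses the fully qualified name of a `backend` Service in the `default` namespace. From a Pod in the same namespace, the short name `backend` would also resolve, because the Pod’s DNS search path includes `<namespace>.svc.cluster.local`. The Pod name, image, and variable are illustrative assumptions.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: client                     # hypothetical name
  namespace: default
spec:
  containers:
    - name: client
      image: busybox:1.36
      env:
        - name: BACKEND_URL        # hypothetical variable read by the app
          value: "http://backend.default.svc.cluster.local:80"
      # Resolve the Service name through CoreDNS, then idle.
      command: ["sh", "-c", "nslookup backend.default.svc.cluster.local; sleep 3600"]
```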

Troubleshooting Kubernetes Networking

Common networking issues and diagnostic commands:

# Check if pods can reach each other

kubectl exec -it <pod-name> -- ping <other-pod-ip>

# Check DNS resolution from a pod

kubectl exec -it <pod-name> -- nslookup <service-name>

# Examine service configuration

kubectl describe service <service-name>

# Check endpoints for a service

kubectl get endpoints <service-name>

# Test connectivity to a service

kubectl exec -it <pod-name> -- curl <service-name>:<port>

# Check ingress configuration

kubectl describe ingress <ingress-name>

# View network policies

kubectl get networkpolicies
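Many application images lack tools such as `nslookup`, `ping`, or `curl`, so the commands above can fail for reasons unrelated to networking. One option, adapted from the Kubernetes "Debugging DNS Resolution" guide, is to run the checks from a throwaway utility Pod. The image below is the one that guide uses for DNS checks. Any image that carries the tools you need can be substituted.

```yaml
# Throwaway Pod for running network diagnostics with kubectl exec.
apiVersion: v1
kind: Pod
metadata:
  name: dnsutils
  namespace: default
spec:
  containers:
    - name: dnsutils
      image: registry.k8s.io/e2e-test-images/jessie-dnsutils:1.3
      command: ["sleep", "infinity"]   # keep the container running for kubectl exec
      imagePullPolicy: IfNotPresent
  restartPolicy: Always
```

Once the Pod is running, a check such as `kubectl exec -it dnsutils -- nslookup kubernetes.default` runs from inside the cluster network. Remove the Pod with `kubectl delete pod dnsutils` when finished.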

Key Points

- Architecture Differences
  - CSP environments leverage cloud load balancers
  - On-premise deployments require solutions like MetalLB
  - Both share common internal networking principles

- Traffic Flow
  - External → Ingress/Service → Pods → Containers
  - Pod-to-Pod communication across nodes
  - Service abstraction for stable endpoints

- Implementation Considerations
  - CNI plugin selection affects performance and features
  - NetworkPolicy support varies by CNI plugin
  - Service mesh adds overhead but provides advanced features
