
Automated Ceph Cluster Deployment with Cephadm-Ansible and Kubernetes Integration

Complete automation workflow for Ceph deployment using cephadm-ansible and seamless integration with Kubernetes via Ceph-CSI

Overview

In this article, we'll walk through automating a Ceph cluster installation with cephadm-ansible and integrating the cluster with Kubernetes.

Cephadm is a container-based Ceph management tool, while cephadm-ansible provides separate Ansible automation for repetitive tasks such as initial host setup, cluster management, and purging operations that are not directly handled by cephadm itself.

The process involves provisioning VMs with Terraform, building a Kubernetes cluster with Kubespray, and then using cephadm and Ansible scripts to bootstrap the Ceph cluster and configure the MON/MGR and OSD nodes.

We'll also install the Ceph-CSI driver so Kubernetes can use Ceph RBD storage, then configure a StorageClass and a test Pod to demonstrate the complete workflow.

What is Cephadm?

Cephadm is Ceph's current deployment and management tool, introduced in the Octopus (v15) release.

It’s designed to simplify deploying, configuring, managing, and scaling Ceph clusters. It can bootstrap a cluster with a single command and deploys Ceph services using container technology.

Cephadm doesn’t rely on external configuration tools like Ansible, Rook, or Salt. However, these external configuration tools can be used to automate tasks not performed by cephadm itself.


What is Cephadm-ansible?

Cephadm-ansible is a collection of Ansible playbooks designed to simplify workflows not covered by cephadm itself.

The workflows it handles include:

- Preflight: Initial host setup before bootstrapping the cluster

- Client: Client host configuration

- Purge: Ceph cluster removal
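Each workflow corresponds to a playbook in the repository. The sketch below maps the workflow names above to playbook files and prints the matching ansible-playbook command without running it. cephadm-preflight.yml and cephadm-clients.yml appear in this article's scripts. The cephadm-purge-cluster.yml name is our assumption about the repository layout. The playbook_for and playbook_cmd helpers are hypothetical.

```shell
# Hypothetical helpers: map a cephadm-ansible workflow to its playbook and
# print (not run) the ansible-playbook command for it.
playbook_for() {
  case "$1" in
    preflight) echo "cephadm-preflight.yml" ;;
    client)    echo "cephadm-clients.yml" ;;
    purge)     echo "cephadm-purge-cluster.yml" ;;  # assumed file name
    *)         echo "unknown workflow: $1" >&2; return 1 ;;
  esac
}

# Usage: playbook_cmd WORKFLOW INVENTORY [EXTRA_ARGS...]
playbook_cmd() {
  workflow=$1
  inventory=$2
  shift 2
  pb=$(playbook_for "$workflow") || return 1
  if [ "$#" -gt 0 ]; then
    echo "ansible-playbook -i $inventory $pb $*"
  else
    echo "ansible-playbook -i $inventory $pb"
  fi
}
```

For example, `playbook_cmd purge inventory.ini -e fsid="$FSID"` prints a purge command for a given cluster. Our understanding is that the purge playbook expects the cluster FSID, but check the repository README for the exact variables each playbook requires.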

Kubernetes Installation

Refer to the linked article for the Kubernetes installation itself. Important: the storage node must have at least 32 GB of memory.

Infrastructure Configuration

Master Node (Control Plane)

| Host | IP | CPU | Memory |
|---|---|---|---|
| test-server | 10.77.101.47 | 16 | 32 GB |

Worker Nodes

| Host | IP | CPU | Memory |
|---|---|---|---|
| test-server-agent | 10.77.101.43 | 16 | 32 GB |
| test-server-storage | 10.77.101.48 | 16 | 32 GB |

Terraform Configuration for Storage Node

Add the following configuration:

Verify Kubernetes Installation

kubectl get nodes
NAME                STATUS   ROLES           AGE     VERSION
test-server         Ready    control-plane   6m27s   v1.29.1
test-server-agent   Ready    <none>          5m55s   v1.29.1
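The output above lists two nodes, while the worker table also includes test-server-storage. The hypothetical check_nodes helper below makes such gaps obvious. It reads kubectl get nodes --no-headers output and reports any expected node that is missing or whose STATUS is not exactly Ready.

```shell
# Hypothetical check.
# Usage: kubectl get nodes --no-headers | check_nodes NODE...
# Prints "not-ready <name>" for listed nodes whose STATUS is not exactly
# "Ready", and "missing <name>" for expected nodes absent from the list.
# Returns 1 if any problem was found, 0 otherwise.
check_nodes() {
  awk -v expected="$*" '
    BEGIN { n = split(expected, want, " ") }
    {
      seen[$1] = 1
      if ($2 != "Ready") { print "not-ready " $1; bad = 1 }
    }
    END {
      for (i = 1; i <= n; i++)
        if (!(want[i] in seen)) { print "missing " want[i]; bad = 1 }
      exit bad
    }'
}
```

For example: `kubectl get nodes --no-headers | check_nodes test-server test-server-agent test-server-storage`.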

Cephadm-Ansible Installation

Clone Repository and Setup Environment

git clone https://github.com/ceph/cephadm-ansible
VENVDIR=cephadm-venv
CEPHADM_DIR=cephadm-ansible
python3.10 -m venv "$VENVDIR"
source "$VENVDIR/bin/activate"
cd "$CEPHADM_DIR"
pip install -U -r requirements.txt
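The later steps call ansible-playbook, ssh-copy-id, helm, and kubectl. A small guard, sketched below as the hypothetical require_cmds, can run right after activating the venv. It fails early with a clear message if one of those tools is not on PATH.

```shell
# Hypothetical guard: report every missing command, then fail if any were missing.
# Usage: require_cmds CMD...
require_cmds() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `require_cmds ansible-playbook ssh-copy-id || exit 1`. cephadm itself is installed later by the preflight playbook, so don't check for it at this point.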

Verify Storage Disks

Check disk configuration on the test-server-storage node:

lsblk
NAME    MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
loop0     7:0    0  63.9M  1 loop /snap/core20/2105
loop1     7:1    0 368.2M  1 loop /snap/google-cloud-cli/207
loop2     7:2    0  40.4M  1 loop /snap/snapd/20671
loop3     7:3    0  91.9M  1 loop /snap/lxd/24061
sda       8:0    0    50G  0 disk
├─sda1    8:1    0  49.9G  0 part /
├─sda14   8:14   0     4M  0 part
└─sda15   8:15   0   106M  0 part /boot/efi
sdb       8:16   0    50G  0 disk
sdc       8:32   0    50G  0 disk
sdd       8:48   0    50G  0 disk
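Here sdb, sdc, and sdd are unpartitioned 50G disks, which makes them OSD candidates, while sda holds the OS. The hypothetical helper below picks such disks out of lsblk's raw output. It lists every device of TYPE disk that is never named as the parent (PKNAME) of another device. It does not look for LVM signatures or filesystems written directly to a disk, so treat it as a first filter only.

```shell
# Hypothetical helper.
# Usage: lsblk -rn -o NAME,TYPE,PKNAME | find_candidate_disks
# Prints whole disks that have no child devices (partitions, LVs, ...).
find_candidate_disks() {
  awk '
    {
      name[NR] = $1
      type[NR] = $2
      if ($3 != "") parent[$3] = 1   # remember every device that has children
    }
    END {
      for (i = 1; i <= NR; i++)
        if (type[i] == "disk" && !(name[i] in parent)) print name[i]
    }'
}
```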

Configuration Files Setup

Create Inventory File

# inventory.ini
[all]
test-server ansible_host=10.77.101.47
test-server-agent ansible_host=10.77.101.43
test-server-storage ansible_host=10.77.101.48

# Ceph Client Nodes (Kubernetes nodes that require access to Ceph storage)
[clients]
test-server
test-server-agent
test-server-storage

# Admin Node (usually the first monitor node)
[admin]
test-server
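HOST_GROUP in ceph_vars.sh repeats the host names from this inventory, so the two can drift apart. As a sketch, the hypothetical inventory_group_hosts helper prints the host aliases of one INI group. It only handles the simple layout used here, with no host ranges and no :children groups.

```shell
# Hypothetical helper.
# Usage: inventory_group_hosts FILE GROUP
# Prints the first field (host alias) of each host line in [GROUP],
# skipping blank lines and lines starting with # or ;.
inventory_group_hosts() {
  awk -v group="[$2]" '
    /^[ \t]*$/    { next }
    /^[ \t]*[#;]/ { next }
    /^\[/         { in_group = ($1 == group); next }
    in_group      { print $1 }
  ' "$1"
}
```

For example, `inventory_group_hosts inventory.ini clients` should print the same three names that HOST_GROUP lists.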

Update cephadm-distribute-ssh-key.yml

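Fix the file attribute check. Ansible's stat module reports a regular file as isreg and has no isfile key, so the original condition refers to an attribute that does not exist:

# Change this line:
# or not cephadm_pubkey_path_stat.stat.isfile | bool
# To:
or not cephadm_pubkey_path_stat.stat.isreg | bool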
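To apply the change without editing by hand, a single sed substitution is enough. This sketch assumes GNU sed's in-place option and keeps a .bak copy of the original. The fix_stat_attr name is hypothetical.

```shell
# Hypothetical helper: replace .stat.isfile with .stat.isreg in the given
# playbook, keeping the original as FILE.bak (GNU sed -i syntax).
fix_stat_attr() {
  sed -i.bak 's/cephadm_pubkey_path_stat\.stat\.isfile/cephadm_pubkey_path_stat.stat.isreg/g' "$1"
}
```

For example: `fix_stat_attr cephadm-distribute-ssh-key.yml`.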

Complete corrected file:

Update cephadm-preflight.yml

Add Ubuntu-specific tasks:

- name: Ubuntu related tasks
  when: ansible_facts['distribution'] == 'Ubuntu'
  block:
    - name: Update apt cache
      apt:
        update_cache: yes
        cache_valid_time: 3600
      changed_when: false

    - name: install container engine
      block:
        - name: install docker
          # ... existing docker installation tasks

        - name: ensure Docker is running
          service:
            name: docker
            state: started
            enabled: true

    - name: install jq package
      apt:
        name: jq
        state: present
        update_cache: yes
      register: result
      until: result is succeeded

Automation Scripts

Variables Configuration (ceph_vars.sh)

#!/bin/bash

# Define variables (modify as needed)
SSH_KEY="/home/somaz/.ssh/id_rsa_ansible"    # SSH key path
INVENTORY_FILE="inventory.ini"               # Inventory path
CEPHADM_PREFLIGHT_PLAYBOOK="cephadm-preflight.yml"
CEPHADM_CLIENTS_PLAYBOOK="cephadm-clients.yml"
CEPHADM_DISTRIBUTE_SSHKEY_PLAYBOOK="cephadm-distribute-ssh-key.yml"
HOST_GROUP=(test-server test-server-agent test-server-storage)  # All hosts
ADMIN_HOST="test-server"                     # Admin host name
OSD_HOST="test-server-storage"               # OSD host name
HOST_IPS=("10.77.101.47" "10.77.101.43" "10.77.101.48")  # Same order as HOST_GROUP; HOST_IPS[0] is the bootstrap MON IP
OSD_DEVICES=("sdb" "sdc" "sdd")              # OSD devices, without /dev/ prefix
CLUSTER_NETWORK="10.77.101.0/24"             # Cluster network CIDR
SSH_USER="somaz"                             # SSH user
CLEANUP_CEPH="false"                         # Overwritten by the prompt in setup_ceph_cluster.sh
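The setup script pairs HOST_GROUP with HOST_IPS by position and takes the bootstrap MON IP from HOST_IPS[0]. A mistake in these variables would therefore only surface midway through the run. The hypothetical validate_host_ips check below catches the common mistakes up front. It is written in POSIX sh, so it can be sourced from the bash scripts.

```shell
# Hypothetical sanity check for ceph_vars.sh.
# Usage: validate_host_ips "${HOST_GROUP[*]}" "${HOST_IPS[*]}" "$ADMIN_HOST" "$OSD_HOST"
count_words() { echo "$#"; }

validate_host_ips() {
  hosts=$1
  ips=$2
  admin=$3
  osd=$4
  rc=0
  # $hosts and $ips are left unquoted on purpose so they split into words.
  nh=$(count_words $hosts)
  ni=$(count_words $ips)
  if [ "$nh" -ne "$ni" ]; then
    echo "HOST_GROUP has $nh entries but HOST_IPS has $ni" >&2
    rc=1
  fi
  # MON_IP comes from HOST_IPS[0], so the admin host is expected to be listed first.
  first=${hosts%% *}
  if [ "$first" != "$admin" ]; then
    echo "ADMIN_HOST ($admin) is not the first entry of HOST_GROUP ($first)" >&2
    rc=1
  fi
  case " $hosts " in
    *" $osd "*) ;;
    *) echo "OSD_HOST ($osd) is not in HOST_GROUP" >&2; rc=1 ;;
  esac
  return "$rc"
}
```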

Function Library (ceph_functions.sh)

Main Setup Script (setup_ceph_cluster.sh)

#!/bin/bash

# Load variables (ceph_vars.sh) and helper functions (ceph_functions.sh)
source ceph_vars.sh
source ceph_functions.sh

read -p "Do you want to cleanup existing Ceph cluster? (yes/no): " user_confirmation
if [[ "$user_confirmation" == "yes" ]]; then
  CLEANUP_CEPH="true"
else
  CLEANUP_CEPH="false"
fi

echo "Starting Ceph cluster setup..."

# Check for an existing SSH key and generate one if it does not exist
if [ ! -f "$SSH_KEY" ]; then
  echo "Generating SSH key..."
  ssh-keygen -f "$SSH_KEY" -N ''
  echo "SSH key generated successfully."
else
  echo "SSH key already exists. Skipping generation."
fi

# Copy SSH key to each host in the group
for host in "${HOST_GROUP[@]}"; do
  echo "Copying SSH key to $host..."
  ssh-copy-id -i "${SSH_KEY}.pub" -o StrictHostKeyChecking=no "$host"
done

# Clean up the existing Ceph setup if confirmed
cleanup_ceph_cluster

# Wipe OSD devices
echo "Wiping OSD devices on $OSD_HOST..."
for device in "${OSD_DEVICES[@]}"; do
  if ssh "$OSD_HOST" "sudo wipefs --all /dev/$device"; then
    echo "Wiped $device successfully."
  else
    echo "Failed to wipe $device."
  fi
done

# Run cephadm-ansible preflight playbook
echo "Running cephadm-ansible preflight setup..."
run_ansible_playbook "$CEPHADM_PREFLIGHT_PLAYBOOK" ""

# Create a temporary Ceph configuration file for initial settings
# (single OSD host: CRUSH chooses leaves at the OSD level, one replica per pool)
TEMP_CONFIG_FILE=$(mktemp)
echo "[global]
osd crush chooseleaf type = 0
osd_pool_default_size = 1" > "$TEMP_CONFIG_FILE"

# Bootstrap the Ceph cluster on the first host's IP
MON_IP="${HOST_IPS[0]}"
echo "Bootstrapping Ceph cluster with MON_IP: $MON_IP"
add_to_known_hosts "$MON_IP"
sudo cephadm bootstrap --mon-ip "$MON_IP" --cluster-network "$CLUSTER_NETWORK" \
  --ssh-user "$SSH_USER" -c "$TEMP_CONFIG_FILE" --allow-overwrite --log-to-file
rm -f "$TEMP_CONFIG_FILE"

# Distribute Cephadm SSH keys to all hosts
echo "Distributing Cephadm SSH keys to all hosts..."
run_ansible_playbook "$CEPHADM_DISTRIBUTE_SSHKEY_PLAYBOOK" "-e cephadm_ssh_user=$SSH_USER -e admin_node=$ADMIN_HOST -e cephadm_pubkey_path=$SSH_KEY.pub"

# Fetch FSID of the Ceph cluster
FSID=$(sudo ceph fsid)
echo "Ceph FSID: $FSID"

# Add and label hosts in the Ceph cluster
add_host_and_label

# Prepare and add OSDs
sleep 60
add_osds_and_wait

# Check Ceph cluster status and OSD creation
check_osd_creation

echo "Ceph cluster setup and client configuration completed successfully."
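setup_ceph_cluster.sh relies on helpers from ceph_functions.sh, such as add_host_and_label and add_osds_and_wait, whose bodies are not shown here. The sketch below is a hypothetical illustration only, not the author's implementation. It shows how two of those steps could be built on ceph orch host add, ceph orch host label add, and ceph orch daemon add osd. Setting CEPH_CMD to "echo ceph" turns the helpers into a dry run.

```shell
# Hypothetical sketches, NOT the contents of the author's ceph_functions.sh.
: "${CEPH_CMD:=sudo ceph}"

# Usage: sketch_add_host HOST ADDR [LABEL...]
sketch_add_host() {
  host=$1
  addr=$2
  shift 2
  # $CEPH_CMD is unquoted on purpose so "sudo ceph" splits into two words.
  $CEPH_CMD orch host add "$host" "$addr" || return 1
  for label in "$@"; do
    $CEPH_CMD orch host label add "$host" "$label" || return 1
  done
}

# Usage: sketch_add_osds HOST DEVICE...   (device names without /dev/)
sketch_add_osds() {
  host=$1
  shift
  for dev in "$@"; do
    $CEPH_CMD orch daemon add osd "$host:/dev/$dev" || return 1
  done
}
```

For example, `sketch_add_host test-server-storage 10.77.101.48 osd` followed by `sketch_add_osds test-server-storage sdb sdc sdd` mirrors the host label and OSD layout shown in the status output later in this article.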

Execute the Setup

# Make the script executable, then run it
chmod +x setup_ceph_cluster.sh
./setup_ceph_cluster.sh

Verification and Management

Ceph Dashboard Access

After successful installation, you’ll see:

Ceph Dashboard is now available at:

URL: https://test-server:8443/

User: admin

Password: 9m16nzu1h7

Cluster Status Commands

# Check Ceph hosts
sudo ceph orch host ls
test-server          10.77.101.47  _admin
test-server-agent    10.77.101.43
test-server-storage  10.77.101.48  osd
3 hosts in cluster

# Check Ceph status (HEALTH_WARN is expected here: osd_pool_default_size = 1 leaves pools without replicas)
sudo ceph -s
  cluster:
    id:     43d4ca77-cf91-11ee-8e5d-831aa89df15f
    health: HEALTH_WARN
            1 pool(s) have no replicas configured

  services:
    mon: 1 daemons, quorum test-server (age 7m)
    mgr: test-server.nckhts(active, since 6m)
    osd: 3 osds: 3 up (since 3m), 3 in (since 3m)

  data:
    pools:   1 pools, 1 pgs
    objects: 2 objects, 577 KiB
    usage:   872 MiB used, 149 GiB / 150 GiB avail
    pgs:     1 active+clean

# Check OSD tree
sudo ceph osd tree
ID  CLASS  WEIGHT   TYPE NAME                     STATUS  REWEIGHT  PRI-AFF
-1         0.14639  root default
-3         0.14639      host test-server-storage
 0    ssd  0.04880          osd.0                     up   1.00000  1.00000
 1    ssd  0.04880          osd.1                     up   1.00000  1.00000
 2    ssd  0.04880          osd.2                     up   1.00000  1.00000

# Check service status
sudo ceph orch ls --service-type mon
sudo ceph orch ls --service-type mgr
sudo ceph orch ls --service-type osd
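A scripted version of the "are all OSDs up?" check can compare the counts on the osd line of ceph -s, or the output of ceph osd stat. The hypothetical all_osds_up_in helper below succeeds only when the total, up, and in counts agree. It parses human-readable text, so the line format it expects may not hold on every Ceph release.

```shell
# Hypothetical check: succeed only if every OSD in a line like
#   "3 osds: 3 up (since 3m), 3 in (since 3m)"
# is both up and in. Reads the text on stdin.
all_osds_up_in() {
  awk '
    {
      for (i = 2; i <= NF; i++) {
        if ($i == "osds:") { total = $(i - 1); up = $(i + 1) }
        if ($i == "in")    { in_count = $(i - 1) }
      }
    }
    END { exit !(total > 0 && up == total && in_count == total) }'
}
```

For example: `sudo ceph osd stat | all_osds_up_in && echo "all OSDs up and in"`.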

Pool Management

# Check current pools
ceph df

# Create a new pool and initialize it for RBD use
ceph osd pool create kube 128
rbd pool init kube

# Check pool replica settings
ceph osd pool get kube size

# Modify pool settings
ceph osd pool set kube size 3

# Set placement groups (the PG autoscaler, if enabled for the pool, may adjust this later)
ceph osd pool set kube pg_num 256

# Delete pool (if needed; requires mon_allow_pool_delete = true)
# ceph osd pool delete {pool-name} {pool-name} --yes-i-really-really-mean-it

Understanding Placement Groups (PGs)

Placement Groups (PGs) are logical units for distributing and managing data within a Ceph cluster. Ceph hashes each object into a PG, and CRUSH maps each PG to a set of OSDs. Ceph tracks placement, replication, and recovery per PG rather than per object, which keeps the cluster manageable as data grows. The number of PGs per OSD determines how evenly data and load are spread.
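A common rule of thumb from older Ceph documentation targets about 100 PGs per OSD, divided by the pool's replica count and rounded to a power of two. The sketch below applies that arithmetic for a cluster dominated by a single pool. suggest_pg_num is a hypothetical helper. On recent releases the PG autoscaler usually manages pg_num for you.

```shell
# Hypothetical helper: rule-of-thumb pg_num for one dominant pool.
# Usage: suggest_pg_num OSD_COUNT REPLICA_SIZE [TARGET_PGS_PER_OSD]
suggest_pg_num() {
  osds=$1
  size=$2
  per_osd=${3:-100}
  # Raw target: (OSDs * PGs per OSD) / replicas, using integer division.
  target=$(( osds * per_osd / size ))
  if [ "$target" -lt 1 ]; then
    target=1
  fi
  # p = largest power of two that is <= target.
  p=1
  while [ $(( p * 2 )) -le "$target" ]; do
    p=$(( p * 2 ))
  done
  # Round to the nearer of p and 2p (ties keep the smaller value).
  if [ $(( target - p )) -gt $(( p * 2 - target )) ]; then
    p=$(( p * 2 ))
  fi
  echo "$p"
}
```

With the three OSDs in this cluster, `suggest_pg_num 3 3` gives 128 (100 rounds up to 128), which matches the pg_num used when creating the kube pool. `suggest_pg_num 3 1` gives 256 (300 rounds down to 256).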

Kubernetes Integration with Ceph-CSI

Install Ceph-CSI Driver

To use Ceph as a storage solution for Kubernetes Pods, install Ceph-CSI (Container Storage Interface). Ceph-CSI enables Ceph as persistent storage for Kubernetes, supporting both block and file storage.

# Add Helm repository
helm repo add ceph-csi https://ceph.github.io/csi-charts
helm repo update

# Search available charts
helm search repo ceph-csi
NAME                      CHART VERSION  APP VERSION  DESCRIPTION
ceph-csi/ceph-csi-cephfs  3.10.2         3.10.2       Container Storage Interface (CSI) driver, provi...
ceph-csi/ceph-csi-rbd     3.10.2         3.10.2       Container Storage Interface (CSI) driver, provi...

# Install RBD driver
helm install ceph-csi-rbd ceph-csi/ceph-csi-rbd --namespace ceph-csi --create-namespace

# Install CephFS driver (optional)
helm install ceph-csi-cephfs ceph-csi/ceph-csi-cephfs --namespace ceph-csi --create-namespace

Create Ceph-CSI Values Configuration

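The values file needs the Ceph cluster ID (FSID) and the monitor address. Because this cluster has only one worker node, the provisioner's replicaCount is also set to 1.

# Get Ceph cluster ID
sudo ceph fsid
afdfd487-cef1-11ee-8e5d-831aa89df15f

# Check monitor endpoint
ss -nlpt | grep 6789
LISTEN 0 512 10.77.101.47:6789 0.0.0.0:*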

Create ceph-csi-values.yaml:

csiConfig:
  - clusterID: "afdfd487-cef1-11ee-8e5d-831aa89df15f" # ceph cluster id
    monitors:
      - "10.77.101.47:6789"
provisioner:
  replicaCount: 1
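Instead of copying the FSID and monitor address by hand, you can render the values file from them. The hypothetical render_csi_values helper below writes the same three settings shown above. The monitor address is still passed explicitly.

```shell
# Hypothetical helper.
# Usage: render_csi_values FSID MON_ADDR [PROVISIONER_REPLICAS]
render_csi_values() {
  cat <<EOF
csiConfig:
  - clusterID: "$1"
    monitors:
      - "$2"
provisioner:
  replicaCount: ${3:-1}
EOF
}
```

For example: `render_csi_values "$(sudo ceph fsid)" "10.77.101.47:6789" > ceph-csi-values.yaml`.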

Install Ceph-CSI Driver with Custom Values

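# Install with custom values. Upgrading the ceph-csi-rbd release in place avoids
# a second, conflicting release; --create-namespace covers a fresh install.
helm upgrade --install ceph-csi-rbd ceph-csi/ceph-csi-rbd -n ceph-csi --create-namespace -f ceph-csi-values.yaml

# Verify installation
kubectl get all -n ceph-csi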

StorageClass Configuration

Create Secret and StorageClass

First, get the Ceph authentication information (sudo ceph auth get-key client.admin prints only the key):

sudo ceph auth list
# Look for the client.admin entry
client.admin
        key: AQC899JlcL6CKBAAQsBOJqWw/CVTQKUD+2FbyQ==
        caps: [mds] allow *
        caps: [mgr] allow *
        caps: [mon] allow *
        caps: [osd] allow *

Create the StorageClass configuration:

# ceph-csi-storageclass.yaml
apiVersion: v1
kind: Secret
metadata:
  name: csi-rbd-secret
  namespace: kube-system
stringData:
  userID: admin  # Ceph user ID without the "client." prefix (client.admin)
  userKey: "AQC899JlcL6CKBAAQsBOJqWw/CVTQKUD+2FbyQ=="  # client.admin key
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: rbd
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
    storageclass.kubesphere.io/supported-access-modes: '["ReadWriteOnce","ReadOnlyMany","ReadWriteMany"]'
provisioner: rbd.csi.ceph.com
parameters:
  clusterID: "afdfd487-cef1-11ee-8e5d-831aa89df15f"
  pool: "kube"
  imageFeatures: layering
  csi.storage.k8s.io/provisioner-secret-name: csi-rbd-secret
  csi.storage.k8s.io/provisioner-secret-namespace: kube-system
  csi.storage.k8s.io/controller-expand-secret-name: csi-rbd-secret
  csi.storage.k8s.io/controller-expand-secret-namespace: kube-system
  csi.storage.k8s.io/node-stage-secret-name: csi-rbd-secret
  csi.storage.k8s.io/node-stage-secret-namespace: kube-system
  csi.storage.k8s.io/fstype: ext4
reclaimPolicy: Delete
allowVolumeExpansion: true
mountOptions:
  - discard

Apply the configuration:

kubectl apply -f ceph-csi-storageclass.yaml

# Verify StorageClass
kubectl get storageclass
NAME            PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
rbd (default)   rbd.csi.ceph.com   Delete          Immediate           true                   21s
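The clusterID in this StorageClass must match the one in ceph-csi-values.yaml, because ceph-csi uses that ID to look up the monitors. The hypothetical distinct_cluster_ids helper offers a quick consistency check. It prints each distinct clusterID value found in the given files, so more than one line means they disagree. It is a line-based sed match, not a YAML parser.

```shell
# Hypothetical helper: print each distinct clusterID value (quotes and
# trailing comments stripped) found in the given files.
# Usage: distinct_cluster_ids FILE...
distinct_cluster_ids() {
  sed -n 's/.*clusterID:[[:space:]]*"\{0,1\}\([^"#[:space:]]*\)"\{0,1\}.*/\1/p' "$@" | sort -u
}
```

For example: `distinct_cluster_ids ceph-csi-values.yaml ceph-csi-storageclass.yaml`.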

Testing the Integration

Deploy Test Pod with Persistent Volume

# test-pod.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ceph-rbd-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: rbd
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-using-ceph-rbd
spec:
  containers:
    - name: my-container
      image: nginx
      volumeMounts:
        - mountPath: "/var/lib/www/html"
          name: mypd
  volumes:
    - name: mypd
      persistentVolumeClaim:
        claimName: ceph-rbd-pvc

Deploy and verify:

# Apply test configuration
kubectl apply -f test-pod.yaml

# Verify resources
kubectl get pod,pv,pvc
NAME                     READY   STATUS    RESTARTS   AGE
pod/pod-using-ceph-rbd   1/1     Running   0          16s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                  STORAGECLASS   REASON   AGE
persistentvolume/pvc-83fd673e-077c-4d24-b9c9-290118586bd3   1Gi        RWO            Delete           Bound    default/ceph-rbd-pvc   rbd                     16s

NAME                                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/ceph-rbd-pvc   Bound    pvc-83fd673e-077c-4d24-b9c9-290118586bd3   1Gi        RWO            rbd            16s
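Here the PVC bound within seconds, but provisioning can take longer on a busy cluster. The hypothetical wait_for helper sketched below polls a command until it succeeds or a timeout expires. It can also replace fixed delays such as the sleep 60 in setup_ceph_cluster.sh.

```shell
# Hypothetical retry helper.
# Usage: wait_for TIMEOUT_SECONDS INTERVAL_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND every INTERVAL seconds until it succeeds; returns 1 once
# TIMEOUT seconds of waiting have elapsed without success.
wait_for() {
  timeout=$1
  interval=$2
  shift 2
  waited=0
  while ! "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${waited}s: $*" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}
```

For example: `wait_for 120 5 sh -c '[ "$(kubectl get pvc ceph-rbd-pvc -o jsonpath="{.status.phase}")" = Bound ]'`.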

Best Practices and Recommendations

Infrastructure as Code Benefits

- Automation: Cephadm-ansible eliminates manual configuration steps

- Repeatability: Scripts ensure consistent deployments across environments

- Version Control: Infrastructure configurations can be versioned and tracked

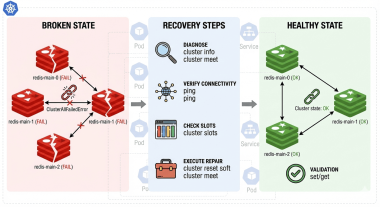

- Disaster Recovery: Automated procedures enable rapid cluster recovery

Production Considerations

- Multi-Node Setup: Use multiple MON and MGR nodes for high availability

- Storage Replication: Configure appropriate replication levels for data durability

- Monitoring: Implement comprehensive monitoring for cluster health

- Backup Strategy: Establish regular backup procedures for critical data

Troubleshooting Tips

- Log Analysis: Check cephadm and Ansible logs for deployment issues

- Network Connectivity: Ensure proper network configuration between nodes

- Disk Preparation: Verify disk wiping and LVM cleanup before deployment

- Service Dependencies: Ensure container runtime is properly configured
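When a deployment stalls, the cluster's own view is usually the quickest place to start. The hypothetical collect_ceph_diagnostics helper below runs a few read-only status commands in sequence: ceph health detail, ceph orch ps, ceph orch device ls, and ceph log last cephadm. CEPH_CMD defaults to sudo ceph. You can override it, for example with "sudo cephadm shell -- ceph" on a host without ceph-common installed.

```shell
# Hypothetical helper: print a few read-only Ceph status reports in sequence.
: "${CEPH_CMD:=sudo ceph}"

collect_ceph_diagnostics() {
  for sub in "health detail" "orch ps" "orch device ls" "log last cephadm"; do
    echo "===== ceph $sub ====="
    # $CEPH_CMD and $sub are left unquoted on purpose so they split into words.
    $CEPH_CMD $sub || echo "(command failed: ceph $sub)"
  done
}
```

For example: `collect_ceph_diagnostics > ceph-diag.txt 2>&1`.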

Conclusion

Cephadm-ansible provides a powerful automation framework for Ceph cluster deployment, significantly simplifying the complex process of distributed storage setup. The integration with Kubernetes through Ceph-CSI creates a robust storage solution for cloud-native applications.

Key Achievements:

- Automated Deployment: Complete Ceph cluster automation using scripts

- Infrastructure as Code: Terraform-based VM provisioning

- Kubernetes Integration: Seamless storage integration via Ceph-CSI

- Scalable Foundation: An architecture that can grow by adding MON, MGR, and OSD hosts, with the Ceph Dashboard for monitoring

Operational Benefits:

- Reduced Manual Work: Automated host setup, SSH key distribution, OSD disk cleanup

- Cluster Management: Automated purging and labeling operations

- Storage Integration: Native Kubernetes persistent storage support

- Scalability: Foundation for enterprise-grade storage automation

Future Enhancements:

- Implement multi-cluster federation

- Add automated backup and disaster recovery

- Integrate with CI/CD pipelines for infrastructure updates

- Develop custom monitoring and alerting solutions

Configuring the cluster directly through cephadm and Ansible, following the OSD configuration flow, and wiring up the StorageClass provides hands-on experience that carries over to automating enterprise-level storage operations.

“Mastering automation tools like cephadm-ansible is essential for building reliable, scalable infrastructure in modern cloud environments.”
