
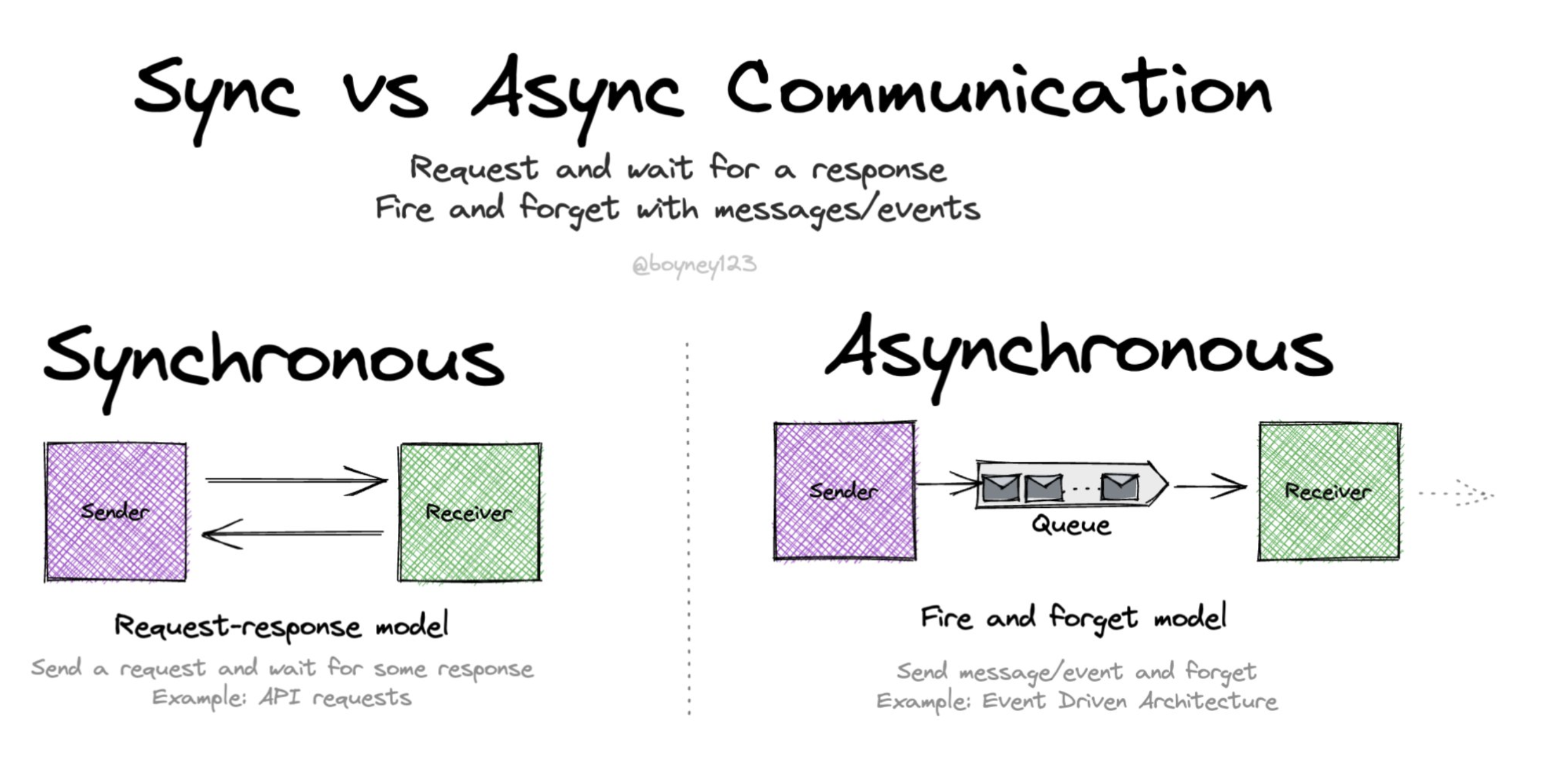

Synchronous and Asynchronous Processing

A comprehensive guide to sync and async programming with Python examples

Overview

Understanding synchronous and asynchronous processing is fundamental in software development, especially in how programs handle tasks.

Synchronous and asynchronous processing represent two distinct approaches to executing operations in software. These paradigms influence how applications handle I/O operations, CPU-intensive tasks, and user interactions. In an increasingly connected world with growing demands for responsive applications, understanding when and how to apply each approach is critical for developers.

Synchronous Processing

Synchronous processing follows a sequential execution model where each operation must complete before the next one begins. This approach creates a straightforward, predictable flow of execution.

Key Characteristics

- Blocking: Each operation blocks the caller until it completes

- Linear Execution: Sequential order

- Simplicity: Easier to program and debug

- Use Cases: File operations, database transactions

- Single-threaded by default in many languages

In synchronous processing, the program execution flow is straightforward:

- Task A begins execution

- Program waits for Task A to complete

- Task B begins only after Task A finishes

- Program waits for Task B to complete

- And so on...

During waiting periods (like I/O operations), the thread is blocked and cannot perform other operations.
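A minimal Python sketch of this flow follows. It uses `time.sleep` as a stand-in for a blocking I/O call, which is an assumption for illustration. The hypothetical `run_task` helper records when each task starts and finishes, so you can check that Task B only starts after Task A has finished.

```python
import time


def run_task(name, duration, log):
    """Hypothetical blocking task: time.sleep stands in for blocking I/O."""
    start = time.perf_counter()
    time.sleep(duration)  # the calling thread can do nothing else while it waits
    log[name] = (start, time.perf_counter())
    return name


def main():
    log = {}
    overall_start = time.perf_counter()
    run_task("A", 0.2, log)  # Task A runs to completion...
    run_task("B", 0.2, log)  # ...before Task B even starts
    total = time.perf_counter() - overall_start
    return log, total


if __name__ == "__main__":
    log, total = main()
    print(f"B started after A finished: {log['B'][0] >= log['A'][1]}")
    print(f"Total time: {total:.2f}s")  # roughly the sum of both durations
```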

Synchronous Examples

Python Synchronous Example

```python
def read_file(file_name):
    with open(file_name, 'r') as file:
        print(f"Reading {file_name}...")
        data = file.read()
        print(f"Finished reading {file_name}.")
        return data


def process_data(data):
    print("Processing data...")
    processed_data = data.upper()
    print("Data processing completed.")
    return processed_data


def main():
    # Sequential execution - each step must complete before the next begins
    data1 = read_file('somaz1.txt')
    processed1 = process_data(data1)
    data2 = read_file('somaz2.txt')
    processed2 = process_data(data2)
    print("All tasks completed.")


if __name__ == "__main__":
    main()
```

JavaScript Synchronous Example

```javascript
function readFileSync(fileName) {
  console.log(`Reading ${fileName}...`);
  // Synchronous read: blocks until the whole file has been loaded
  const content = require('fs').readFileSync(fileName, 'utf8');
  console.log(`Finished reading ${fileName}.`);
  return content;
}

function processData(data) {
  console.log("Processing data...");
  // Simulating data processing
  const processed = data.toUpperCase();
  console.log("Data processing completed.");
  return processed;
}

function main() {
  // Sequential execution
  const data1 = readFileSync('somaz1.txt');
  const processed1 = processData(data1);
  const data2 = readFileSync('somaz2.txt');
  const processed2 = processData(data2);
  console.log("All tasks completed.");
}

main();
```

Asynchronous Processing

Asynchronous processing allows multiple operations to be in progress simultaneously without waiting for each other to complete. This non-blocking approach can significantly improve performance and responsiveness, especially for I/O-bound applications.

Key Characteristics

- Non-blocking: The caller does not wait for an operation to finish before moving on

- Concurrent Execution: Operations overlap in time (not necessarily in parallel)

- Complexity: More challenging to manage

- Use Cases: API calls, UI operations, I/O tasks

- Relies on callbacks, promises, or async/await patterns

Asynchronous processing typically follows these patterns:

- Task A begins execution

- Instead of waiting, the program initiates Task B

- When Task A completes, a callback, promise, or event handler is triggered

- Results from Task A are processed while other tasks continue

This approach prevents blocking and allows the application to remain responsive during time-consuming operations.
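The same two tasks can be sketched with Python's `asyncio`. Here `asyncio.sleep` stands in for awaiting non-blocking I/O (an assumption for illustration), and `add_done_callback` plays the role of the completion callback described above. Task B is started before Task A finishes, so the total time is close to the longer task rather than the sum.

```python
import asyncio
import time


async def run_task(name, duration, log):
    """Hypothetical task: asyncio.sleep stands in for awaiting non-blocking I/O."""
    start = time.perf_counter()
    await asyncio.sleep(duration)  # yields to the event loop instead of blocking
    log[name] = (start, time.perf_counter())
    return name


async def main():
    log = {}
    completed = []
    overall_start = time.perf_counter()
    # Start Task A and register a callback that fires when it completes.
    task_a = asyncio.create_task(run_task("A", 0.2, log))
    task_a.add_done_callback(lambda task: completed.append(task.result()))
    # Instead of waiting for A, start Task B immediately.
    task_b = asyncio.create_task(run_task("B", 0.2, log))
    await asyncio.gather(task_a, task_b)
    total = time.perf_counter() - overall_start
    return log, completed, total


if __name__ == "__main__":
    log, completed, total = asyncio.run(main())
    print(f"B started before A finished: {log['B'][0] < log['A'][1]}")
    print(f"Callback saw: {completed}")
    print(f"Total time: {total:.2f}s")  # roughly the longer duration, not the sum
```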

Asynchronous Examples

Python Asynchronous Example with asyncio

```python
import asyncio
import time

import aiofiles  # third-party package: pip install aiofiles


async def read_file(file_name):
    print(f"Starting to read {file_name}...")
    async with aiofiles.open(file_name, 'r') as file:
        content = await file.read()
    print(f"Finished reading {file_name}.")
    return content


async def process_data(data):
    print("Processing data...")
    # Simulate processing time
    await asyncio.sleep(1)
    processed_data = data.upper()
    print("Data processing completed.")
    return processed_data


async def handle_file(file_name):
    data = await read_file(file_name)
    processed = await process_data(data)
    return processed


async def main():
    start_time = time.time()
    # Concurrent execution of multiple tasks
    tasks = [
        handle_file('somaz1.txt'),
        handle_file('somaz2.txt'),
    ]
    # Wait for all tasks to complete
    results = await asyncio.gather(*tasks)
    end_time = time.time()
    print(f"All tasks completed in {end_time - start_time:.2f} seconds.")
    return results


if __name__ == "__main__":
    asyncio.run(main())
```

JavaScript Asynchronous Example with Promises

```javascript
const fs = require('fs').promises;

function readFileAsync(fileName) {
  console.log(`Starting to read ${fileName}...`);
  return fs.readFile(fileName, 'utf8')
    .then(content => {
      console.log(`Finished reading ${fileName}.`);
      return content;
    });
}

function processData(data) {
  console.log("Processing data...");
  return new Promise(resolve => {
    // Simulate processing time
    setTimeout(() => {
      const processed = data.toUpperCase();
      console.log("Data processing completed.");
      resolve(processed);
    }, 1000);
  });
}

async function handleFile(fileName) {
  const data = await readFileAsync(fileName);
  const processed = await processData(data);
  return processed;
}

async function main() {
  const startTime = Date.now();
  try {
    // Concurrent execution using Promise.all
    const results = await Promise.all([
      handleFile('somaz1.txt'),
      handleFile('somaz2.txt')
    ]);
    const endTime = Date.now();
    console.log(`All tasks completed in ${(endTime - startTime) / 1000} seconds.`);
    return results;
  } catch (error) {
    console.error("Error:", error);
  }
}

main();
```

Concurrency Models

Understanding different concurrency models helps provide context for how synchronous and asynchronous processing are implemented across languages and frameworks.

Thread-based Concurrency

Thread-based concurrency involves multiple threads of execution within a process, each with its own stack but sharing the same heap memory.

Characteristics:

- True parallelism on multi-core systems

- Operating system-managed scheduling

- Shared memory introduces concurrency challenges

- Requires synchronization mechanisms (locks, mutexes)

Languages with Strong Thread Support:

- Java

- C#

- C++

Example (Java):

```java
public class ThreadExample {
    public static void main(String[] args) {
        Thread thread1 = new Thread(() -> {
            System.out.println("Thread 1 started");
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            System.out.println("Thread 1 completed");
        });

        Thread thread2 = new Thread(() -> {
            System.out.println("Thread 2 started");
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            System.out.println("Thread 2 completed");
        });

        thread1.start();
        thread2.start();

        try {
            thread1.join();
            thread2.join();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }

        System.out.println("All threads completed");
    }
}
```
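Because threads share heap memory, the "requires synchronization mechanisms" point above matters as soon as threads update the same data. Below is a minimal Python sketch using `threading.Lock`; the `Counter` class and thread counts are illustrative assumptions. Note that in the default CPython build the GIL prevents pure-Python code from running on several cores at once, but the read-modify-write in `value += 1` can still be interleaved between threads, so the lock is still needed.

```python
import threading


class Counter:
    """Shared state: every thread sees the same object on the heap."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # The lock turns the read-modify-write into one critical section,
        # so two threads cannot both read the same old value.
        with self._lock:
            self.value += 1


def worker(counter, n):
    for _ in range(n):
        counter.increment()


def main(num_threads=4, per_thread=10_000):
    counter = Counter()
    threads = [
        threading.Thread(target=worker, args=(counter, per_thread))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread, like thread1.join() in the Java example
    return counter.value


if __name__ == "__main__":
    print(main())
```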

Event-Loop Concurrency

Event-loop concurrency is built around a single-threaded event loop that processes events and callbacks. This model is particularly popular in JavaScript environments and modern asynchronous frameworks.

Characteristics:

- Single-threaded at its core

- Non-blocking I/O operations

- Event-driven architecture

- Callbacks or promises for asynchronous operations

- No true parallelism (within a single event loop)

Languages/Environments:

- JavaScript (Node.js, Browsers)

- Python (asyncio)

- Dart

Example (Node.js):

```javascript
const fs = require('fs');

console.log("Starting program");

// Non-blocking file read that registers a callback
fs.readFile('somaz1.txt', 'utf8', (err, data1) => {
  if (err) {
    console.error("Error reading file:", err);
    return;
  }
  console.log("File 1 read complete");
  // Process data after file is read
  setTimeout(() => {
    console.log("Processing file 1 data");
  }, 100);
});

// This executes immediately without waiting for the file read
fs.readFile('somaz2.txt', 'utf8', (err, data2) => {
  if (err) {
    console.error("Error reading file:", err);
    return;
  }
  console.log("File 2 read complete");
});

console.log("Continuing program execution");
```
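For comparison, here is a rough Python `asyncio` analogue of the Node.js example. Instead of reading real files, `loop.call_later` schedules callbacks after a short delay to stand in for I/O completion (an assumption for illustration). The log shows that the program keeps running after registering the callbacks, that they fire in completion order, and that everything runs on one thread.

```python
import asyncio
import threading


def main():
    events = []
    thread_ids = set()

    def on_done(label):
        # Callbacks run on the event loop's single thread, one at a time.
        thread_ids.add(threading.get_ident())
        events.append(label)

    async def program():
        loop = asyncio.get_running_loop()
        events.append("Starting program")
        # Register callbacks instead of waiting; the delays stand in for I/O time.
        loop.call_later(0.05, on_done, "File 1 read complete")
        loop.call_later(0.02, on_done, "File 2 read complete")
        events.append("Continuing program execution")
        await asyncio.sleep(0.1)  # keep the loop alive so both callbacks can fire

    asyncio.run(program())
    thread_ids.add(threading.get_ident())  # the thread that ran the loop
    return events, thread_ids


if __name__ == "__main__":
    events, thread_ids = main()
    print("\n".join(events))
    print(f"Threads used: {len(thread_ids)}")
```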

Actor Model

The Actor model treats “actors” as the universal primitives of concurrent computation, with each actor processing messages sequentially but multiple actors operating concurrently.

Characteristics:

- Actors encapsulate state and behavior

- Message-passing for communication

- No shared state between actors

- Supervision hierarchies for fault tolerance

Languages/Frameworks:

- Erlang/Elixir

- Akka (JVM)

- Orleans (.NET)
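To make the model concrete, below is a minimal actor sketch written with Python's `asyncio` rather than Erlang, Akka, or Orleans. The hypothetical `CounterActor` keeps private state, exposes only a mailbox (an `asyncio.Queue`), and processes one message at a time, so many concurrent senders never touch its state directly. It deliberately omits supervision and distribution.

```python
import asyncio


class CounterActor:
    """Minimal actor sketch: private state plus a mailbox handled sequentially."""

    def __init__(self):
        self._count = 0                  # private state, never shared
        self._mailbox = asyncio.Queue()  # the only way in is a message

    async def send(self, message):
        await self._mailbox.put(message)

    async def run(self):
        while True:
            kind, reply = await self._mailbox.get()  # one message at a time
            if kind == "increment":
                self._count += 1
            elif kind == "get":
                reply.set_result(self._count)  # answer via a future, not shared memory
            elif kind == "stop":
                break


async def main():
    actor = CounterActor()
    runner = asyncio.create_task(actor.run())
    # Many concurrent senders; the actor still processes messages in order.
    await asyncio.gather(*(actor.send(("increment", None)) for _ in range(100)))
    reply = asyncio.get_running_loop().create_future()
    await actor.send(("get", reply))
    count = await reply
    await actor.send(("stop", None))
    await runner
    return count


if __name__ == "__main__":
    print(asyncio.run(main()))
```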

Synchronous vs Asynchronous Comparison

| Feature | Synchronous | Asynchronous |
|---|---|---|
| Execution | Sequential | Concurrent (overlapping, not necessarily parallel) |
| Blocking | Yes | No |
| Complexity | Simple | Complex |
| Use Case | Sequential, dependent tasks | Independent tasks |
| Performance | Lower throughput for I/O-bound work | Higher throughput for I/O-bound work |
| Resource Utilization | Inefficient for I/O | Efficient for I/O |
| Error Handling | Straightforward | Complex |
| Debugging | Easier | More difficult |
| Memory Usage | Potentially higher (one thread per concurrent task) | Generally lower |

When to Use What

Use Synchronous When:

- Tasks must complete in order

- Each task depends on previous results

- Simple, straightforward processes

- CPU-bound operations

- Predictability is more important than speed

Use Asynchronous When:

- Tasks can run independently

- Workloads are dominated by I/O (network, disk)

- Higher throughput is needed for I/O-heavy workloads

- UI responsiveness required

- Scaling to handle many concurrent operations

Key Decision Factors:

- Task Independence: Can tasks run independently, or does each depend on the previous task's result?

- I/O vs CPU Bound: Are operations primarily waiting on I/O (favor async) or performing computation (may favor sync)?

- Response Time Requirements: Is responsiveness critical for your application?

- Development Complexity: Is your team comfortable with asynchronous programming concepts?

- Error Handling: How complex is error recovery in your application flow?

- Scalability Needs: Does your application need to handle many concurrent operations?

Common Challenges and Solutions

Asynchronous Challenges

Callback Hell

One of the earliest patterns for asynchronous programming was callbacks, which could lead to deeply nested, hard-to-maintain code:

```javascript
// Callback hell example
getData(function(a) {
  getMoreData(a, function(b) {
    getEvenMoreData(b, function(c) {
      getYetEvenMoreData(c, function(d) {
        getFinalData(d, function(finalData) {
          console.log(finalData);
        });
      });
    });
  });
});
```

Solutions:

- Promises (JavaScript)

- Async/await syntax

- Functional composition

- Libraries for managing async flow
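As an illustration of the async/await solution, the nested chain above can be flattened in Python. The five functions are hypothetical stand-ins for `getData`, `getMoreData`, and so on; each awaits `asyncio.sleep(0)` to simulate I/O and simply adds 1 to its input. Each step still depends on the previous result, but the code reads top to bottom, and a single `try`/`except` around it could handle an error from any step.

```python
import asyncio


# Hypothetical async steps; asyncio.sleep(0) simulates awaiting I/O.
async def get_data():
    await asyncio.sleep(0)
    return 1


async def get_more_data(a):
    await asyncio.sleep(0)
    return a + 1


async def get_even_more_data(b):
    await asyncio.sleep(0)
    return b + 1


async def get_yet_even_more_data(c):
    await asyncio.sleep(0)
    return c + 1


async def get_final_data(d):
    await asyncio.sleep(0)
    return d + 1


async def main():
    # Same dependency chain as the callback version, without the nesting.
    a = await get_data()
    b = await get_more_data(a)
    c = await get_even_more_data(b)
    d = await get_yet_even_more_data(c)
    final_data = await get_final_data(d)
    print(final_data)
    return final_data


if __name__ == "__main__":
    asyncio.run(main())
```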

Race Conditions

Asynchronous operations can complete in unpredictable order, potentially causing race conditions:

```python
# Potential race condition in async code
async def update_counter():
    value = await get_counter()   # Read
    await set_counter(value + 1)  # Update

# If two functions call this concurrently, they might both read the same value
# before either has updated it, leading to only one increment instead of two
```

Solutions:

- Locks and semaphores

- Atomic operations

- Transaction-based approaches

- Event sourcing patterns
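The lock-based solution can be sketched with `asyncio.Lock`. The `Store` class below is a hypothetical stand-in for the `get_counter`/`set_counter` calls, with `asyncio.sleep(0)` simulating an I/O round trip so that other tasks can run between the read and the write. Running 100 concurrent updates without the lock loses increments; with the lock held across the read-modify-write, all 100 land.

```python
import asyncio


class Store:
    """Hypothetical async storage; asyncio.sleep(0) simulates an I/O round trip."""

    def __init__(self):
        self.value = 0

    async def get_counter(self):
        await asyncio.sleep(0)
        return self.value

    async def set_counter(self, value):
        await asyncio.sleep(0)
        self.value = value


async def update_counter(store):
    value = await store.get_counter()   # read
    await store.set_counter(value + 1)  # write: other tasks may have run in between


async def update_counter_locked(store, lock):
    async with lock:  # only one task at a time runs the read-modify-write
        value = await store.get_counter()
        await store.set_counter(value + 1)


async def main(n=100):
    unsafe, safe = Store(), Store()
    await asyncio.gather(*(update_counter(unsafe) for _ in range(n)))
    lock = asyncio.Lock()
    await asyncio.gather(*(update_counter_locked(safe, lock) for _ in range(n)))
    return unsafe.value, safe.value


if __name__ == "__main__":
    unsafe_total, safe_total = asyncio.run(main())
    print(f"Without lock: {unsafe_total}, with lock: {safe_total}")
```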

Synchronous Challenges

Blocking Performance

Long-running operations in synchronous code can freeze entire applications:

```python
def process_large_dataset():
    data = load_massive_dataset()             # Blocks for potentially minutes
    results = perform_complex_analysis(data)  # Also time-consuming
    return results

# During execution, the application is completely unresponsive
```

Solutions:

- Background threads

- Process pools

- Asynchronous processing

- Task queues and workers
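One way to combine the first and third solutions in Python is `asyncio.to_thread` (Python 3.9+), which runs a blocking function in a worker thread while the event loop keeps serving other work. In the sketch below, `process_large_dataset` is a hypothetical stand-in that just sleeps, and the `heartbeat` task represents the rest of the application; it keeps ticking while the blocking call runs.

```python
import asyncio
import time


def process_large_dataset():
    """Hypothetical stand-in for the blocking function above."""
    time.sleep(0.5)  # blocking call
    return "results"


async def heartbeat(ticks):
    # Represents the rest of the application (UI, other requests) staying responsive.
    while True:
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.05)


async def main():
    ticks = []
    hb = asyncio.create_task(heartbeat(ticks))
    # Offload the blocking work to a thread; the event loop is not blocked.
    result = await asyncio.to_thread(process_large_dataset)
    hb.cancel()
    try:
        await hb
    except asyncio.CancelledError:
        pass
    return result, len(ticks)


if __name__ == "__main__":
    result, tick_count = asyncio.run(main())
    print(f"{result}; heartbeat ticked {tick_count} times during the blocking call")
```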

Real-World Applications

Web Servers

Synchronous Web Server (Traditional Model):

- One thread (or process) per connection

- Simple to implement and reason about

- Limited scalability due to thread overhead

- Examples: Early versions of Apache HTTP Server

Asynchronous Web Server:

- Event-driven architecture

- Can handle thousands of concurrent connections

- Lower memory footprint

- Examples: Nginx, Node.js
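The event-driven model can be sketched with Python's `asyncio.start_server`; this is an illustration of the idea, not how Nginx or Node.js are implemented. A single thread serves every connection: each client gets a lightweight coroutine instead of an OS thread. The handler's `asyncio.sleep(0.1)` is an assumed stand-in for slow backend work, and 50 clients are served in roughly the time of one.

```python
import asyncio


async def handle_client(reader, writer):
    # One coroutine per connection instead of one OS thread per connection.
    data = await reader.readline()
    await asyncio.sleep(0.1)  # simulated slow backend work; other clients keep being served
    writer.write(data.upper())
    await writer.drain()
    writer.close()
    await writer.wait_closed()


async def client(port, message):
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(message.encode() + b"\n")
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply.decode().strip()


async def main(num_clients=50):
    # Port 0 lets the OS pick a free port.
    server = await asyncio.start_server(handle_client, "127.0.0.1", 0)
    port = server.sockets[0].getsockname()[1]
    loop = asyncio.get_running_loop()
    async with server:
        start = loop.time()
        replies = await asyncio.gather(
            *(client(port, f"hello {i}") for i in range(num_clients))
        )
        elapsed = loop.time() - start
    return replies, elapsed


if __name__ == "__main__":
    replies, elapsed = asyncio.run(main())
    print(f"{len(replies)} clients served in {elapsed:.2f}s")
```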

Database Access

Synchronous Database Access:

```python
# Synchronous database query
import psycopg2


def get_user(user_id):
    conn = psycopg2.connect("dbname=mydb user=postgres")
    cur = conn.cursor()
    cur.execute("SELECT * FROM users WHERE id = %s", (user_id,))
    user = cur.fetchone()
    cur.close()
    conn.close()
    return user
```

Asynchronous Database Access:

```python
# Asynchronous database query
import asyncpg
import asyncio


async def get_user(user_id):
    conn = await asyncpg.connect("postgresql://postgres@localhost/mydb")
    user = await conn.fetchrow("SELECT * FROM users WHERE id = $1", user_id)
    await conn.close()
    return user
```

Mobile Applications

Synchronous UI Updates (Bad Practice):

```java
// This would freeze the UI
public void onButtonClick() {
    String data = downloadLargeFile(); // Blocks UI thread
    updateUI(data);
}
```

Asynchronous UI Updates (Good Practice):

```java
// This keeps the UI responsive
public void onButtonClick() {
    showLoadingIndicator();
    CompletableFuture.supplyAsync(() -> downloadLargeFile())
        .thenAccept(data -> {
            runOnUiThread(() -> {
                hideLoadingIndicator();
                updateUI(data);
            });
        });
}
```

Performance Considerations

I/O-Bound vs CPU-Bound Tasks

Understanding the nature of your tasks is crucial for choosing the right concurrency model:

I/O-Bound Tasks: Operations that spend most of their time waiting for input/output completion (file, network, database)

- Typically benefit greatly from asynchronous approaches

- Example: Web servers handling many client connections

CPU-Bound Tasks: Operations that spend most of their time performing calculations

- May benefit more from multi-threading or multi-processing

- Example: Image processing, machine learning
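A short Python sketch shows why asynchronous code alone does not help CPU-bound work: a coroutine that computes without ever hitting an `await` holds the event loop until it returns. In the example, a hypothetical `heartbeat` task is ready to tick every 10 ms, yet records no ticks while `cpu_bound` is running. For such work, offloading to threads or processes (for example with `concurrent.futures`) is the usual remedy.

```python
import asyncio
import time


async def heartbeat(ticks):
    while True:
        ticks.append(time.perf_counter())
        await asyncio.sleep(0.01)


async def cpu_bound(n):
    # No await inside: the coroutine holds the event loop until it returns.
    return sum(i * i for i in range(n))


async def main(n=2_000_000):
    ticks = []
    hb = asyncio.create_task(heartbeat(ticks))
    await asyncio.sleep(0)  # let the heartbeat record its first tick
    start = time.perf_counter()
    result = await cpu_bound(n)
    end = time.perf_counter()
    hb.cancel()
    try:
        await hb
    except asyncio.CancelledError:
        pass
    ticks_during = sum(1 for t in ticks if start <= t <= end)
    return result, ticks_during


if __name__ == "__main__":
    result, ticks_during = asyncio.run(main())
    print(f"Heartbeat ticks during CPU work: {ticks_during}")
```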

Consider a web server handling 1000 requests, each requiring a 100 ms database query:

Synchronous Approach:

- Sequential processing: 1000 × 100 ms = 100 seconds total time

- One request processed at a time

- Simple implementation, high latency, low throughput

Asynchronous Approach:

- Concurrent processing: ~100 ms total time (plus some overhead), assuming the database can serve all 1000 queries at once; in practice a connection pool limits how many run simultaneously

- All 1000 requests initiated almost simultaneously

- More complex implementation, low latency, high throughput
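The arithmetic above can be checked with a scaled-down simulation. The sketch assumes 20 requests of 50 ms each (instead of 1000 × 100 ms) and uses `time.sleep`/`asyncio.sleep` as stand-ins for the query. An `asyncio.Semaphore` models a connection pool of 5, an illustrative assumption: with a pool, the concurrent time is roughly (requests / pool size) × latency instead of a single latency.

```python
import asyncio
import time

LATENCY = 0.05     # stand-in for the 100 ms query, scaled down
NUM_REQUESTS = 20  # stand-in for 1000 requests, scaled down


def query_sync():
    time.sleep(LATENCY)  # blocking "database query"


async def query_async(semaphore=None):
    if semaphore is None:
        await asyncio.sleep(LATENCY)
    else:
        async with semaphore:  # at most pool_size queries in flight
            await asyncio.sleep(LATENCY)


def run_sync():
    start = time.perf_counter()
    for _ in range(NUM_REQUESTS):
        query_sync()
    return time.perf_counter() - start


async def run_async(pool_size=None):
    semaphore = asyncio.Semaphore(pool_size) if pool_size else None
    start = time.perf_counter()
    await asyncio.gather(*(query_async(semaphore) for _ in range(NUM_REQUESTS)))
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"Sequential:            {run_sync():.2f}s")                # about 20 x 0.05 s
    print(f"Concurrent, pool of 5: {asyncio.run(run_async(5)):.2f}s")  # about 4 x 0.05 s
    print(f"Concurrent, unbounded: {asyncio.run(run_async()):.2f}s")   # about 0.05 s
```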

Benchmarking Example

Here’s a simple benchmark comparing synchronous vs asynchronous HTTP requests in Python:

```python
import asyncio
import time

import aiohttp
import requests


# Synchronous version: each request blocks until its response arrives
def fetch_sync(urls):
    start = time.time()
    results = []
    for url in urls:
        response = requests.get(url)
        results.append(response.text)
    end = time.time()
    print(f"Synchronous: {end - start:.2f} seconds")
    return results


# Asynchronous version
async def fetch_one(session, url):
    # Read the body inside the context manager so the connection is released
    async with session.get(url) as response:
        return await response.text()


async def fetch_async(urls):
    start = time.time()
    async with aiohttp.ClientSession() as session:
        # All requests are in flight at the same time
        results = await asyncio.gather(*(fetch_one(session, url) for url in urls))
    end = time.time()
    print(f"Asynchronous: {end - start:.2f} seconds")
    return results


if __name__ == "__main__":
    # Test with multiple URLs
    urls = ["https://example.com"] * 10
    # Run synchronous version
    sync_results = fetch_sync(urls)
    # Run asynchronous version
    async_results = asyncio.run(fetch_async(urls))

# Sample output (timings vary with network conditions):
# Synchronous: 5.83 seconds
# Asynchronous: 0.62 seconds
```
