Understanding Processes and Threads

A comprehensive guide to processes, threads, and their differences

Overview

Understanding the differences between processes and threads is crucial for software development. Let’s explore these concepts in detail.

Operating systems manage resources and provide services for computer programs. Two fundamental concepts in operating system design are processes and threads, which enable concurrent execution of tasks. This article provides a comprehensive understanding of these concepts, their differences, and how they interact with the CPU and memory.

Program vs Process

A program is a set of instructions stored on disk, while a process is a running instance of a program. When you execute a program, the operating system loads it into memory, allocates resources, and creates a process to run it.

Key Differences:

- Program is static, Process is dynamic

- Program is code, Process is code in execution

- Multiple processes can run from the same program

- Programs exist as files on storage, processes exist in memory
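
To make the "one program, many processes" point concrete, here is a minimal sketch that starts the same tiny program several times and collects the process ID each running instance reports. The `PROGRAM` string and the `run_instances` helper are hypothetical names chosen for this illustration.

```python
import subprocess
import sys

# Hypothetical one-line "program": each running instance prints its own PID.
PROGRAM = "import os; print(os.getpid())"


def run_instances(count):
    """Start `count` processes from the same program and return their PIDs."""
    procs = [
        subprocess.Popen(
            [sys.executable, "-c", PROGRAM],
            stdout=subprocess.PIPE,
            text=True,
        )
        for _ in range(count)
    ]
    pids = []
    for proc in procs:
        out, _ = proc.communicate()  # wait for the process to exit and read its output
        pids.append(int(out.strip()))
    return pids


if __name__ == "__main__":
    # One program on disk, three separate processes in memory.
    print(run_instances(3))
```

Each call prints different numbers, but within one call the PIDs are always distinct, because every instance is its own process.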

Process Components:

- Code segment (text section)

- Data segment (initialized data)

- BSS segment (uninitialized data)

- Heap (dynamic memory allocation)

- Stack (function call data, local variables)

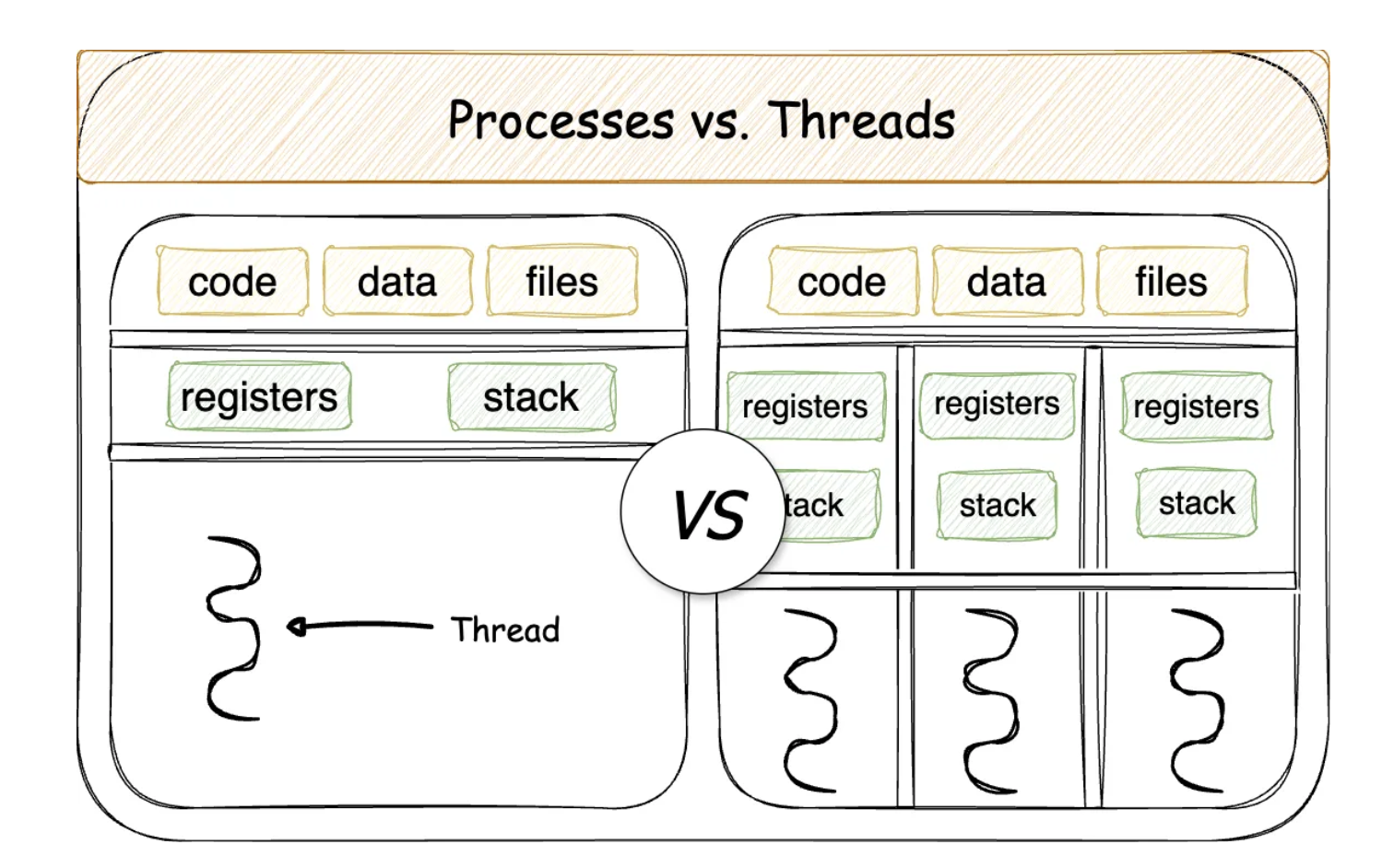

Process vs Thread

Process

A process is an independent execution unit with its own memory space and system resources. The operating system manages processes, providing isolation and protection between them.

Characteristics:

- Independent entity with its own memory space

- Provides isolation and security

- Has its own process control block (PCB)

- Resource-intensive to create and manage

- Key concept: Isolation

Process Control Block (PCB):

- Process ID (PID)

- Process state

- Program counter

- CPU registers

- Memory management information

- I/O status information

- Accounting information

Thread

A thread is a lightweight execution unit that exists within a process. Multiple threads in the same process share memory and resources but execute independently.

Characteristics:

- Lightweight unit within a process

- Shares memory with other threads in the same process

- Has its own thread control block (TCB)

- Faster to create and destroy than processes

- Key concept: Concurrency

Thread Control Block (TCB):

- Thread ID

- Program counter

- Register set

- Stack pointer (each thread has its own stack)

Shared Resources (with other threads in the same process):

- Code section

- Data section

- Open files

- Signal handlers (signal dispositions are set per process)

- Heap memory
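
The difference between shared and separate memory can be sketched in a few lines. In this illustrative example (the names `shared`, `append_item`, and `demo` are made up for the sketch), a thread and a forked child process each append to the same list. It assumes a POSIX system for the `os.fork` part, which is skipped elsewhere.

```python
import os
import threading

shared = []  # lives in this process's memory


def append_item(tag):
    shared.append(tag)


def demo():
    """Return the parent's view of `shared` after a thread and a child process each append."""
    shared.clear()

    # A thread runs inside the same address space, so its write is visible here.
    t = threading.Thread(target=append_item, args=("thread",))
    t.start()
    t.join()

    # A forked child gets its own copy of memory; its write stays in the child.
    # os.fork is POSIX-only, so this part is skipped on other systems.
    if hasattr(os, "fork"):
        pid = os.fork()
        if pid == 0:
            append_item("child process")
            os._exit(0)  # leave the child immediately, skipping cleanup handlers
        os.waitpid(pid, 0)  # collect the child's exit status

    return list(shared)


if __name__ == "__main__":
    print(demo())  # ['thread']
```

Only the thread's write shows up in the parent. The child's append happened in a separate copy of the list.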

Comparison Table

| Aspect | Process | Thread |
|---|---|---|
| Definition | Program instance in execution | Smallest unit of execution that can be scheduled |
| Memory | Separate memory space | Shared memory within process |
| Creation | Resource-intensive | Lightweight |
| Communication | Complex (IPC) | Simple (shared memory) |
| Control | Scheduled by the operating system | Runs within its process; scheduled by the kernel or a thread library |
| Overhead | High | Low |
| Use Case | When isolation is needed | For concurrent tasks |
| Failure Impact | Failure is isolated | A failure can bring down all threads in the process |
| Context Switch | Expensive | Less expensive |
| Data Sharing | Requires IPC mechanisms | Direct access to shared data |
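
The "Communication" row is easier to appreciate with a small example. Because a separate process cannot read the parent's variables, data has to be sent explicitly. The sketch below uses pipes (the child's standard input and output) as one possible IPC mechanism; the `CHILD` program and `sum_in_child` helper are hypothetical names for this illustration.

```python
import subprocess
import sys

# Hypothetical child program: reads numbers from stdin, writes their sum to stdout.
CHILD = "import sys; print(sum(int(x) for x in sys.stdin.read().split()))"


def sum_in_child(numbers):
    """Send numbers to a separate process over a pipe and read back the reply."""
    payload = " ".join(str(n) for n in numbers)
    result = subprocess.run(
        [sys.executable, "-c", CHILD],
        input=payload,        # written to the child's stdin pipe
        capture_output=True,  # child's stdout comes back through another pipe
        text=True,
        check=True,           # raise if the child exits with an error
    )
    return int(result.stdout)


if __name__ == "__main__":
    print(sum_in_child([1, 2, 3]))  # 6
```

Between threads, the same exchange would be an ordinary read of a shared variable, which is simpler but calls for synchronization.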

Process States and Lifecycle

Throughout its lifetime, a process goes through various states as it executes. The operating system’s scheduler manages these transitions based on system conditions and process behavior.

Basic Process States

- NEW: Process is being created. The operating system is allocating resources and setting up the process control block.

- READY: Process is waiting to be assigned to a processor. It has all the resources needed to run but the CPU is not currently executing its instructions.

- RUNNING: Process is currently being executed by the CPU. Its instructions are being carried out.

- WAITING (BLOCKED): Process is waiting for some event to occur (such as I/O completion or receiving a signal).

- TERMINATED: Process has finished execution or has been terminated by the operating system. Resources may still be held until the parent process collects exit status information.
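
The five states above can be modeled as a small state machine. This is only a sketch: the transition table follows the usual textbook five-state diagram (admit, dispatch, preempt, block, wake up, exit), which is an assumption layered on top of the list above, and the names `State`, `TRANSITIONS`, and `advance` are illustrative.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"
    TERMINATED = "terminated"


# Allowed transitions in the assumed five-state model.
TRANSITIONS = {
    State.NEW: {State.READY},          # admitted by the OS
    State.READY: {State.RUNNING},      # dispatched by the scheduler
    State.RUNNING: {
        State.READY,                   # preempted (time slice expired)
        State.WAITING,                 # blocks on I/O or another event
        State.TERMINATED,              # exits or is killed
    },
    State.WAITING: {State.READY},      # the awaited event occurred
    State.TERMINATED: set(),           # no way back
}


def advance(current, target):
    """Return `target` if the transition is allowed, otherwise raise ValueError."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.name} -> {target.name}")
    return target


if __name__ == "__main__":
    state = State.NEW
    for nxt in (State.READY, State.RUNNING, State.WAITING, State.READY):
        state = advance(state, nxt)
        print(state.name)
```

Note that a waiting process does not go straight back to RUNNING: it first becomes READY and waits for the scheduler to dispatch it.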

Expanded Process States

- SUSPENDED READY: Process is temporarily removed from memory and placed on disk, but is otherwise ready to execute.

- SUSPENDED WAITING: Process is waiting for an event and has been swapped out to disk.

- ZOMBIE: Process execution has completed, but its entry still remains in the process table until the parent collects its exit status.
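
The zombie state exists so that an exit status is not lost before the parent asks for it. A minimal sketch of the parent's side, assuming a hypothetical helper `run_and_reap`: it starts a child that exits with a chosen code and then collects that code.

```python
import subprocess
import sys


def run_and_reap(exit_code):
    """Start a child that exits with `exit_code`, then collect its status."""
    child = subprocess.Popen(
        [sys.executable, "-c", f"import sys; sys.exit({exit_code})"]
    )
    # Between the child's exit and this wait() call, a POSIX system keeps the
    # child's process-table entry around so the status can still be read.
    return child.wait()


if __name__ == "__main__":
    print(run_and_reap(3))  # 3
```

A parent that never waits for its children leaves those entries behind for as long as it keeps running.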

Process Creation

Processes can be created through several mechanisms:

- System Initialization: Initial processes started during boot

- Process Creation System Call (fork): A process creates a copy of itself

- User Request: User starting an application

- Batch Job Initiation: In batch systems

Process Creation in Unix/Linux

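#include <stdio.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

int main(void) {
    pid_t pid = fork(); // Create child process

    if (pid < 0) {
        // fork() failed: no child was created
        fprintf(stderr, "Fork Failed\n");
        return 1;
    } else if (pid == 0) {
        // Child process: fork() returned 0
        printf("Child Process: PID = %d\n", (int)getpid());
    } else {
        // Parent process: fork() returned the child's PID
        printf("Parent Process: PID = %d, Child PID = %d\n", (int)getpid(), (int)pid);
        wait(NULL); // Collect the child's exit status so it does not linger as a zombie
    }

    return 0;
}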

Process Creation in Windows

#include <windows.h>
#include <stdio.h>

int main(void) {
    STARTUPINFOA si;
    PROCESS_INFORMATION pi;
    // Writable buffer: the Unicode variant, CreateProcessW, may modify this string
    char cmd[] = "notepad.exe";

    ZeroMemory(&si, sizeof(si));
    si.cb = sizeof(si);
    ZeroMemory(&pi, sizeof(pi));

    // Create child process
    if (!CreateProcessA(NULL,  // Application name (taken from the command line)
                        cmd,   // Command line
                        NULL,  // Process handle not inheritable
                        NULL,  // Thread handle not inheritable
                        FALSE, // Set handle inheritance to FALSE
                        0,     // No creation flags
                        NULL,  // Use parent's environment block
                        NULL,  // Use parent's starting directory
                        &si,   // Pointer to STARTUPINFO structure
                        &pi))  // Pointer to PROCESS_INFORMATION structure
    {
        // GetLastError() returns a DWORD, so print it with %lu
        printf("CreateProcess failed (%lu).\n", GetLastError());
        return 1;
    }

    printf("Process created with ID: %lu\n", pi.dwProcessId);

    // Close process and thread handles
    CloseHandle(pi.hProcess);
    CloseHandle(pi.hThread);

    return 0;
}

Thread Types and Models

Threads can be implemented at different levels, each with its own advantages and disadvantages.

User-Level Threads

User-level threads are managed by a thread library rather than the operating system. The kernel is unaware of these threads.

Advantages:

- Thread switching doesn’t require kernel mode privileges

- Can be implemented on any operating system

- Scheduling can be application-specific

- Fast thread creation and switching

Disadvantages:

- If one thread blocks on I/O, all threads within the process are blocked

- No true parallelism with multiple CPUs since the kernel allocates CPU time to processes, not threads

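Examples: GNU Portable Threads (GNU Pth), the "green threads" of early Java versions. Note that POSIX Threads (Pthreads) is an API that can be implemented at either level; on modern Linux it is implemented with kernel-level threads, and current Java platform threads also map to kernel threads.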
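
The idea of threads that the kernel never sees can be sketched with Python generators. This is a toy cooperative scheduler, not how any particular thread library is implemented: each generator plays the role of a user-level thread, `yield` hands control back, and the hypothetical `run_round_robin` function is the application-specific scheduler.

```python
from collections import deque


def worker(name, steps):
    """A cooperative 'thread': each yield hands control back to the scheduler."""
    for i in range(steps):
        yield f"{name}:{i}"


def run_round_robin(tasks):
    """Schedule generator-based tasks entirely in user space; return the execution trace."""
    ready = deque(tasks)
    trace = []
    while ready:
        task = ready.popleft()
        try:
            trace.append(next(task))  # run the task until its next yield
            ready.append(task)        # still alive: back of the ready queue
        except StopIteration:
            pass                      # task finished; drop it
    return trace


if __name__ == "__main__":
    print(run_round_robin([worker("A", 2), worker("B", 3)]))
    # ['A:0', 'B:0', 'A:1', 'B:1', 'B:2']
```

Switching between tasks here is just a function call, which is why user-level switching is cheap. It also shows the main drawback: if one task made a blocking call instead of yielding, the whole loop (and every other task) would stall.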

Kernel-Level Threads

Kernel-level threads are managed directly by the operating system. The kernel maintains context information for both the process and its threads.

Advantages:

- If one thread blocks, the kernel can schedule another thread from the same process

- True parallelism on multiprocessor systems

- Kernel can schedule threads from different processes

Disadvantages:

- Thread operations are slower because they require system calls

- More overhead for the kernel to manage threads

- Thread implementation is operating system dependent

Examples: Windows threads, Linux’s Native POSIX Thread Library (NPTL)

Thread Models

Operating systems implement various threading models:

- Many-to-One Model: Many user-level threads mapped to a single kernel thread

- One-to-One Model: Each user-level thread maps to a kernel thread

- Many-to-Many Model: Maps many user-level threads to a smaller or equal number of kernel threads
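
As a small illustration of the one-to-one model: in CPython, each `threading.Thread` is backed by a native operating-system thread, and `threading.get_native_id()` (Python 3.8+, on platforms that support it) returns the ID the kernel assigned. The helper name `native_ids` is made up for this sketch.

```python
import threading


def native_ids(count):
    """Start `count` threads and return the kernel-assigned ID each one reports."""
    ids = []
    lock = threading.Lock()
    barrier = threading.Barrier(count)  # keeps all threads alive at the same time

    def record():
        with lock:
            ids.append(threading.get_native_id())
        barrier.wait()  # do not exit until every thread has recorded its ID

    threads = [threading.Thread(target=record) for _ in range(count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return ids


if __name__ == "__main__":
    print(native_ids(4))  # four distinct kernel thread IDs
```

Every Python-level thread reports its own kernel thread ID, which is what a one-to-one mapping looks like from the application side.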

Multi-Threading vs Multi-Processing

Multi-Threading

Multi-threading involves multiple threads of execution within a single process. These threads share the same memory space and resources but execute independently.

Characteristics:

- Single process, multiple threads

- Shared memory space

- Fast context switching

- Lower resource usage

- Efficient for I/O-bound tasks

- Potential for race conditions and deadlocks
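
The race-condition risk comes from several threads updating the same data at once. A minimal sketch of the usual remedy, a lock around the shared update, using a hypothetical `count_with_lock` helper:

```python
import threading


def count_with_lock(num_threads, increments):
    """Have several threads increment one shared counter, guarded by a lock."""
    counter = 0
    lock = threading.Lock()

    def work():
        nonlocal counter
        for _ in range(increments):
            with lock:        # only one thread at a time may enter
                counter += 1  # read-modify-write: not safe to interleave

    threads = [threading.Thread(target=work) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


if __name__ == "__main__":
    print(count_with_lock(4, 10_000))  # 40000
```

With the lock in place the result is always `num_threads * increments`. Without it, two threads could read the same old value and one update would be lost.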

Use Cases:

- GUI applications (responsive interface)

- Web servers (handling multiple clients)

- Background processing (non-blocking operations)

- Parallel algorithms (divide and conquer)

Multi-Processing

Multi-processing involves multiple processes running concurrently. Each process has its own memory space and resources, providing isolation but requiring more overhead for communication.

Characteristics:

- Multiple processes

- Separate memory spaces

- Slower context switching

- Higher resource usage

- Better isolation and stability

- More complex inter-process communication

Use Cases:

- CPU-intensive applications

- Applications requiring high reliability

- Security-critical applications

- Systems with large memory requirements

Python Example: Multi-Processing vs Multi-Threading

# Multi-processing example
from multiprocessing import Process

def process_function(name):
    print(f'Process {name} is running')

if __name__ == '__main__':
    processes = []
    for i in range(5):
        p = Process(target=process_function, args=(f'P{i}',))
        processes.append(p)
        p.start()

    for p in processes:
        p.join()

# Multi-threading example
import threading

def thread_function(name):
    print(f'Thread {name} is running')

if __name__ == '__main__':
    threads = []
    for i in range(5):
        t = threading.Thread(target=thread_function, args=(f'T{i}',))
        threads.append(t)
        t.start()

    for t in threads:
        t.join()

Context Switching

Context switching is the mechanism by which the CPU switches from one process or thread to another: the operating system saves the state of the one currently running and loads the saved state of the next, so that multiple processes or threads can share a single CPU. The context includes all register values, the program counter, memory management information, and other data required to resume execution exactly where it left off.

Steps in Context Switching

- Time Out (Preemption):
  - The running process (e.g., P1) reaches its time slice limit.
  - The Scheduler is notified to switch the running process.
- Saving State:
  - The state of P1 (CPU registers, program counter, etc.) is saved to memory.
  - This ensures that P1 can resume from where it stopped.
- Loading Next Process State:
  - The state of P2 is loaded from memory.
  - P2 is now ready to run.
- Dispatching:
  - The CPU starts executing P2.
  - This cycle repeats for process scheduling.
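
The steps above can be sketched as a toy simulation. This is not how a real kernel is written: the `PCB` class keeps only a program counter and a count of remaining work units (both hypothetical stand-ins for real CPU state), and `round_robin` plays the role of the scheduler.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class PCB:
    pid: str
    remaining: int            # work units left (stand-in for real work)
    program_counter: int = 0  # saved position, restored on the next dispatch


def round_robin(pcbs, time_slice):
    """Simulate time-sliced scheduling; return (pid, start_pc, end_pc) per dispatch."""
    ready = deque(pcbs)
    log = []
    while ready:
        pcb = ready.popleft()                 # pick the next process
        pc = pcb.program_counter              # load its saved state
        ran = min(time_slice, pcb.remaining)  # run until done or the slice expires
        pc += ran
        pcb.remaining -= ran
        pcb.program_counter = pc              # save state back into the PCB
        log.append((pcb.pid, pc - ran, pc))
        if pcb.remaining > 0:
            ready.append(pcb)                 # preempted: back to the ready queue
    return log


if __name__ == "__main__":
    for entry in round_robin([PCB("P1", 5), PCB("P2", 3)], time_slice=2):
        print(entry)
    # ('P1', 0, 2)
    # ('P2', 0, 2)
    # ('P1', 2, 4)
    # ('P2', 2, 3)
    # ('P1', 4, 5)
```

Each time P1 is dispatched again it continues from the program counter saved at its last preemption, which is exactly what saving and restoring context is for.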

Context Switching Overhead

Context switching incurs overhead that affects system performance:

- Direct Costs: CPU time spent saving and loading registers, changing memory maps, etc.

- Indirect Costs: Cache misses, TLB flushes, pipeline stalls

- Process vs Thread Switching: Switching between threads of the same process is generally less expensive because they share one memory address space, so the memory maps do not need to change

- Scheduling Algorithms: Different algorithms can affect the frequency of context switches

Reducing Context Switch Overhead

Operating systems employ various techniques to reduce context switching overhead:

- Efficient Thread Scheduling: Group related threads together

- CPU Affinity: Keep processes/threads on the same CPU core

- Time Slice Tuning: Use longer time slices so that preemptive context switches happen less often, at some cost to responsiveness

- Asynchronous I/O: Avoid blocking operations that force context switches
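
CPU affinity can also be set from application code. The sketch below assumes a platform where Python exposes `os.sched_getaffinity` and `os.sched_setaffinity` (Linux does; several other systems do not), and returns `None` otherwise. The helper name `pin_to_one_cpu` is made up for this example.

```python
import os


def pin_to_one_cpu():
    """Pin the current process to a single CPU, then restore the original set.

    Returns the set of CPUs the process was allowed to use while pinned,
    or None where the affinity calls are unavailable.
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    original = os.sched_getaffinity(0)  # 0 means "the calling process"
    target = {min(original)}            # pick one CPU from the allowed set
    try:
        os.sched_setaffinity(0, target)
        return os.sched_getaffinity(0)
    finally:
        os.sched_setaffinity(0, original)  # always undo the pinning


if __name__ == "__main__":
    print(pin_to_one_cpu())
```

Staying on one core lets a task keep benefiting from data already in that core's caches, which reduces the indirect costs listed earlier.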

Hyper-Threading and Hardware Concurrency

Hyper-Threading is Intel’s implementation of Simultaneous Multi-Threading (SMT) technology, which allows a single physical CPU core to execute multiple threads simultaneously.

How Hyper-Threading Works

- Duplicated Architectural State
  - Duplicate register sets
  - Multiple thread states managed by the same core
  - Appears as multiple logical processors to the operating system
- Shared Resources
  - Shared execution engine
  - Shared caches (L1, L2, L3)
  - Shared arithmetic logic units and floating-point units
- Benefits
  - Improved efficiency for multi-threaded applications
  - Enhanced parallelism for tasks with different resource needs
  - Better multitasking performance
  - Utilization of idle execution units

Performance Considerations

Hyper-Threading is commonly cited as improving throughput by roughly 15-30% over running a single thread on the same physical core, for workloads that can keep both logical processors busy. The actual benefit varies, and some workloads see little or no gain, depending on:

- Application type (CPU-bound vs. I/O-bound)

- Thread contention for shared resources

- Cache usage patterns

- Instruction mix

AMD implements its own version of SMT in its Ryzen and EPYC processors, which functions similarly to Intel's Hyper-Threading but with architecture-specific optimizations.

Key Differences from Intel Hyper-Threading:

- Different cache hierarchy

- CCX (Core Complex) based design

- Thread prioritization mechanisms

Practical Examples: Process and Thread Management

Process and Thread Creation in Java

// Process creation in Java (ProcessExample.java)
public class ProcessExample {
    public static void main(String[] args) throws Exception {
        // notepad.exe is Windows-specific; substitute another command on other systems
        ProcessBuilder processBuilder = new ProcessBuilder("notepad.exe");
        Process process = processBuilder.start();
        System.out.println("Notepad process started");

        // Wait for process to complete
        int exitCode = process.waitFor();
        System.out.println("Notepad exited with code: " + exitCode);
    }
}

// Thread creation in Java (ThreadExample.java)
public class ThreadExample {
    public static void main(String[] args) {
        Thread thread = new Thread(() -> {
            System.out.println("Thread is running");
            try {
                Thread.sleep(2000);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and stop early
                Thread.currentThread().interrupt();
                return;
            }
            System.out.println("Thread completed");
        });

        thread.start();
        System.out.println("Main thread continues execution");
    }
}

Process and Thread Management in Linux

# List all processes

ps aux

# View process hierarchy

pstree

# Monitor processes in real-time

top

# View thread information for process with PID 1234

ps -T -p 1234

# Set process priority (niceness)

nice -n 10 ./myprogram

# Change priority of running process

renice +5 -p 1234
