
Memory Layout in C/C++ - A Developer's Refresher

Understanding memory management for better debugging and optimization

Overview

Memory management is one of the most fundamental and critical aspects of programming. Understanding how a program’s memory is organized and managed is essential for writing efficient, bug-free code, especially in languages like C and C++ where the programmer has direct control over memory allocation and deallocation.

When a program runs, the operating system allocates a block of memory for the process. This memory space is divided into multiple segments, each with specific purposes and characteristics. Knowing how these segments function and interact allows developers to make informed decisions about memory usage, troubleshoot complex issues, and optimize performance.

The memory layout concepts we use today evolved alongside early computing systems. The stack-based memory model dates back to the 1950s, when Konrad Zuse's Z4 computer implemented a mechanical memory stack. The heap allocation model emerged in the 1960s with languages like ALGOL and early operating systems that needed dynamic memory management.

Dennis Ritchie's development of C in the early 1970s brought these memory concepts to the forefront of programming, offering direct memory management through pointers and malloc/free functions. C's approach to memory management remained largely unchanged through the evolution of C++, creating the foundation of memory models that influences most modern programming languages today.

Memory Layout

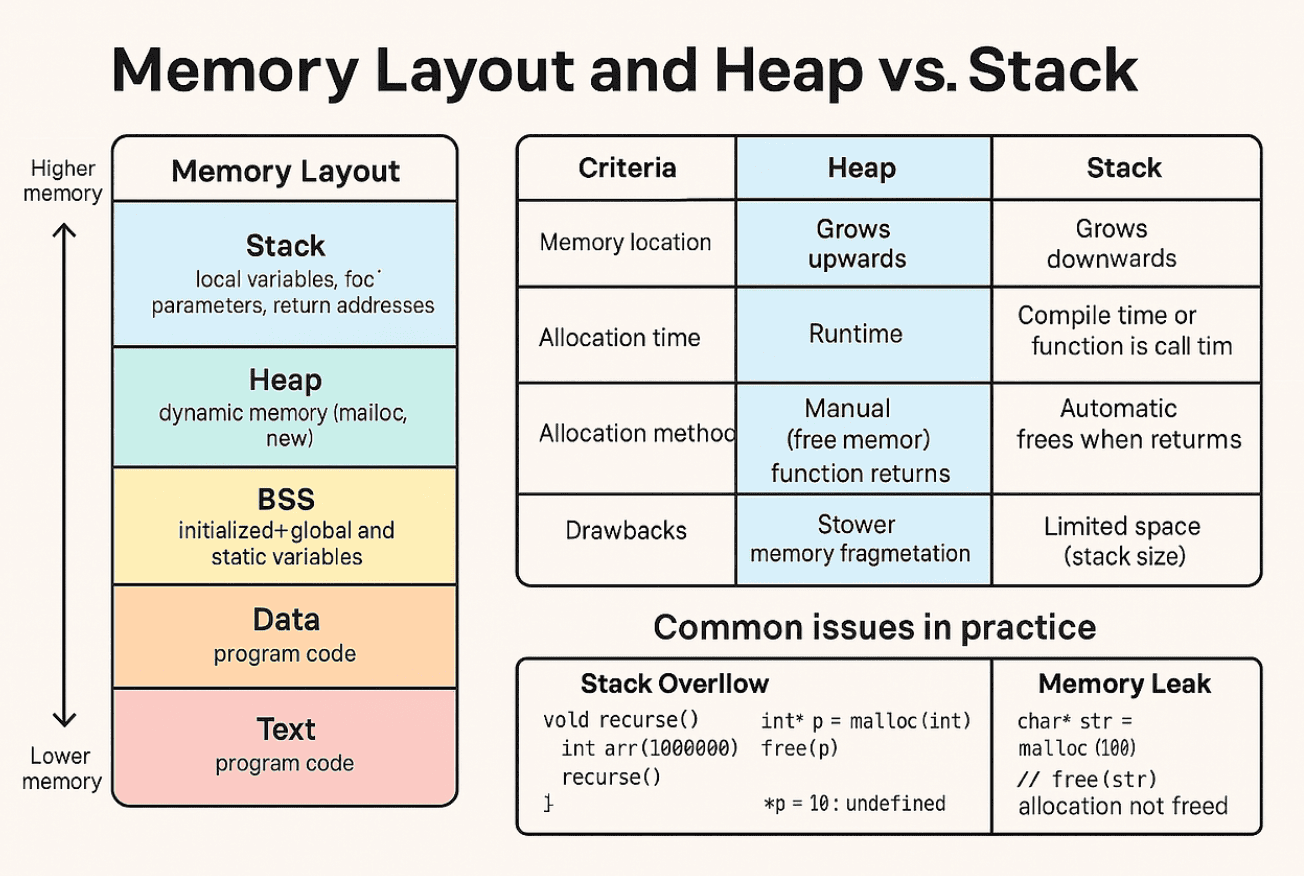

When a C/C++ program is executed, the operating system gives the process its own virtual address space, divided into the following regions:

Text Segment (Code Segment)

The Text Segment contains the executable instructions of the program.

Location: Lower part of the memory space

Permissions: Read-only (generally) with execution rights

Contents: Machine code; read-only constants and string literals are usually placed in an adjacent read-only section (.rodata)

This segment is often shared among multiple processes running the same program, saving memory. It’s typically read-only to prevent accidental modification of instructions, which would cause program crashes.

```c
// The machine instructions for main() are stored in the text segment
int main() {
    return 42;
}

// The string literal itself is usually stored in a read-only section (.rodata)
// mapped alongside the text segment; the pointer variable `message` is an
// initialized global, so it lives in the data segment.
const char* message = "Hello, World!";
```

Data Segment

The Data Segment holds initialized global and static variables.

Location: Above the text segment

Permissions: Read-write

Contents: Initialized global variables, static variables

In practice, initialized data is often split further: a read-only area (.rodata) for constants and a read-write area (.data) for ordinary initialized variables. Variables in this region exist for the entire duration of the program.

```c
// These variables are stored in the data segment
int global_var = 100;         // Initialized global variable
static int static_var = 200;  // Initialized static variable

void function() {
    static int static_local = 300;  // Initialized static local variable (also in data segment)
}
```

BSS Segment (Block Started by Symbol)

The BSS Segment stores uninitialized global and static variables.

Location: Above the data segment

Permissions: Read-write

Contents: Uninitialized global and static variables

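The BSS segment doesn’t take up space in the executable file; only its size is recorded. The operating system zero-fills this memory at program startup, which is why compilers usually place variables explicitly initialized to zero here as well.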

```c
// These variables are stored in the BSS segment
int global_uninitialized;         // Uninitialized global variable
static int static_uninitialized;  // Uninitialized static variable

void function() {
    static int static_local_uninit;  // Uninitialized static local variable (BSS)
}
```
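Zero-filling is more than an implementation detail: the C standard guarantees that objects with static storage duration and no initializer start as zero (or a null pointer), and toolchains implement that guarantee by placing them in BSS. A small sketch with hypothetical names that relies on it:

```c
#include <stddef.h>

// No initializers: the standard guarantees these start as zeros / NULL,
// and typical toolchains place them in .bss.
static int counters[1000];
static const char *last_name;

// Returns the sum of all counters; before any writes this is 0.
long bss_counter_sum(void) {
    long sum = 0;
    for (size_t i = 0; i < sizeof counters / sizeof counters[0]; i++) {
        sum += counters[i];
    }
    return sum;
}

// Returns 1 if the pointer still holds its default (null) value.
int bss_pointer_is_null(void) {
    return last_name == NULL;
}
```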

Heap Segment

The Heap is used for dynamic memory allocation.

Location: Above the BSS segment, grows upward (toward higher addresses)

Permissions: Read-write

Contents: Dynamically allocated memory

Memory in the heap must be explicitly allocated and freed by the programmer. In C it is allocated with malloc(), calloc(), or realloc() and released with free(); in C++ it is allocated with new and released with delete.

```c
#include <stdlib.h>

// C example of heap allocation
void c_heap_example(void) {
    int* ptr = (int*)malloc(sizeof(int) * 10);  // Allocates an array of 10 integers on the heap
    if (ptr != NULL) {
        // Use the allocated memory
        ptr[0] = 42;
        // ...
        // Must free the memory when done
        free(ptr);
        ptr = NULL;  // Good practice to avoid dangling pointers
    }
}
```

```cpp
// C++ example of heap allocation
void cpp_heap_example() {
    int* array = new int[10];  // Allocates an array of 10 integers on the heap (throws std::bad_alloc on failure)
    try {
        // Use the allocated memory
        array[0] = 42;
        // ...
    } catch (...) {
        delete[] array;  // Free before rethrowing so an exception does not leak the array
        throw;
    }
    // Must free the memory when done
    delete[] array;
    array = nullptr;  // C++ equivalent of setting to NULL
}
```

Stack Segment

The Stack is used for local variables, function parameters, and call management.

Location: High memory addresses, grows downward (toward lower addresses)

Permissions: Read-write

Contents: Local variables, function parameters, return addresses, saved registers

The stack follows a Last-In-First-Out (LIFO) structure. Each function call creates a new stack frame, which is removed when the function returns.

```c
void function(int parameter) {  // 'parameter' lives in the stack frame (on x86-64 it arrives in a register and is spilled to the stack if needed)
    int local_variable = 10;     // 'local_variable' is stored on the stack
    // When the function returns, these stack variables are automatically deallocated
}

int main() {
    function(42);  // Creates a stack frame for 'function'
    return 0;
}
```

Memory Mapping Segment

This region is used for mapping files into memory and for dynamic libraries.

Location: Between heap and stack

Permissions: Varies based on mapping

Contents: Memory-mapped files, shared libraries

This area is used by the mmap() system call to map files or devices into memory and for loading shared libraries.

```c
#include <sys/mman.h>
#include <fcntl.h>
#include <unistd.h>

void memory_mapping_example() {
    int fd = open("file.txt", O_RDONLY);
    if (fd != -1) {
        // Get file size
        off_t size = lseek(fd, 0, SEEK_END);
        lseek(fd, 0, SEEK_SET);
        // Map file into memory (mmap rejects a zero length, so skip empty files)
        if (size > 0) {
            void* mapped = mmap(NULL, (size_t)size, PROT_READ, MAP_PRIVATE, fd, 0);
            if (mapped != MAP_FAILED) {
                // Use the mapped memory
                // ...
                // Unmap when done
                munmap(mapped, (size_t)size);
            }
        }
        close(fd);
    }
}
```
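To see these regions on a real system, a program can print one address from each. This is a sketch assuming a POSIX system (it casts a function pointer to `void *`, which POSIX permits but ISO C does not) and glibc, whose malloc serves large requests such as 1 MB with mmap. The function and variable names are hypothetical. Exact addresses change from run to run because of address space layout randomization (ASLR), but on a typical 64-bit Linux build the text, data, BSS, and heap addresses are lowest, the large allocation usually lands in the mapping region, and the stack address is highest.

```c
#include <stdio.h>
#include <stdlib.h>

int initialized_global = 1;  // data segment
int uninitialized_global;    // BSS segment

// Prints one address from each region; returns 0, or -1 if malloc fails.
int print_memory_layout(void) {
    int local = 0;                       // stack
    const char *literal = "literal";     // read-only data next to the text segment
    int *small = malloc(sizeof *small);  // heap
    void *large = malloc(1024 * 1024);   // on glibc, served by mmap
    if (small == NULL || large == NULL) {
        free(small);
        free(large);
        return -1;
    }

    // Casting a function pointer to void * is allowed by POSIX, not by ISO C.
    printf("text   (function)     : %p\n", (void *)print_memory_layout);
    printf("rodata (literal)      : %p\n", (void *)literal);
    printf("data   (initialized)  : %p\n", (void *)&initialized_global);
    printf("bss    (uninitialized): %p\n", (void *)&uninitialized_global);
    printf("heap   (small malloc) : %p\n", (void *)small);
    printf("mmap   (large malloc) : %p\n", large);
    printf("stack  (local)        : %p\n", (void *)&local);

    free(small);
    free(large);
    return 0;
}
```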

Stack vs Heap

The two primary regions for runtime memory allocation are the stack and the heap. Understanding their differences is crucial for effective memory management.

| Characteristic | Stack | Heap |
|---|---|---|
| Memory Growth | Grows downward (from higher to lower addresses) | Grows upward (from lower to higher addresses) |
| Allocation Time | Frame size fixed at compile time; allocated at function call time | Runtime, on request |
| Allocation Method | Automatic (allocated/deallocated on function call/return) | Manual (developer must explicitly free) |
| Speed | Very fast (stack pointer adjustment) | Slower (requires memory management algorithms) |
| Size Limitation | Limited (typically 1 MB to 8 MB) | Large (limited by available virtual memory) |
| Memory Layout | Contiguous blocks in LIFO order | Can be fragmented across memory |
| Allocation Failure | Stack overflow (usually a crash) | malloc returns NULL, new throws std::bad_alloc (can be handled) |
| Leak Possibility | Almost none (automatic cleanup) | Possible memory leaks (if not freed) |
| Access Pattern | Predictable, good cache performance | Can be random, potentially worse cache performance |
| Variable Scope | Local to the function; invalid after it returns | Lives until freed; reachable wherever a pointer to it is held |
| Data Structure Support | Fixed size arrays, small objects | Dynamic arrays, large objects, recursive structures |
| Flexibility | Low (size usually fixed at compile time) | High (size can be determined at runtime) |

Stack Memory Allocation

Stack memory allocation is straightforward and managed by the compiler:

```c
void stack_example() {
    // All these variables are allocated on the stack
    int a = 10;                  // Basic integer
    double b[5] = {1.1, 2.2};    // Array (fixed size); b[2] to b[4] are zero-initialized
    char message[50] = "Stack";  // Character array
    // When this function exits, all stack memory is automatically reclaimed
}
```

Benefits of stack allocation:

- Very fast allocation and deallocation

- No memory fragmentation

- No need to explicitly free memory

- Memory is automatically reclaimed when variables go out of scope

Limitations of stack allocation:

- Size limitations (stack overflow for large allocations)

- Variables cannot persist beyond their scope

- Size must usually be known at compile time (C99 variable-length arrays are an exception, but they carry the same overflow risk)

Heap Memory Allocation

Heap memory must be explicitly managed:

```c
#include <stdio.h>
#include <stdlib.h>

// C style heap allocation
void heap_example_c() {
    // Allocate a single integer
    int* single_int_ptr = (int*)malloc(sizeof(int));
    if (single_int_ptr != NULL) {
        *single_int_ptr = 42;
        printf("Value: %d\n", *single_int_ptr);
        free(single_int_ptr);
    }

    // Allocate an array of 1000 integers
    int* array_ptr = (int*)malloc(1000 * sizeof(int));
    if (array_ptr != NULL) {
        // Initialize array
        for (int i = 0; i < 1000; i++) {
            array_ptr[i] = i;
        }
        // Use array
        printf("Element 500: %d\n", array_ptr[500]);
        // Must free the memory
        free(array_ptr);
    }

    // calloc initializes memory to zero
    int* zeroed_array = (int*)calloc(50, sizeof(int));
    if (zeroed_array != NULL) {
        // All elements are already 0
        printf("First element: %d\n", zeroed_array[0]);
        free(zeroed_array);
    }

    // realloc changes the size of an existing allocation
    int* resizable_array = (int*)malloc(10 * sizeof(int));
    if (resizable_array != NULL) {
        // Later, resize to hold 20 integers
        int* new_array = (int*)realloc(resizable_array, 20 * sizeof(int));
        if (new_array != NULL) {
            resizable_array = new_array;  // Update pointer if reallocation succeeded
            // Use the resized array
            resizable_array[15] = 100;
            free(resizable_array);  // Only need to free the final pointer
        } else {
            // realloc failed, but original memory is still allocated
            free(resizable_array);
        }
    }
}
```

```cpp
#include <iostream>
#include <memory>

// C++ style heap allocation (std::make_unique requires C++14)
void heap_example_cpp() {
    // Single object allocation
    int* p_int = new int(42);
    std::cout << "Value: " << *p_int << std::endl;
    delete p_int;  // Must delete to free memory

    // Array allocation
    int* p_array = new int[100];
    for (int i = 0; i < 100; i++) {
        p_array[i] = i * 2;
    }
    std::cout << "Element 50: " << p_array[50] << std::endl;
    delete[] p_array;  // Note the [] for array deletion

    // C++ smart pointers (modern C++)
    std::unique_ptr<int> smart_ptr = std::make_unique<int>(100);
    // No need to explicitly delete, memory is freed when smart_ptr goes out of scope

    // Array with smart pointer
    std::unique_ptr<int[]> smart_array = std::make_unique<int[]>(200);
    smart_array[0] = 42;
    // Automatically freed when smart_array goes out of scope
}
```

Benefits of heap allocation:

- Flexible size (can allocate large chunks of memory)

- Dynamic allocation (size can be determined at runtime)

- Memory can persist across function calls

- Suitable for complex data structures like linked lists, trees

Limitations of heap allocation:

- Slower than stack allocation

- Potential for memory leaks if not properly freed

- Memory fragmentation

- Manual memory management responsibility

When to Use Stack vs Heap

Use the Stack when:

- The memory needed is small

- The data has a clear, limited lifetime within a function

- You need the fastest possible allocation performance

- You’re working with fixed-size data structures

- You want to avoid memory management complexity

Use the Heap when:

- You need large amounts of memory

- The size of data is determined at runtime

- Data needs to persist beyond the function that created it

- You’re implementing complex data structures (trees, graphs)

- You need objects to exist independently of function call stacks
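Two of these heap criteria often come together: the size is known only at runtime, and the result must outlive the function that computes it. A minimal sketch with hypothetical helper names, in which the caller takes ownership of the returned array:

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

// Hypothetical helper: returns a heap array holding the first n squares,
// or NULL on failure. The caller owns the result and must free() it.
int *make_squares(size_t n) {
    if (n == 0 || n > SIZE_MAX / sizeof(int)) return NULL;  // reject empty or overflowing sizes
    int *squares = malloc(n * sizeof *squares);
    if (squares == NULL) return NULL;
    for (size_t i = 0; i < n; i++) {
        squares[i] = (int)(i * i);
    }
    return squares;  // outlives this call, which a stack array could not
}

// Example caller: uses the array, then releases it. Returns -1 on failure.
long sum_of_squares(size_t n) {
    int *squares = make_squares(n);
    if (squares == NULL) return -1;
    long sum = 0;
    for (size_t i = 0; i < n; i++) {
        sum += squares[i];
    }
    free(squares);
    return sum;
}
```

Stating who frees the memory (here, whoever calls `make_squares`) is the convention that keeps this pattern leak-free.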

Real-world Memory Issues and Debugging

Memory-related bugs are among the most common and challenging issues in C and C++ programming. Understanding these issues and knowing how to identify and fix them is essential for developing reliable software.

Stack Overflow

Stack overflow occurs when a program attempts to use more stack memory than is available. This typically happens due to deep recursion or large local variable allocations.

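```c
#include <stdio.h>

// Stack overflow due to infinite recursion
// (with optimizations on, the compiler may turn the tail call into a loop; build with -O0 to observe the overflow)
void infinite_recursion(int n) {
    char buffer[1024];  // Reserves 1KB per frame (an optimizer may drop it because it is unused)
    printf("Recursion level: %d\n", n);
    infinite_recursion(n + 1);  // No base case to stop recursion
}

// Stack overflow due to large local array
void large_local_array() {
    int huge_array[1000000];  // ~4MB on the stack: overflows Windows' 1MB default, half of Linux's typical 8MB
    huge_array[0] = 42;
}
```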

Detection:

- Program crashes with “stack overflow” or “segmentation fault”

- Using tools like `valgrind` with `--tool=memcheck`

- Debuggers like GDB show the call stack at the time of the crash

Prevention:

- Ensure recursive functions have proper termination conditions

- Use heap for large arrays or data structures

- Increase stack size if necessary (e.g., `ulimit -s` on Unix systems)
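Applying the "use the heap" advice to `large_local_array()` looks roughly like the sketch below. The function names are hypothetical; `getrlimit(RLIMIT_STACK, ...)` is the POSIX call that reports the same limit `ulimit -s` shows, which helps when judging whether an allocation is too large for the stack.

```c
#include <stddef.h>
#include <stdlib.h>
#include <sys/resource.h>

// Heap-backed version of large_local_array(): the ~4MB array comes from
// malloc, so it does not count against the stack limit.
// Returns 0 on success, -1 if the allocation fails.
int large_heap_array(void) {
    size_t count = 1000000;
    int *huge_array = malloc(count * sizeof *huge_array);
    if (huge_array == NULL) return -1;
    huge_array[0] = 42;
    huge_array[count - 1] = 42;
    free(huge_array);
    return 0;
}

// Returns the current soft stack limit in bytes (`ulimit -s` reports it
// in KiB), or 0 if it cannot be queried. RLIM_INFINITY means unlimited.
unsigned long long stack_limit_bytes(void) {
    struct rlimit rl;
    if (getrlimit(RLIMIT_STACK, &rl) != 0) return 0;
    return (unsigned long long)rl.rlim_cur;
}
```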

Memory Leaks

Memory leaks occur when allocated memory is never freed. Over time, this can cause a program to consume excessive memory.

```c
#include <stdlib.h>
#include <string.h>

// Simple memory leak
void memory_leak_example() {
    char* buffer = (char*)malloc(1024);  // Allocate memory
    if (buffer == NULL) return;
    strcpy(buffer, "Important data");
    // Function exits without calling free(buffer)
    // The memory is leaked - no way to access it, but still allocated
}

// Memory leak in a loop
void repeated_leak() {
    for (int i = 0; i < 1000; i++) {
        int* data = (int*)malloc(sizeof(int) * 100);
        // Process data
        // No free() - leaks memory in each iteration
    }
}
```

Detection:

- Running the program with Valgrind: `valgrind --leak-check=full ./program`

- Tools like ASAN (Address Sanitizer): `gcc -fsanitize=address program.c`

- Monitoring system memory usage during execution

Prevention:

- Always pair `malloc`/`new` with the corresponding `free`/`delete`

- Use smart pointers in C++ (e.g., `std::unique_ptr`, `std::shared_ptr`)

- Follow RAII (Resource Acquisition Is Initialization) principles in C++

- Implement cleanup functions that are always called (using `atexit` or similar)
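In C, a common way to make sure every allocation is released on every path, including error paths, is a single cleanup label reached with `goto`. A sketch of the pattern; `process_records` is a hypothetical function:

```c
#include <stdlib.h>
#include <string.h>

// Single-exit cleanup: every path, including early failures, jumps to
// `cleanup`, so each allocation is released exactly once.
// Returns 0 on success, -1 on failure.
int process_records(size_t count) {
    int status = -1;
    char *buffer = NULL;
    int *data = NULL;

    buffer = malloc(1024);
    if (buffer == NULL) goto cleanup;
    strcpy(buffer, "Important data");

    data = malloc(count * sizeof *data);
    if (data == NULL) goto cleanup;
    for (size_t i = 0; i < count; i++) data[i] = (int)i;

    status = 0;  // success

cleanup:
    free(data);  // free(NULL) is a no-op, so partially completed paths are safe
    free(buffer);
    return status;
}
```

Because `free(NULL)` does nothing, the cleanup block does not need to know how far the function got before it failed.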

Use After Free

Use after free occurs when a program continues to use memory after it has been freed.

```c
#include <stdio.h>
#include <stdlib.h>

// Use after free example
void use_after_free() {
    int* ptr = (int*)malloc(sizeof(int));
    if (ptr == NULL) return;
    *ptr = 42;
    free(ptr);  // Memory is deallocated

    // BUG: Accessing freed memory
    *ptr = 100;  // Undefined behavior - might crash, corrupt memory, or appear to work
    printf("Value: %d\n", *ptr);  // Dangerous - using freed memory
}
```

Detection:

- Valgrind: `valgrind --tool=memcheck ./program`

- ASAN: `gcc -fsanitize=address program.c`

- Random crashes or corrupted data

Prevention:

- Set pointers to NULL after freeing

- Use tools to detect invalid memory accesses

- Use smart pointers in C++ that manage object lifetime
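The "set pointers to NULL" advice is easy to forget when written by hand, so codebases often wrap it in a macro. `FREE_AND_NULL` below is a hypothetical name, not a standard facility, and it clears only the variable passed to it; copies of the pointer held elsewhere still dangle.

```c
#include <stdlib.h>

// Frees the memory and nulls the variable that pointed to it.
// The do/while(0) wrapper makes the macro behave like a single statement.
#define FREE_AND_NULL(p) do { free(p); (p) = NULL; } while (0)

// Hypothetical demo: returns 1 if the pointer ends up NULL and the
// repeated FREE_AND_NULL was harmless.
int double_free_is_harmless(void) {
    int *ptr = malloc(10 * sizeof *ptr);
    if (ptr == NULL) return 0;
    ptr[0] = 42;
    FREE_AND_NULL(ptr);
    FREE_AND_NULL(ptr);  // ptr is NULL here, so this calls free(NULL), which does nothing
    return ptr == NULL;
}
```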

Double Free

Double free occurs when free() is called on the same memory location twice.

```c
#include <stdlib.h>

// Double free example
void double_free() {
    int* ptr = (int*)malloc(sizeof(int) * 10);
    if (ptr == NULL) return;
    // Use the memory
    ptr[0] = 42;
    free(ptr);  // First free - correct
    // ... some code ...
    free(ptr);  // Second free - WRONG! This memory is already freed
}
```

Detection:

- Program crashes with “double free” or “corrupted memory” errors

- Valgrind and ASAN can detect these issues

Prevention:

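- Set pointers to NULL after freeing: `ptr = NULL;` (calling `free(NULL)` does nothing, so an accidental second free through that pointer becomes harmless)

- Use smart pointers in C++ that prevent double deletion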

Buffer Overflow

Buffer overflow occurs when a program writes beyond the bounds of allocated memory.

```c
#include <stdlib.h>
#include <string.h>

// Stack buffer overflow
void stack_buffer_overflow() {
    char buffer[10];
    // BUG: Copying a 31-character string (32 bytes with the terminating NUL) into a 10-byte buffer
    strcpy(buffer, "This is too long for the buffer");
    // Can overwrite other stack variables, return addresses, etc.
}

// Heap buffer overflow
void heap_buffer_overflow() {
    char* buffer = (char*)malloc(10);
    if (buffer == NULL) return;
    // BUG: Writing 29 bytes (28 characters plus NUL) into a 10-byte allocation
    strcpy(buffer, "Too long string for 10 bytes");
    free(buffer);
}
```

Detection:

- Valgrind: `valgrind --tool=memcheck ./program`

- ASAN: `gcc -fsanitize=address program.c`

- Unpredictable program behavior or crashes

Prevention:

- Always check buffer sizes before writing

- Use bounded string functions such as `snprintf`; `strncpy` also limits the copy, but it leaves the destination without a terminating NUL when the source is too long

- In C++, use containers like `std::string` and `std::vector`

- Enable compiler warnings: `-Wall -Wextra -Werror`
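Checking sizes by hand at every call site is error-prone, so it helps to funnel copies through one helper that always NUL-terminates and reports truncation. A sketch built on `snprintf`, whose return value is the length the full string would have needed; `copy_string` and `safe_copy_demo` are hypothetical names:

```c
#include <stdio.h>
#include <string.h>

// Copies src into dst (capacity dst_size bytes), always NUL-terminating.
// Returns 0 if the whole string fit, -1 if it was truncated or dst_size is 0.
int copy_string(char *dst, size_t dst_size, const char *src) {
    if (dst_size == 0) return -1;
    int needed = snprintf(dst, dst_size, "%s", src);  // length of src, excluding NUL
    if (needed < 0 || (size_t)needed >= dst_size) return -1;
    return 0;
}

// Same scenario as stack_buffer_overflow(), minus the overflow.
int safe_copy_demo(void) {
    char buffer[10];
    int status = copy_string(buffer, sizeof buffer, "This is too long for the buffer");
    // buffer now holds the first 9 characters, "This is t", plus the terminator
    return status == -1 && strcmp(buffer, "This is t") == 0;
}
```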

Dangling Pointers

A dangling pointer is a pointer that still refers to memory whose lifetime has ended: heap memory that has been freed, or a local variable whose function has returned.

```c
#include <stdlib.h>

// Returning address of local variable (stack)
char* dangling_stack_pointer() {
    char local_array[20] = "Hello";
    return local_array;  // BUG: Returns pointer to memory that will be invalid (compilers usually warn)
}

// Using freed heap memory
int* create_dangling_pointer() {
    int* ptr = (int*)malloc(sizeof(int));
    if (ptr == NULL) return NULL;
    *ptr = 42;
    free(ptr);
    return ptr;  // BUG: Returns a pointer to freed memory
}
```

Detection:

- Valgrind and ASAN

- Unpredictable program behavior

Prevention:

- Never return pointers to local variables

- Carefully track object ownership and lifetime

- Use smart pointers in C++
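Two common ways to fix `dangling_stack_pointer()` are to let the caller supply the buffer or to return heap memory that the caller must free. A sketch of both, with hypothetical function names:

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

// Fix 1: the caller provides the storage, so it stays valid as long as the caller needs it.
void fill_greeting(char *buf, size_t size) {
    if (size > 0) snprintf(buf, size, "%s", "Hello");
}

// Fix 2: return heap memory; the caller owns it and must free() it.
char *make_greeting(void) {
    const char *text = "Hello";
    char *copy = malloc(strlen(text) + 1);
    if (copy != NULL) strcpy(copy, text);
    return copy;
}

// Demo: returns 1 if both fixes produce a usable "Hello".
int greeting_demo(void) {
    char local[20];
    fill_greeting(local, sizeof local);
    char *owned = make_greeting();
    int ok = owned != NULL && strcmp(local, "Hello") == 0 && strcmp(owned, "Hello") == 0;
    free(owned);
    return ok;
}
```

Returning a pointer to a `static` local array also avoids the dangling pointer, but every call then shares one buffer, which breaks reentrancy and thread safety.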

Memory Debugging Tools and Techniques

Effective memory debugging is essential for developing reliable C/C++ programs. Here are the key tools and techniques:

Valgrind

Valgrind is a comprehensive memory debugging and profiling tool.

```shell
# Basic memory error detection (memcheck is the default tool)
valgrind --tool=memcheck ./your_program

# Detailed leak checking
valgrind --leak-check=full --show-leak-kinds=all ./your_program

# Track origins of uninitialized values
valgrind --track-origins=yes ./your_program

# Print a suppression entry for each reported error, to copy into a suppressions file
valgrind --gen-suppressions=all ./your_program

# Run with a suppressions file to silence known false positives
valgrind --suppressions=suppress.txt ./your_program
```

Address Sanitizer (ASAN)

ASAN is a fast memory error detector built into modern compilers.

```shell
# Compile with ASAN
gcc -fsanitize=address -g program.c -o program

# Run the program (no special command needed)
./program

# For more detailed output
ASAN_OPTIONS=verbosity=2 ./program

# Write the report to asan.log.<pid> instead of stderr
ASAN_OPTIONS=log_path=asan.log ./program
```

Memory Sanitizer (MSAN)

MSAN detects uses of uninitialized memory.

```shell
# Compile with MSAN (clang only)
clang -fsanitize=memory -g program.c -o program

# Run the program
./program
```

GDB Techniques

GNU Debugger (GDB) can help debug memory issues.

```
# Start debugging
gdb ./your_program

# Break on memory allocation/deallocation
(gdb) break malloc
(gdb) break free

# Run the program until a breakpoint, watchpoint, or crash
(gdb) run

# Set watchpoint on a memory address (the address must be cast to a pointer type)
(gdb) watch *(int *) 0x12345678

# Examine memory
(gdb) x/10xw 0x12345678    # Show 10 words in hex at address

# Print backtrace when program crashes
(gdb) backtrace
```

Electric Fence

Electric Fence helps detect buffer overflows and underflows.

```shell
# Link with Electric Fence
gcc program.c -lefence -o program

# Run the program normally
./program
```

Debug Allocators

Custom memory allocators can help debug memory issues.

```c
// Simple debug wrapper for malloc/free
#define DEBUG_MEMORY

#include <stdlib.h>

#ifdef DEBUG_MEMORY
#include <stdio.h>

// These helpers call the real malloc/free because the macros below are defined after them
void* debug_malloc(size_t size, const char* file, int line) {
    void* ptr = malloc(size);
    printf("Allocated %zu bytes at %p (%s:%d)\n", size, ptr, file, line);
    return ptr;
}

void debug_free(void* ptr, const char* file, int line) {
    printf("Freeing memory at %p (%s:%d)\n", ptr, file, line);
    free(ptr);
}

#define malloc(size) debug_malloc(size, __FILE__, __LINE__)
#define free(ptr) debug_free(ptr, __FILE__, __LINE__)
#endif

// Usage in code remains the same
void function() {
    int* p = malloc(sizeof(int) * 10);
    // ...
    free(p);
}
```
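The wrapper above logs each call. A variant that counts allocations still outstanding can report leaks at shutdown; a sketch with hypothetical names, which is not thread-safe and only tracks calls made through these functions:

```c
#include <stdio.h>
#include <stdlib.h>

// Number of allocations made through counting_malloc and not yet freed.
static long outstanding_allocations = 0;

void *counting_malloc(size_t size) {
    void *ptr = malloc(size);
    if (ptr != NULL) outstanding_allocations++;
    return ptr;
}

void counting_free(void *ptr) {
    if (ptr != NULL) outstanding_allocations--;  // free(NULL) is a no-op, so it is not counted
    free(ptr);
}

// Call at shutdown to report leaks; returns the number of unfreed allocations.
long report_leaks(void) {
    if (outstanding_allocations != 0) {
        fprintf(stderr, "leak check: %ld allocation(s) never freed\n", outstanding_allocations);
    }
    return outstanding_allocations;
}
```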

Best Practices for Memory Debugging

- Use Multiple Tools: Different tools catch different types of issues.

- Enable Compiler Warnings: Use `-Wall -Wextra -Werror` flags.

- Regular Testing: Run memory checks frequently during development.

- Debug Builds: Include debug symbols (`-g`) and disable optimizations (`-O0`) for debugging.

- Defensive Programming: Check return values from memory functions, validate pointers before use.

- Consistent Conventions: Establish clear ownership rules for memory in your codebase.

Advanced Memory Management Techniques

Memory Alignment

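Memory alignment refers to placing data at memory addresses that are multiples of the type’s alignment requirement (for scalar types this is usually the type’s size, e.g. 8 bytes for a double on x86-64). Proper alignment improves performance and is required by some architectures.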

```c
// Aligned allocation with POSIX posix_memalign
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

void alignment_example() {
    // Allocate 1024 bytes aligned to a 64-byte boundary
    void* aligned_ptr = NULL;
    int result = posix_memalign(&aligned_ptr, 64, 1024);
    if (result == 0) {
        printf("Allocated at %p\n", aligned_ptr);
        // Check alignment
        printf("Is 64-byte aligned: %s\n",
               ((uintptr_t)aligned_ptr % 64 == 0) ? "Yes" : "No");
        free(aligned_ptr);
    }
}
```

```cpp
// C++17 aligned allocation
#include <new>

void cpp_alignment_example() {
    // Allocate an int array with 32-byte alignment
    int* aligned_array = static_cast<int*>(::operator new(100 * sizeof(int), std::align_val_t{32}));
    // Use the memory
    aligned_array[0] = 42;
    // Deallocate with matching alignment
    ::operator delete(aligned_array, std::align_val_t{32});
}
```
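C11 also provides a standard aligned allocator, `aligned_alloc(alignment, size)`, whose result is released with ordinary `free()`. A sketch, assuming a C11 library that supports it (MSVC does not; it offers `_aligned_malloc` instead); `aligned_alloc_example` is a hypothetical name:

```c
#include <stdint.h>
#include <stdlib.h>

// C11 standard aligned allocation. Keep size a multiple of the alignment:
// C11 required this, and it remains the portable choice.
// Returns 1 if the block is 64-byte aligned, 0 otherwise or on failure.
int aligned_alloc_example(void) {
    void *block = aligned_alloc(64, 1024);  // 1024 = 16 * 64
    if (block == NULL) return 0;
    int is_aligned = ((uintptr_t)block % 64) == 0;
    free(block);  // memory from aligned_alloc is released with ordinary free()
    return is_aligned;
}
```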

Memory Pools

Memory pools (also known as memory arenas) preallocate a large block of memory and manage smaller allocations within it. This reduces fragmentation and improves performance for many small allocations.

```c
#include <stdlib.h>
#include <string.h>

// Simple memory pool implementation
typedef struct {
    char* memory;
    size_t size;
    size_t used;
} MemoryPool;

MemoryPool* create_pool(size_t size) {
    MemoryPool* pool = (MemoryPool*)malloc(sizeof(MemoryPool));
    if (pool) {
        pool->memory = (char*)malloc(size);
        if (!pool->memory) {
            free(pool);
            return NULL;
        }
        pool->size = size;
        pool->used = 0;
    }
    return pool;
}

void* pool_alloc(MemoryPool* pool, size_t size) {
    // Round each request up to a multiple of 8 so later allocations stay 8-byte aligned
    // (malloc already returns suitably aligned memory for the pool base)
    size_t aligned_size = (size + 7) & ~(size_t)7;
    if (pool->used + aligned_size <= pool->size) {
        void* ptr = pool->memory + pool->used;
        pool->used += aligned_size;
        return ptr;
    }
    return NULL;  // Out of pool memory
}

void destroy_pool(MemoryPool* pool) {
    if (pool) {
        free(pool->memory);
        free(pool);
    }
}

// Usage
void pool_example() {
    MemoryPool* pool = create_pool(1024);
    if (!pool) return;
    // Allocate from pool
    char* str1 = (char*)pool_alloc(pool, 100);
    int* numbers = (int*)pool_alloc(pool, 10 * sizeof(int));
    // Use the memory (pool_alloc returns NULL once the pool is exhausted)
    if (str1 && numbers) {
        strcpy(str1, "Test string");
        numbers[0] = 42;
    }
    // No need to free individual allocations
    destroy_pool(pool);  // Frees all pool memory at once
}
```

Memory Mapping

Memory mapping allows files or devices to be mapped directly into memory, which can be more efficient than reading and writing using standard I/O functions.

```c
#include <sys/mman.h>
#include <fcntl.h>
#include <unistd.h>
#include <string.h>

void mmap_example() {
    // Create and write to a file
    int fd = open("testfile.txt", O_RDWR | O_CREAT | O_TRUNC, 0644);
    if (fd == -1) return;

    // Set file size (touching mapped pages beyond the end of the file raises SIGBUS)
    size_t size = 4096;
    if (ftruncate(fd, (off_t)size) == -1) {
        close(fd);
        return;
    }

    // Map the file into memory
    void* mapped = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (mapped == MAP_FAILED) {
        close(fd);
        return;
    }

    // Write to the mapped memory (MAP_SHARED carries the writes through to the file)
    strcpy((char*)mapped, "This is written through memory mapping");

    // Sync changes to disk
    msync(mapped, size, MS_SYNC);

    // Unmap and close
    munmap(mapped, size);
    close(fd);
}
```
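`mmap` can also map memory that is not backed by any file, by passing `MAP_ANONYMOUS` and a file descriptor of -1; the kernel supplies zero-filled pages. A sketch assuming Linux or another system that defines `MAP_ANONYMOUS`; the helper names are hypothetical:

```c
#define _DEFAULT_SOURCE  // exposes MAP_ANONYMOUS in glibc's <sys/mman.h>
#include <stddef.h>
#include <sys/mman.h>

// Maps `size` bytes of zero-filled memory not backed by any file.
// Returns NULL on failure; release with munmap(ptr, size).
void *map_anonymous(size_t size) {
    void *ptr = mmap(NULL, size, PROT_READ | PROT_WRITE,
                     MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return ptr == MAP_FAILED ? NULL : ptr;
}

// Demo: returns 1 if a fresh 1MB mapping starts zeroed and is writable.
int anonymous_mapping_demo(void) {
    size_t size = 1024 * 1024;
    unsigned char *mem = map_anonymous(size);
    if (mem == NULL) return 0;
    int ok = mem[0] == 0 && mem[size - 1] == 0;  // anonymous pages start zero-filled
    mem[0] = 42;
    ok = ok && mem[0] == 42;
    munmap(mem, size);
    return ok;
}
```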

Custom Allocators

Custom memory allocators can be tailored to specific application needs, often providing better performance than general-purpose allocators.

```cpp
#include <algorithm>
#include <cstddef>
#include <new>

// Simple C++ pool allocator for fixed-size objects of type T.
// allocate() hands out raw, uninitialized storage: construct objects in it
// with placement new, and destroy them before calling deallocate().
template <typename T, std::size_t BlockSize = 4096>
class PoolAllocator {
private:
    // A free slot stores a pointer to the next free slot (intrusive free list)
    struct ObjectNode {
        ObjectNode* next;
    };

    // Each slot must be large enough and aligned for both a T and an ObjectNode
    static constexpr std::size_t slotAlign = std::max(alignof(T), alignof(ObjectNode));
    static constexpr std::size_t slotSize =
        (std::max(sizeof(T), sizeof(ObjectNode)) + slotAlign - 1) / slotAlign * slotAlign;
    static constexpr std::size_t objectsPerBlock = BlockSize / slotSize;
    static_assert(objectsPerBlock > 0, "BlockSize is too small to hold one object");

    struct Block {
        alignas(slotAlign) unsigned char data[BlockSize];
        Block* next;
    };

    Block* currentBlock = nullptr;
    ObjectNode* freeList = nullptr;

public:
    PoolAllocator() = default;
    PoolAllocator(const PoolAllocator&) = delete;  // copies would free the same blocks twice
    PoolAllocator& operator=(const PoolAllocator&) = delete;

    ~PoolAllocator() {
        // Release every block; objects still in use are not destroyed
        while (currentBlock) {
            Block* next = currentBlock->next;
            delete currentBlock;
            currentBlock = next;
        }
    }

    T* allocate() {
        if (!freeList) {
            // Allocate a new block and link all of its slots into the free list
            Block* newBlock = new Block;
            newBlock->next = currentBlock;
            currentBlock = newBlock;
            for (std::size_t i = 0; i < objectsPerBlock; ++i) {
                ObjectNode* node = reinterpret_cast<ObjectNode*>(newBlock->data + i * slotSize);
                node->next = (i + 1 < objectsPerBlock)
                    ? reinterpret_cast<ObjectNode*>(newBlock->data + (i + 1) * slotSize)
                    : nullptr;
            }
            freeList = reinterpret_cast<ObjectNode*>(newBlock->data);
        }
        // Take a slot from the front of the free list
        ObjectNode* result = freeList;
        freeList = freeList->next;
        return reinterpret_cast<T*>(result);
    }

    void deallocate(T* object) {
        if (!object) return;
        // Push the slot back onto the free list
        ObjectNode* node = reinterpret_cast<ObjectNode*>(object);
        node->next = freeList;
        freeList = node;
    }
};

// Usage example
class MyClass {
    int data[25];  // 100 bytes with 4-byte int
public:
    void setValue(int index, int value) {
        if (index >= 0 && index < 25) data[index] = value;
    }
};

void custom_allocator_example() {
    PoolAllocator<MyClass> pool;
    MyClass* objects[100];

    // Allocate 100 objects, constructing each one in its pool slot
    for (int i = 0; i < 100; ++i) {
        objects[i] = new (pool.allocate()) MyClass();
        objects[i]->setValue(0, i);
    }

    // Destroy the first 50 objects and return their slots to the pool
    for (int i = 0; i < 50; ++i) {
        objects[i]->~MyClass();
        pool.deallocate(objects[i]);
        objects[i] = nullptr;
    }

    // Allocate more - these reuse the freed slots
    for (int i = 0; i < 25; ++i) {
        objects[i] = new (pool.allocate()) MyClass();
        objects[i]->setValue(0, i + 1000);
    }

    // Pool destructor releases all blocks (MyClass is trivially destructible)
}
```

Memory Optimization Techniques

Reducing Memory Footprint

```c
// Struct padding and packing
// (sizes assume a typical 64-bit platform where double is 8-byte aligned)
#include <stdio.h>

// Inefficient structure layout
struct Inefficient {
    char a;    // 1 byte
               // 7 bytes padding
    double b;  // 8 bytes
    char c;    // 1 byte
               // 7 bytes padding
};             // Total: 24 bytes

// Optimized structure layout
struct Optimized {
    double b;  // 8 bytes
    char a;    // 1 byte
    char c;    // 1 byte
               // 6 bytes padding
};             // Total: 16 bytes

// Packed structure using a GCC/Clang attribute (removes padding, but unaligned
// member access can be slower, and pointers to packed members may be misaligned)
struct Packed {
    char a;
    double b;
    char c;
} __attribute__((packed));  // Total: 10 bytes

void structure_layout() {
    printf("Size of Inefficient: %zu bytes\n", sizeof(struct Inefficient));
    printf("Size of Optimized: %zu bytes\n", sizeof(struct Optimized));
    printf("Size of Packed: %zu bytes\n", sizeof(struct Packed));
}
```
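Rather than working out padding by hand, `offsetof` from `<stddef.h>` reports where the compiler actually placed each member. A sketch with hypothetical struct names; the offsets in the comments assume a typical platform with 4-byte `int` and 2-byte `short`:

```c
#include <stddef.h>
#include <stdio.h>

// Same fields, two orders.
struct Header {
    char kind;    // offset 0
    int length;   // typically offset 4 (3 padding bytes before it)
    short flags;  // typically offset 8
};                // typically 12 bytes (2 trailing padding bytes)

struct CompactHeader {
    int length;   // offset 0
    short flags;  // typically offset 4
    char kind;    // typically offset 6
};                // typically 8 bytes (1 trailing padding byte)

// Prints each member's offset and the total size of both layouts.
void print_header_layout(void) {
    printf("Header:        kind=%zu length=%zu flags=%zu size=%zu\n",
           offsetof(struct Header, kind), offsetof(struct Header, length),
           offsetof(struct Header, flags), sizeof(struct Header));
    printf("CompactHeader: length=%zu flags=%zu kind=%zu size=%zu\n",
           offsetof(struct CompactHeader, length), offsetof(struct CompactHeader, flags),
           offsetof(struct CompactHeader, kind), sizeof(struct CompactHeader));
}
```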

Lazy Allocation

```c
#include <stdio.h>
#include <stdlib.h>

// Only allocate memory when needed
void lazy_allocation_example(const char* filename) {
    // Potentially large buffer - only allocate if needed
    char* buffer = NULL;
    size_t buffer_size = 0;

    // Check file size first
    FILE* file = fopen(filename, "rb");
    if (file) {
        fseek(file, 0, SEEK_END);
        long size = ftell(file);  // -1 on error, so the size > 0 check also covers failure
        rewind(file);
        if (size > 0) {
            // Only now allocate the buffer
            buffer_size = (size_t)size;
            buffer = (char*)malloc(buffer_size);
            if (buffer) {
                // Read the file content; keep the number of bytes actually read
                buffer_size = fread(buffer, 1, buffer_size, file);
            }
        }
        fclose(file);
    }

    // Use buffer if allocated
    if (buffer) {
        // Process buffer_size bytes of buffer...
        free(buffer);
    }
}
```

Memory-Mapped I/O

Using memory mapping for large files can be more efficient than traditional read/write operations:

```c
#include <sys/mman.h>
#include <fcntl.h>
#include <unistd.h>

void process_large_file(const char* filename) {
    int fd = open(filename, O_RDONLY);
    if (fd == -1) return;

    // Get file size
    off_t file_size = lseek(fd, 0, SEEK_END);
    lseek(fd, 0, SEEK_SET);
    if (file_size <= 0) {  // mmap cannot map an empty file, and lseek returns -1 on error
        close(fd);
        return;
    }

    // Map file into memory
    void* file_data = mmap(NULL, (size_t)file_size, PROT_READ, MAP_PRIVATE, fd, 0);
    if (file_data != MAP_FAILED) {
        // Process file data directly from memory
        // ...
        // Unmap when done
        munmap(file_data, (size_t)file_size);
    }
    close(fd);
}
```

Shared Memory

Shared memory allows multiple processes to access the same memory region:

```c
#include <sys/mman.h>
#include <fcntl.h>
#include <unistd.h>
#include <string.h>

// On older glibc versions, link with -lrt for shm_open
void shared_memory_example() {
    // Create shared memory object
    int fd = shm_open("/myshm", O_CREAT | O_RDWR, 0666);
    if (fd == -1) return;

    // Set size
    size_t size = 4096;
    if (ftruncate(fd, (off_t)size) == -1) {
        close(fd);
        return;
    }

    // Map into memory
    void* ptr = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd);  // The mapping stays valid after the descriptor is closed
    if (ptr == MAP_FAILED) {
        return;
    }

    // Write to shared memory
    strcpy((char*)ptr, "Data for other processes");

    // Keep mapped for other processes to access
    // Eventually: munmap(ptr, size);
    //             shm_unlink("/myshm");
}
```

- Stack vs Heap
  - Stack: Fast, automatic, limited size
  - Heap: Flexible, manual management, potential for leaks

- Common Memory Issues
  - Stack overflow: Excessive recursion or large local variables
  - Memory leaks: Forgetting to free allocated memory
  - Use after free: Accessing memory after deallocation
  - Buffer overflow: Writing beyond allocated boundaries

- Debugging Tools
  - Valgrind: Comprehensive memory analysis
  - ASAN: Fast memory error detection
  - GDB: Interactive debugging
  - Custom allocators: Specialized memory tracking

Memory behavior can vary significantly across platforms:

- 32-bit vs 64-bit systems: Maximum addressable memory differs (4GB vs theoretically 16 exabytes)

- Default stack sizes: Linux (~8MB), Windows (~1MB), may need adjustment for deep recursion

- Memory allocation implementations: Different malloc implementations have different performance characteristics (e.g., glibc malloc, jemalloc, tcmalloc)

- Hardware considerations: Cache line sizes, memory alignment requirements, and NUMA architectures affect optimal memory usage patterns

Cross-platform code should be tested on all target platforms and avoid assumptions about memory behavior.

Conclusion

Understanding memory layout and management is fundamental to writing efficient, reliable C and C++ code. The concepts covered in this article—from stack and heap allocation to debugging memory issues—form the foundation of effective memory management.

Key takeaways include:

- Know your memory regions: The text, data, BSS, heap, and stack segments each serve specific purposes in a program’s memory layout.

- Choose the right allocation strategy: Stack allocation is fast and automatic but limited in size and scope. Heap allocation is flexible but requires careful management.

- Prevent memory issues proactively: Many common problems like memory leaks, buffer overflows, and use-after-free bugs can be prevented with good coding practices and proper tooling.

- Use debugging tools effectively: Tools like Valgrind, ASAN, and GDB are invaluable for identifying and resolving memory issues.

- Consider advanced techniques when appropriate: Memory pools, custom allocators, and memory-mapped I/O can significantly improve performance for specific use cases.

- Embrace modern C++ practices: RAII, smart pointers, and standard containers help automate memory management and reduce errors.

While modern languages increasingly abstract away direct memory management, understanding these concepts remains valuable. Even garbage-collected languages can suffer from memory-related issues, and knowledge of underlying memory principles helps diagnose problems across all programming environments.

For C and C++ developers especially, mastering memory management isn’t just about avoiding bugs—it’s about writing code that’s efficient, reliable, and maintainable. As systems grow more complex and performance demands increase, this foundational knowledge becomes ever more valuable.
