
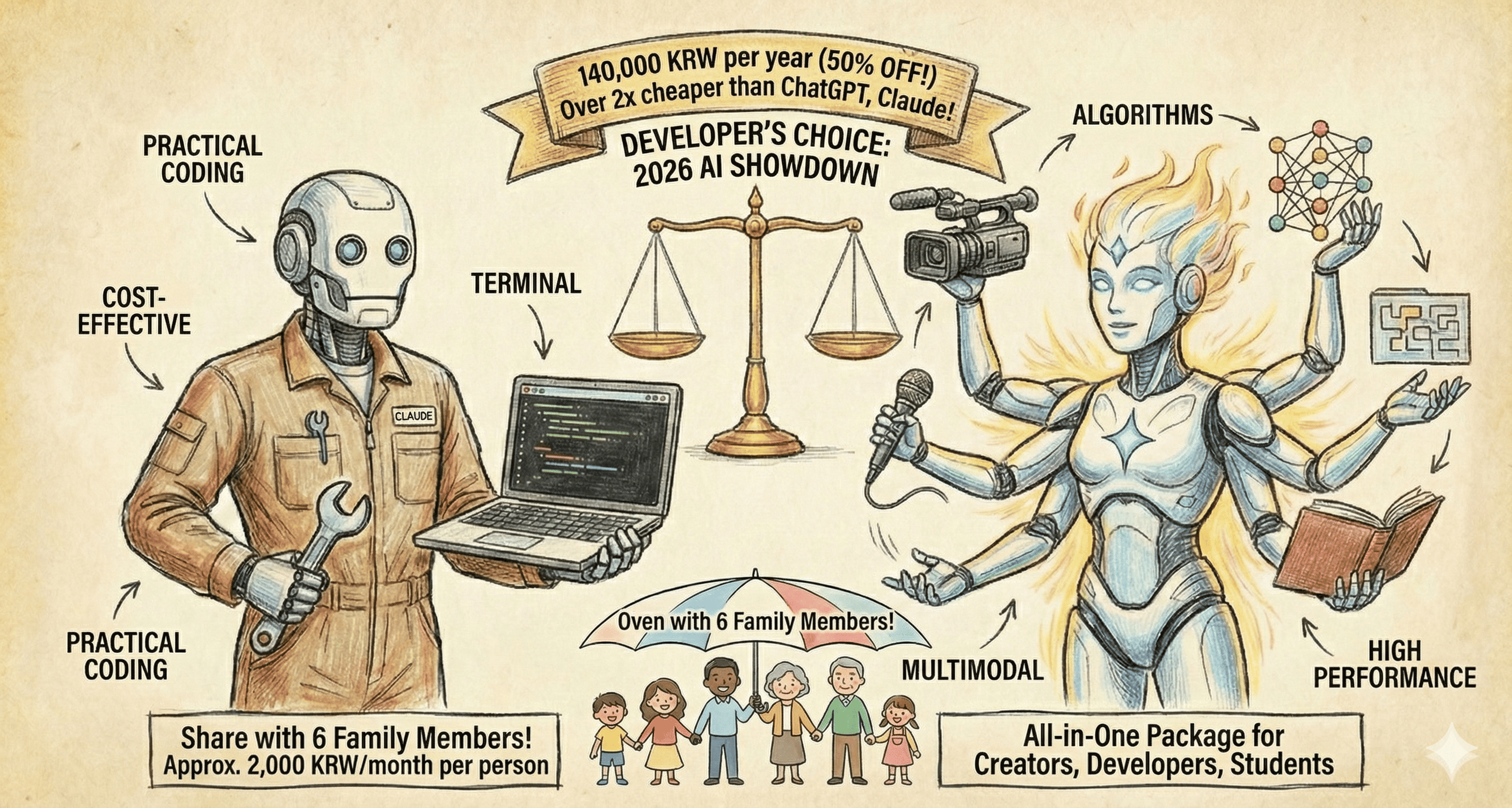

Claude 4.5 Sonnet vs Gemini 3 Pro: The Ultimate 2026 AI Model Showdown

Comprehensive developer-focused comparison of the two most powerful AI models reshaping software engineering

Table of Contents

- Overview

- Core Specifications Comparison

- Coding Performance: Real-World Benchmarks

- Agentic Capabilities Analysis

- Multimodal Excellence

- Reasoning & Mathematical Prowess

- Frontend Development Showdown

- Pricing & Value Analysis

- Context Window & Performance

- Real-World Use Cases

- 2026 Industry Outlook

- Final Verdict

- References

Overview

In late 2025, two AI models emerged as leaders in the field: Anthropic’s Claude 4.5 Sonnet (released September 29, 2025) and Google’s Gemini 3 Pro (released November 18, 2025). Both are major steps forward in capability, but they excel in fundamentally different domains.

This comprehensive analysis examines these models from a developer and DevOps engineer perspective, focusing on practical applications rather than marketing hype. We’ll explore real benchmark data, pricing structures, and concrete use cases to help you make informed decisions for your projects and workflows.

Key Takeaway: Claude 4.5 Sonnet dominates practical software engineering tasks with superior cost efficiency, while Gemini 3 Pro leads in algorithmic reasoning, multimodal understanding, and frontier research applications.

Core Specifications Comparison

Claude 4.5 Sonnet

| Specification | Details |
|---|---|
| Release Date | September 29, 2025 |
| Training Data Cutoff | April 2025 |
| Context Window | 200K tokens (standard), 1M tokens (beta) |
| Pricing | $3/M input tokens, $15/M output tokens |
| Core Strengths | Coding excellence, agentic workflows, computer use |
| Availability | Claude.ai, API, Amazon Bedrock, Google Cloud Vertex AI |

Gemini 3 Pro

| Specification | Details |
|---|---|
| Release Date | November 18, 2025 |
| Training Data Cutoff | January 2025 |
| Context Window | 1M tokens (standard input), 64K tokens (output) |
| Pricing | $127/M input tokens, $12/M output tokens |
| Core Strengths | Multimodal reasoning, algorithmic coding, PhD-level reasoning |
| Availability | Gemini app, Google AI Studio, Vertex AI |

Quick Comparison Matrix

| Category | Claude 4.5 Sonnet | Gemini 3 Pro |
|---|---|---|
| Primary Focus | Coding & Automation | Multimodal & Research |
| Cost Efficiency | Excellent (about 42× cheaper on input tokens) | Premium pricing |
| Context Window | 200K standard | 1M standard |
| Best For | DevOps, Backend, Infrastructure | Research, Frontend, Algorithms |

Coding Performance: Real-World Benchmarks

SWE-bench Verified: Real GitHub Issue Resolution

This benchmark measures AI’s ability to fix actual bugs from real open-source projects, requiring complete understanding of codebases, bug reproduction, implementation, and test passage.

Results & Analysis

| Model | Score | Key Insights |
|---|---|---|
| Claude 4.5 Sonnet | 77.2% | Highest reported score at the time of release |
| Gemini 3 Pro | 76.2% | Near parity, 1.0 percentage point behind Claude |
| GPT-5.1 | 76.3% | Competitive but slightly behind Claude |

Developer Perspective:

- Claude’s 77.2% represents the gold standard for real-world bug fixing

- Replit reported 0% error rate on their internal code editing benchmark (down from 9% on Sonnet 4)

- The 1-percentage-point difference between Claude and Gemini is negligible for most practical applications

- Both models are production-ready for automated bug fixing workflows
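To put the one-point gap in perspective, here is a rough sketch. SWE-bench Verified contains 500 tasks, so one percentage point is about five issues. The snippet treats each task as an independent pass/fail trial, which is a simplifying assumption, and the function names are illustrative only.

```python
import math

SWE_BENCH_VERIFIED_TASKS = 500  # size of the Verified subset


def solved_tasks(score_pct: float, n: int = SWE_BENCH_VERIFIED_TASKS) -> int:
    """Approximate number of tasks solved at a given percentage score."""
    return round(score_pct / 100 * n)


def standard_error_pp(score_pct: float, n: int = SWE_BENCH_VERIFIED_TASKS) -> float:
    """Binomial standard error of the score, in percentage points."""
    p = score_pct / 100
    return 100 * math.sqrt(p * (1 - p) / n)


claude, gemini = 77.2, 76.2
print("Gap in solved tasks:", solved_tasks(claude) - solved_tasks(gemini))
print("Standard error (pp):", round(standard_error_pp(claude), 1))
```

Under these assumptions the gap is about five tasks, and the sampling noise on a single score is close to two percentage points, so a one-point lead is within the noise of one run.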

LiveCodeBench Pro: Algorithmic Coding Excellence

This benchmark evaluates competitive programming skills using Codeforces-style algorithmic challenges, measuring pure problem-solving capability.

Results & Analysis

| Model | Elo Rating | Performance Level |
|---|---|---|
| Gemini 3 Pro | 2,439 | Grandmaster-tier, algorithmic dominance |
| GPT-5.1 | 2,243 | Strong but 196 points behind |
| Claude 4.5 Sonnet | 1,418 | Competent but 1,021 points behind Gemini |

Developer Perspective:

- Gemini’s massive lead (1,021 Elo points, a rating about 72% higher than Claude’s) is decisive for algorithm work

- Ideal for coding interviews, competitive programming, and algorithmic optimization

- Claude focuses more on practical engineering than pure algorithmic puzzles

- For LeetCode-style preparation, Gemini is the clear winner
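Elo gaps are easier to read as expected head-to-head results than as percentages. The sketch below uses the standard logistic Elo formula with a 400-point scale. Whether LiveCodeBench Pro's ratings follow exactly this formula is an assumption, so treat the output as an illustration rather than an official figure.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B under the standard Elo model (0 to 1)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Ratings from the table above.
gemini, gpt, claude = 2439, 2243, 1418

print(f"Gemini vs Claude: {elo_expected_score(gemini, claude):.3f}")
print(f"Gemini vs GPT-5.1: {elo_expected_score(gemini, gpt):.3f}")
```

On this reading, a 1,021-point gap means the higher-rated side is expected to win almost every head-to-head comparison, while the 196-point gap to GPT-5.1 means winning roughly three in four.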

Terminal-Bench 2.0: Agentic Terminal Workflows

Measures AI’s ability to operate in sandboxed Linux environments, using terminals and file editors to complete complex multi-step tasks.

Results & Analysis

| Model | Score | Achievement |
|---|---|---|
| Claude 4.5 Sonnet | 61.3% | First model to break 60% barrier |
| GPT-5 Codex | 58.8% | Strong performance, trails by 2.5 percentage points |
| Gemini 3 Pro | 54.2% | Competitive but behind Claude |

Developer Perspective:

- Claude excels at CLI-based automation and scripting workflows

- Superior for DevOps tasks: deployment scripts, infrastructure management, debugging

- Extended Thinking mode enables complex multi-step terminal operations

- Gemini’s 7.1-percentage-point deficit is significant for terminal-heavy workflows

Agentic Capabilities Analysis

Long-Horizon Autonomous Operation

Both models demonstrate unprecedented ability to maintain context and execute complex tasks over extended periods.

Claude 4.5 Sonnet Strengths

| Capability | Details |
|---|---|
| Sustained Operation | Demonstrated 30+ hours of continuous autonomous work |
| Computer Use | OSWorld benchmark: 61.4% (highest recorded score) |
| Tool Integration | Seamless bash, file editing, browser automation |
| Multi-Step Reasoning | Maintains coherence across complex workflow sequences |

Gemini 3 Pro Strengths

| Benchmark | Gemini Score | Claude Score | Winner |
|---|---|---|---|
| τ2-bench | 85.4% | 84.7% | Gemini |
| Vending-Bench 2 | $5,478.16 | $3,838.74 | Gemini |
| Tool Use | Excellent | Excellent | Tie |

Developer Perspective:

- Complex Automation: Claude’s Computer Use capabilities are unmatched

- Long-Term Decisions: Gemini shows slightly better stability in extended operations

- Multi-Agent Orchestration: Both excel, choose based on specific workflow needs

- Production Readiness: Claude has more mature tooling for deployment

Multimodal Excellence

Comprehensive Multimodal Benchmark Comparison

| Benchmark | Gemini 3 Pro | Claude 4.5 Sonnet | Difference (percentage points) |
|---|---|---|---|
| MMMU-Pro | 81% | 68% | +13 (Gemini) |
| Video-MMMU | 87.6% | 77.8% | +9.8 (Gemini) |
| ScreenSpot-Pro | 72.7% | 36.2% | +36.5 (Gemini) |
| CharXiv Reasoning | 81.4% | 68.5% | +12.9 (Gemini) |

Gemini’s Multimodal Advantages

Native Integration:

- Text, images, video, audio, and code processing in unified model

- No separate pipelines or model switching required

- Consistent reasoning across all modalities

Video Understanding:

- Best-in-class video comprehension (87.6% Video-MMMU)

- Frame-by-frame analysis with temporal coherence

- Action recognition and scene understanding

Document Analysis:

- Superior chart interpretation (81.4% CharXiv)

- Complex diagram reasoning

- Multi-page document synthesis

Claude’s Focused Approach

Text & Image Priority:

- Optimized for text-heavy workflows

- Strong but not dominant in multimodal tasks

- Better for traditional document processing

Use Case Fit:

- Code documentation and technical writing

- Business documents and reports

- Text-centric development workflows

Developer Perspective:

- UI/UX Development: Gemini is essential (72.7% vs 36.2% on ScreenSpot-Pro)

- Video Analysis: Gemini’s 87.6% makes it mandatory for video-heavy applications

- Traditional Development: Claude’s text focus is sufficient and more cost-effective

- Research Applications: Gemini’s multimodal synthesis capabilities are game-changing

Reasoning & Mathematical Prowess

PhD-Level Reasoning: Humanity’s Last Exam

This benchmark tests reasoning across philosophy, mathematics, biology, and other advanced domains, requiring genuine understanding beyond pattern matching.

Performance Breakdown

| Model Configuration | Score | Significance |
|---|---|---|
| Gemini 3 Pro (Standard) | 37.5% | About 2.7× Claude's score |
| Gemini 3 Deep Think | 41.0% | Extended reasoning mode boost |
| Claude 4.5 Sonnet | 13.7% | Competent but significantly behind |
| GPT-5.1 | 26.5% | Between Claude and Gemini |

Expert-Level Science: GPQA Diamond

Measures performance on graduate-level physics, chemistry, and biology questions that challenge human experts.

Results

| Model | Score | Context |
|---|---|---|
| Gemini 3 Pro | 91.9% | Exceeds human expert average (~89.8%) |
| Gemini 3 Deep Think | 93.8% | Superhuman performance level |
| GPT-5.1 | 88.1% | Strong but below human experts |
| Claude 4.5 Sonnet | 83.4% | Competent scientific reasoning |

Frontier Mathematics: MathArena Apex

The most challenging mathematical reasoning benchmark available, testing novel problem-solving abilities.

Performance Hierarchy

| Model | Score | Analysis |
|---|---|---|
| Gemini 3 Pro | 23.4% | Only model showing meaningful progress |
| Claude 4.5 Sonnet | 1.6% | Limited capability on hardest problems |
| GPT-5.1 | 1.0% | Similar limitations to Claude |

Developer Perspective:

- Scientific Computing: Gemini is mandatory for advanced mathematical modeling

- Research Applications: 91.9% GPQA score enables graduate-level research assistance

- Complex Problem Solving: Gemini’s reasoning depth is transformative

- Practical Engineering: Claude remains excellent for standard development tasks

- Cost Consideration: Gemini’s reasoning premium comes with a roughly 42× higher input-token price

Frontend Development Showdown

Development Philosophy Comparison

| Aspect | Claude 4.5 Sonnet | Gemini 3 Pro |
|---|---|---|
| Design Approach | Functional and pragmatic | Visually sophisticated and polished |
| Iteration Speed | Fast iterative edits | Slower but higher quality output |
| Workflow Fit | IDE-style rapid development | Design-first creative workflow |
| Output Quality | Production-functional | Production-polished |

Real-World Case Studies

Case Study 1: Basic CRUD Application

Task: Build an internal admin dashboard

Claude Result: Clean, functional, fast delivery

Gemini Result: Polished UI but took longer

Winner: Claude (speed and pragmatism)

Case Study 2: Marketing Landing Page

Task: Create visually stunning product showcase

Claude Result: Functional but basic styling

Gemini Result: Magazine-quality design with animations

Winner: Gemini (visual excellence)

Case Study 3: Interactive Data Visualization

Task: Build canvas-based data explorer

Claude Result: Working but simple visuals

Gemini Result: WebGL-powered, cinematic quality

Winner: Gemini (advanced graphics)

Case Study 4: Figma to Code Conversion

Task: Convert design mockup to HTML/CSS

Claude Result: Accurate but plain implementation

Gemini Result: Pixel-perfect with hover states

Winner: Gemini (design fidelity)

Recommendation Framework

Choose Claude 4.5 Sonnet For:

- Internal tools and admin panels

- MVP and prototype development

- Backend-heavy applications with simple UIs

- Time-sensitive projects

- Budget-conscious development

Choose Gemini 3 Pro For:

- Customer-facing applications

- Marketing and promotional sites

- Design-system implementation

- Interactive experiences (animations, WebGL)

- Brand-critical visual quality

Pricing & Value Analysis

Base Pricing Comparison

| Model | Input | Output | Multiplier |
|---|---|---|---|
| Claude 4.5 Sonnet | $3/M tokens | $15/M tokens | Baseline |
| Gemini 3 Pro | $127/M tokens | $12/M tokens | 42× input cost |

Real-World Usage Scenarios

Scenario 1: Daily Development Assistant (100M tokens/month)

Average Usage Pattern:

- Input: 60M tokens (code reading, context)

- Output: 40M tokens (code generation)

Claude 4.5 Sonnet:

- Input: 60M × $3 = $180

- Output: 40M × $15 = $600

- Total: $780/month

Gemini 3 Pro:

- Input: 60M × $127 = $7,620

- Output: 40M × $12 = $480

- Total: $8,100/month

Savings with Claude: $7,320/month (90% cost reduction)

Scenario 2: Large-Scale Code Analysis (1B tokens/month)

Enterprise-Level Processing:

- Input: 700M tokens (codebase analysis)

- Output: 300M tokens (reports, suggestions)

Claude 4.5 Sonnet:

- Input: 700M × $3 = $2,100

- Output: 300M × $15 = $4,500

- Total: $6,600/month

Gemini 3 Pro:

- Input: 700M × $127 = $88,900

- Output: 300M × $12 = $3,600

- Total: $92,500/month

Savings with Claude: $85,900/month (93% cost reduction)

Scenario 3: Research & Analysis (50M tokens/month)

Academic/Research Usage:

- Input: 35M tokens (papers, data)

- Output: 15M tokens (analysis, reports)

Claude 4.5 Sonnet:

- Input: 35M × $3 = $105

- Output: 15M × $15 = $225

- Total: $330/month

Gemini 3 Pro:

- Input: 35M × $127 = $4,445

- Output: 15M × $12 = $180

- Total: $4,625/month

Savings with Claude: $4,295/month (93% cost reduction)

Note: Despite higher cost, Gemini's superior reasoning may justify expense for specialized research applications.
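The three scenarios follow the same arithmetic, so they can be reproduced with a small calculator. This is a minimal sketch using the per-million-token rates quoted in this article. The `Pricing` class and `monthly_cost` function are illustrative names, and the rates should be checked against current provider pricing before being used for budgeting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_m: float   # USD per million input tokens
    output_per_m: float  # USD per million output tokens


# Rates as listed in the Base Pricing Comparison table of this article.
CLAUDE = Pricing(input_per_m=3, output_per_m=15)
GEMINI = Pricing(input_per_m=127, output_per_m=12)


def monthly_cost(pricing: Pricing, input_m: float, output_m: float) -> float:
    """Monthly cost in USD for token volumes given in millions."""
    return pricing.input_per_m * input_m + pricing.output_per_m * output_m


# (input, output) volumes in millions of tokens per month.
scenarios = {
    "Daily development": (60, 40),
    "Large-scale analysis": (700, 300),
    "Research": (35, 15),
}

for name, (inp, out) in scenarios.items():
    claude = monthly_cost(CLAUDE, inp, out)
    gemini = monthly_cost(GEMINI, inp, out)
    print(f"{name}: Claude ${claude:,.0f}, Gemini ${gemini:,.0f}, "
          f"savings {1 - claude / gemini:.0%}")
```

Changing the volumes or rates in one place makes it easy to rerun the comparison when prices move.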

Value Optimization Strategies

Strategy 1: Hybrid Approach

Use Claude for:

- 80% of routine coding tasks

- Infrastructure automation

- DevOps workflows

- Code reviews and refactoring

Use Gemini for:

- 20% specialized tasks

- Complex algorithms

- Multimodal processing

- Research-level reasoning

Potential savings: 60-70% compared to Gemini-only approach
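As a rough check on the hybrid estimate, the sketch below blends the Scenario 1 totals ($780 Claude-only, $8,100 Gemini-only) by workload share. It assumes the 80/20 split applies uniformly to token volume, which real workloads will not match exactly. `blended_cost` is an illustrative helper.

```python
def blended_cost(primary_cost: float, specialist_cost: float, primary_share: float) -> float:
    """Cost when `primary_share` of the workload goes to the primary model."""
    if not 0.0 <= primary_share <= 1.0:
        raise ValueError("primary_share must be between 0 and 1")
    return primary_share * primary_cost + (1.0 - primary_share) * specialist_cost


# Scenario 1 monthly totals from this article.
CLAUDE_ONLY, GEMINI_ONLY = 780, 8100

hybrid = blended_cost(CLAUDE_ONLY, GEMINI_ONLY, primary_share=0.8)
savings = 1 - hybrid / GEMINI_ONLY
print(f"Hybrid: ${hybrid:,.0f}/month, {savings:.0%} below Gemini-only")
```

With a uniform split the saving comes out slightly above the 60-70% range. Routing the more token-heavy tasks to Gemini would pull it back down.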

Strategy 2: Task-Based Routing

Implement intelligent routing:

- Simple queries → Claude

- Algorithm design → Gemini

- UI generation → Gemini

- Backend logic → Claude

- Data analysis → Context-dependent

Optimize cost while maintaining quality
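The routing rules above can be sketched as a lookup table with a fallback. The task-type strings, the `needs_multimodal` hint, and the model labels are all hypothetical names chosen for illustration and are not real API model identifiers.

```python
from enum import Enum


class Model(Enum):
    CLAUDE = "claude-4.5-sonnet"  # hypothetical label, not an API model ID
    GEMINI = "gemini-3-pro"       # hypothetical label, not an API model ID


# Routing table mirroring the list above.
ROUTES = {
    "simple_query": Model.CLAUDE,
    "algorithm_design": Model.GEMINI,
    "ui_generation": Model.GEMINI,
    "backend_logic": Model.CLAUDE,
}


def route(task_type: str, needs_multimodal: bool = False) -> Model:
    """Pick a model for a task type, falling back on a caller-supplied hint."""
    if task_type in ROUTES:
        return ROUTES[task_type]
    # Context-dependent work such as data analysis: send multimodal inputs
    # to Gemini and everything else to the cheaper default.
    return Model.GEMINI if needs_multimodal else Model.CLAUDE


print(route("backend_logic").value)
print(route("data_analysis", needs_multimodal=True).value)
```

A static table keeps routing decisions auditable. A production router would likely add per-request cost and latency signals.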

Cost-Benefit Analysis Framework

| Use Case | Claude ROI | Gemini ROI | Recommendation |
|---|---|---|---|
| Daily Coding | Excellent | Poor | Claude |
| Algorithm Design | Good | Excellent | Gemini |
| DevOps Tasks | Excellent | Good | Claude |
| Scientific Computing | Fair | Excellent | Gemini |
| Frontend Work | Good | Excellent | Context-dependent |

Context Window & Performance

Context Window Comparison

| Specification | Claude 4.5 Sonnet | Gemini 3 Pro |
|---|---|---|
| Standard Input | 200K tokens | 1M tokens (5× larger) |
| Extended Input | 1M tokens (beta) | 1M tokens (standard) |
| Output Tokens | Standard limits | 64K tokens |
| Pages Equivalent | ~600 pages (200K) | ~3,000 pages (1M) |

Practical Context Applications

200K Token Use Cases (Claude Standard):

- Medium-sized monorepo analysis

- Single microservice codebase

- API documentation sets

- Business reports and analysis

1M Token Use Cases (Both Models):

- Entire application codebases

- Complete technical documentation

- Large dataset analysis

- Multi-repository context
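Before choosing a model on context size, it helps to estimate whether a prompt fits at all. The sketch below uses a four-characters-per-token heuristic, which is a rough assumption that varies by tokenizer and content, and reserves some headroom for the response. All names and the example repository size are hypothetical.

```python
CHARS_PER_TOKEN = 4  # rough heuristic; real tokenizers vary by language and content


def estimate_tokens(text: str) -> int:
    """Very rough token estimate from character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def fits(prompt_tokens: int, window: int, reserve_for_output: int = 8_000) -> bool:
    """True if the prompt plus reserved output headroom fits in the window."""
    return prompt_tokens + reserve_for_output <= window


# Window sizes from the comparison table above.
WINDOWS = {"200K window": 200_000, "1M window": 1_000_000}

repo_tokens = 350_000  # hypothetical codebase size
for name, window in WINDOWS.items():
    print(f"{name}: fits={fits(repo_tokens, window)}")
```

For anything close to the limit, use the provider's own token counting instead of a character heuristic.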

Response Speed Analysis

Claude 4.5 Sonnet:

- Faster response times for standard queries

- Optimized for rapid iteration

- Better for interactive development

- Lower latency in production

Gemini 3 Pro:

- Slower on complex reasoning tasks

- Deep Think mode adds significant latency

- Worth the wait for specialized tasks

- Best for batch processing

Developer Perspective:

- Interactive Coding: Claude’s speed advantage is noticeable

- Large Context Analysis: Gemini’s 1M standard token window is superior

- Production APIs: Claude’s lower latency benefits user experience

- Research Workflows: Gemini’s depth justifies longer processing time

Real-World Use Cases

Use Case 1: DevOps Infrastructure Management

Scenario: Managing Kubernetes clusters, CI/CD pipelines, and cloud infrastructure

Why Claude 4.5 Sonnet Wins

| Requirement | Claude Advantage |
|---|---|
| Terminal Proficiency | 61.3% Terminal-Bench (7.1 percentage points ahead of Gemini) |
| Script Generation | Excellent bash, Python, and Terraform code |
| Cost at Scale | About 42× cheaper on input tokens for high-volume automation |
| Tool Integration | Superior file editing and command execution |

Real Implementation

Daily DevOps Workflow with Claude:

1. Infrastructure as Code:

- Generate Terraform modules

- Update Kubernetes manifests

- Create Ansible playbooks

2. CI/CD Optimization:

- Debug Jenkins pipelines

- Optimize GitLab CI configuration

- Fix build failures

3. Monitoring & Debugging:

- Analyze logs and metrics

- Generate alerts and dashboards

- Troubleshoot production issues

4. Documentation:

- Auto-generate runbooks

- Create architecture diagrams (text-based)

- Maintain technical wiki

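Monthly cost with Claude: ~$500

Equivalent with Gemini: ~$21,000 (applying the 42× input-price ratio to the whole bill; Gemini's lower output price narrows the gap for output-heavy workloads)

Savings: ~$20,500/month (98% reduction)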
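How large the cost multiple is depends on the input/output mix, because the 42× figure is the input-price ratio alone. The sketch below computes the overall Gemini-to-Claude cost ratio for a given share of input tokens, using the rates quoted in this article. `cost_ratio` is an illustrative helper.

```python
def cost_ratio(input_share: float) -> float:
    """Gemini/Claude cost ratio for a workload with the given input-token share."""
    if not 0.0 <= input_share <= 1.0:
        raise ValueError("input_share must be between 0 and 1")
    output_share = 1.0 - input_share
    claude = 3 * input_share + 15 * output_share    # USD per million tokens
    gemini = 127 * input_share + 12 * output_share  # USD per million tokens
    return gemini / claude


for share in (0.6, 0.7, 0.9, 1.0):
    print(f"{share:.0%} input tokens: {cost_ratio(share):.1f}x")
```

At the 60/40 mix of Scenario 1 the multiple is about 10×. It approaches 42× only when nearly all tokens are input, as in log-heavy automation with short responses.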

Use Case 2: Competitive Programming & Algorithm Development

Scenario: Preparing for coding interviews, algorithmic optimization, and contest participation

Why Gemini 3 Pro Dominates

| Requirement | Gemini Advantage |
|---|---|
| Algorithm Design | 2,439 Elo (72% higher than Claude) |
| Edge Case Handling | Superior at identifying corner cases |
| Optimization | Better complexity analysis and optimization |
| Mathematical Reasoning | 23.4% MathArena Apex (about 15× Claude's 1.6%) |

Practical Application

Interview Preparation Strategy with Gemini:

1. Problem Solving:

- Understand complex algorithmic problems

- Generate optimal solutions

- Explain time/space complexity

2. Pattern Recognition:

- Identify algorithm families

- Suggest data structure choices

- Optimize brute-force approaches

3. Mock Interviews:

- Simulate technical interviews

- Provide detailed feedback

- Suggest improvement areas

4. Contest Practice:

- Solve Codeforces problems

- Participate in virtual contests

- Learn advanced techniques

Cost justification: the higher price is worth it for:

- FAANG interview preparation

- Algorithmic research

- Competitive programming

Use Case 3: Full-Stack Product Development

Scenario: Building a complete SaaS application with both frontend and backend

Hybrid Strategy Recommendation

| Component | Primary Model | Rationale |
|---|---|---|
| Backend API | Claude | Excellent at REST/GraphQL, database design |
| Frontend UI | Gemini | Superior visual quality and interactivity |
| Database Schema | Claude | Strong at relational and NoSQL design |
| Complex Algorithms | Gemini | Better algorithmic optimization |
| Testing | Claude | Cost-effective test generation |
| Documentation | Claude | Fast and thorough documentation |

Cost-Optimized Workflow

Development Phase Distribution:

Planning & Architecture (10% time):

- Model: Claude

- Tasks: System design, API planning

- Cost: ~$50

Backend Development (40% time):

- Model: Claude

- Tasks: API implementation, business logic

- Cost: ~$300

Frontend Development (30% time):

- Model: Gemini (UI) + Claude (logic)

- Tasks: Component development, styling

- Cost: ~$800 (60% Gemini, 40% Claude)

Algorithm Optimization (10% time):

- Model: Gemini

- Tasks: Performance-critical algorithms

- Cost: ~$500

Testing & Documentation (10% time):

- Model: Claude

- Tasks: Test coverage, API docs

- Cost: ~$50

Total Monthly Cost: ~$1,700

Potential savings vs. Gemini-only: ~$6,000

Quality maintained through strategic model selection
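The phase budgets above can be tallied per model with a few lines. This sketch reads the "60% Gemini, 40% Claude" note as a split of frontend spend, which is an assumption. The dictionary keys and function name are illustrative.

```python
# Phase budgets from the workflow above: (USD per month, percent billed to Gemini).
PHASES = {
    "planning": (50, 0),
    "backend": (300, 0),
    "frontend": (800, 60),    # assumed: 60% of frontend spend goes to Gemini
    "algorithms": (500, 100),
    "testing_docs": (50, 0),
}


def split_by_model(phases: dict[str, tuple[int, int]]) -> tuple[float, float]:
    """Return (claude_spend, gemini_spend) summed over all phases."""
    gemini = sum(cost * pct / 100 for cost, pct in phases.values())
    total = sum(cost for cost, _ in phases.values())
    return total - gemini, gemini


claude_spend, gemini_spend = split_by_model(PHASES)
print(f"Claude ${claude_spend:,.0f} + Gemini ${gemini_spend:,.0f} "
      f"= ${claude_spend + gemini_spend:,.0f}")
```

Under this reading, Gemini accounts for more than half of the $1,700 budget despite handling a minority of the phases.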

Use Case 4: Academic Research & Scientific Computing

Scenario: Graduate-level research requiring advanced mathematical reasoning

Why Gemini 3 Pro Is Essential

| Capability | Research Application |
|---|---|
| PhD-Level Reasoning | 37.5% Humanity's Last Exam (about 2.7× Claude's 13.7%) |
| Scientific Accuracy | 91.9% GPQA Diamond (exceeds human experts) |
| Mathematical Depth | 23.4% MathArena Apex (only viable option) |
| Multimodal Analysis | 81.4% CharXiv for chart/graph reasoning |

Research Workflow

Literature Review & Analysis:

1. Process 300+ papers with 1M context window

2. Extract methodologies and findings

3. Identify research gaps

4. Generate comprehensive summaries

Mathematical Modeling:

1. Develop novel algorithms

2. Prove theoretical properties

3. Optimize computational complexity

4. Validate against benchmarks

Data Analysis:

1. Statistical analysis of experiments

2. Visualization of complex data

3. Interpretation of results

4. Scientific writing assistance

Cost Analysis:

- Monthly research budget: ~$2,000

- Value delivered: Equivalent to additional PhD student

- ROI: High for grant-funded research

- Publication acceleration: 2-3× faster

2026 Industry Outlook

Current State of AI Models

Market Maturity: The AI model landscape has reached a new plateau of capability, with Claude 4.5 Sonnet and Gemini 3 Pro representing distinct evolutionary paths rather than direct competitors.

Anthropic’s Roadmap (Claude)

Projected Improvements:

- Enhanced algorithmic reasoning (targeting LiveCodeBench improvement)

- Expanded multimodal capabilities (video, audio)

- 1M context window general availability

- Broader rollout of Claude Opus 4.5 (released November 2025; see its system card in the References)

- Cost optimization through efficiency gains

Strategic Focus:

- Maintain coding excellence leadership

- Strengthen computer use capabilities

- Expand enterprise deployment

- Improve safety and alignment

Google’s Trajectory (Gemini)

Expected Developments:

- Gemini 3 Flash for cost-effective deployment

- Deep Think mode general availability

- Integration with more Google services

- Potential pricing adjustments (downward pressure)

- Enhanced agent capabilities

Market Position:

- Leverage ecosystem integration

- Expand multimodal dominance

- Target research and creative markets

- Balance premium positioning with competition

Emerging Trends

1. Agent-Centric Development:

- Both models prioritize autonomous multi-step workflows

- Tool use becoming standard capability

- Long-horizon task execution maturing

- Production-ready agent frameworks emerging

2. Multimodal Standardization:

- Text, image, video, and audio integration becoming the expected baseline

- Gemini’s lead may force competitive response

- Creative workflows transforming fundamentally

- Education and research applications expanding

3. Price Competition:

- Claude’s aggressive pricing pressuring market

- Gemini’s premium position may become untenable

- Expect significant price adjustments in 2026

- Efficiency improvements driving costs down

4. Specialization vs. Generalization:

- Claude: Doubling down on coding excellence

- Gemini: Maintaining broad capability leadership

- Market may support both strategies

- User choice increasingly workflow-dependent

Prediction: 2026 Model Landscape

Q1-Q2 2026:

- Claude Opus 4.5 adoption (released November 2025; see References)

- Gemini 3 Flash launch (cost-effective option)

- GPT-5 evolution continuing

- New Chinese models emerging (DeepSeek, etc.)

H2 2026:

- Claude 5 rumors intensifying

- Gemini 4 development likely

- Price wars accelerating

- Capability differentiation deepening

Key Metrics to Watch:

- SWE-bench scores approaching 85-90% (current leader: 77.2%)

- 1M+ token context becoming standard

- Sub-second response times at scale

- Cost per token continuing downward trajectory

Final Verdict

Executive Summary

- Claude 4.5 Sonnet: Best overall value, dominant in practical software engineering

- Gemini 3 Pro: Frontier research leader, multimodal excellence, premium pricing

- Both: Viable in production, mature ecosystems, strong safety records

Decision Framework

| Primary Use Case | Recommended Model | Confidence Level |
|---|---|---|
| DevOps & Infrastructure | Claude 4.5 Sonnet | Very High |
| Backend Development | Claude 4.5 Sonnet | High |
| Algorithm Development | Gemini 3 Pro | Very High |
| Frontend/UI Work | Gemini 3 Pro | High |
| Scientific Research | Gemini 3 Pro | Very High |
| Code Review | Claude 4.5 Sonnet | High |
| Documentation | Claude 4.5 Sonnet | Medium-High |
| Multimodal Apps | Gemini 3 Pro | Very High |

Personal Experience & Recommendations

As a DevOps engineer working extensively with both models, here’s my practical assessment:

For Daily Development Work ✅ Claude 4.5 Sonnet

Real-World Benefits:

1. Cost Efficiency:

- $780/month vs $8,100/month (90% savings)

- Pays for itself within the first week

2. Terminal Excellence:

- Kubernetes troubleshooting

- CI/CD pipeline optimization

- Infrastructure automation scripts

3. Practical Focus:

- Gets work done quickly

- Minimal over-engineering

- Production-ready code

4. Long-Horizon Capability:

- 30+ hour autonomous operation

- Complex deployment orchestration

- Reliable automation

For Specialized Tasks ✅ Gemini 3 Pro

Strategic Applications:

1. Algorithm Optimization:

- Performance-critical code paths

- Complex data structure design

- System architecture decisions

2. Research & Analysis:

- Competitive analysis

- Technical feasibility studies

- Advanced problem solving

3. Multimodal Requirements:

- Video processing pipelines

- UI/UX prototyping

- Interactive visualizations

4. Scientific Computing:

- Mathematical modeling

- Simulation design

- Data science workflows

The Hybrid Strategy (Optimal for Most Teams)

Cost-Optimized AI Workflow:

Primary Model: Claude 4.5 Sonnet (80% of tasks)

- Daily coding assistance

- Infrastructure management

- Code review and refactoring

- Testing and documentation

- Estimated cost: $600/month

Specialized Model: Gemini 3 Pro (20% of tasks)

- Algorithm development

- Frontend polish

- Research tasks

- Multimodal processing

- Estimated cost: $400/month

Total: $1,000/month

Savings vs. Gemini-only: $7,100/month

Quality maintained through strategic selection

When NOT to Use Each Model

Avoid Claude 4.5 Sonnet For:

- ❌ Competitive programming (LiveCodeBench: 1,418 Elo)

- ❌ Advanced mathematics (MathArena: 1.6%)

- ❌ PhD-level research (Humanity’s Last Exam: 13.7%)

- ❌ Complex video processing

- ❌ High-end UI/UX work

Avoid Gemini 3 Pro For:

- ❌ Budget-conscious projects (about 42× higher input-token price)

- ❌ High-volume automation (cost prohibitive)

- ❌ Time-sensitive iterations (slower response)

- ❌ Simple CRUD applications (overkill)

- ❌ Cost-sensitive startups

Final Recommendations by User Profile

| User Profile | Recommendation |
|---|---|
| Solo Developer | Start with Claude 4.5 Sonnet. Add Gemini for specific needs. |
| Startup Team | Claude for most work. Gemini for user-facing features. |
| Enterprise | Deploy both models with intelligent routing. |
| Researcher | Gemini 3 Pro is essential despite higher cost. |
| Algorithm Developer | Gemini 3 Pro mandatory for competitive advantage. |
| DevOps Engineer | Claude 4.5 Sonnet is purpose-built for your workflow. |
| Frontend Specialist | Gemini for polish, Claude for logic. |

The Bottom Line

Claude 4.5 Sonnet has earned its position as the default choice for practical software engineering through exceptional performance, unmatched cost efficiency, and production-ready reliability. At a roughly 42× lower input-token price and 77.2% on SWE-bench Verified, it delivers extraordinary value.

Gemini 3 Pro represents the frontier of AI reasoning, multimodal understanding, and algorithmic excellence. Its premium pricing is justified for specialized applications where its unique capabilities are essential.

For most developers: Claude 4.5 Sonnet is the clear winner, supplemented with Gemini 3 Pro for specific high-value tasks.

For the industry: Competition between these distinct approaches is driving rapid innovation and will benefit all users as capabilities increase and costs decrease throughout 2026.

The future of AI-assisted development isn’t choosing one model—it’s knowing when to use each one.

References

- Anthropic: Introducing Claude Sonnet 4.5

- Google DeepMind: Gemini 3 - Introducing the latest Gemini AI model

- Caylent: Claude Sonnet 4.5 - Highest-Scoring Claude Model Yet on SWE-bench

- DataCamp: Gemini 3 - Google’s Most Powerful LLM

- Simon Willison: Trying out Gemini 3 Pro with audio transcription and a new pelican benchmark

- Vellum AI: Google Gemini 3 Benchmarks (Explained)

- AceCloud: Claude Opus 4.5 Vs Gemini 3 Pro Vs Sonnet 4.5 Comparison Guide

- CometAPI: Gemini 3 Pro vs Claude 4.5 Sonnet for Coding - Which is Better in 2025

- InfoQ: Claude Sonnet 4.5 Tops SWE-Bench Verified, Extends Coding Focus beyond 30 Hours

- VentureBeat: Google unveils Gemini 3 claiming the lead in math, science, multimodal, and agentic AI benchmarks

- James Duncan: Gemini 3 Pro vs Claude Sonnet 4.5 - Antigravity IDE Review

- SWE-bench: Official Leaderboard

- LiveCodeBench: Leaderboard - Holistic and Contamination Free Evaluation

- Anthropic: Claude Opus 4.5 System Card

- Google DeepMind: Gemini 3 Pro Model Card
